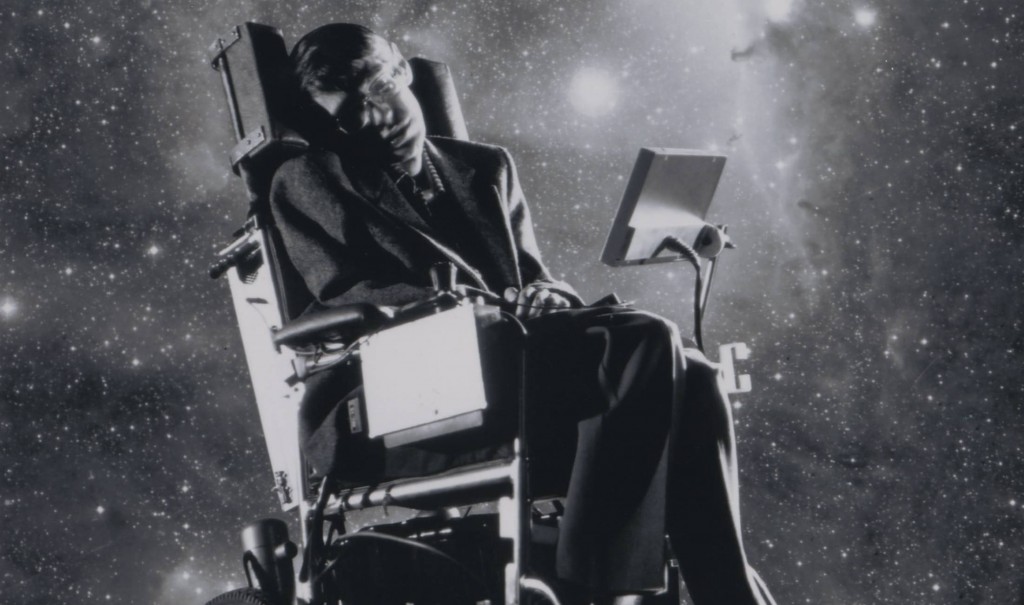

Stephen Hawking:

I would like to know a unified theory of gravity and the other forces. Which of the big questions in science would you like to know the answer to and why?

Mark Zuckerberg:

That’s a pretty good one!

I’m most interested in questions about people. What will enable us to live forever? How do we cure all diseases? How does the brain work? How does learning work, and how can we empower humans to learn a million times more?

I’m also curious about whether there is a fundamental mathematical law underlying human social relationships that governs the balance of who and what we all care about. I bet there is.

___________________________

Jeff Jarvis:

Mark: What do you think Facebook’s role is in news? I’m delighted to see Instant Articles and that it includes a business model to help support good journalism. What’s next?

Mark Zuckerberg:

People discover and read a lot of news content on Facebook, so we spend a lot of time making this experience as good as possible.

One of the biggest issues today is just that reading news is slow. If you’re using our mobile app and you tap on a photo, it typically loads immediately. But if you tap on a news link, since that content isn’t stored on Facebook and you have to download it from elsewhere, it can take 10+ seconds to load. People don’t want to wait that long, so a lot of people abandon news before it has loaded or just don’t even bother tapping on things in the first place, even if they wanted to read them.

That’s easy to solve, and we’re working on it with Instant Articles. When news is as fast as everything else on Facebook, people will naturally read a lot more news. That will be good for helping people be more informed about the world, and it will be good for the news ecosystem because it will deliver more traffic.

It’s important to keep in mind that Instant Articles isn’t a change we make by ourselves. We can release the format, but it will take a while for most publishers to adopt it. So when you ask about the “next thing”, it really is getting Instant Articles fully rolled out and making it the primary news experience people have.

___________________________

Ben Romberg:

Hi Mark, tell us more about the AI initiatives that Facebook is involved in.

Mark Zuckerberg:

Most of our AI research is focused on understanding the meaning of what people share.

For example, if you take a photo that has a friend in it, then we should make sure that friend sees it. If you take a photo of a dog or write a post about politics, we should understand that so we can show that post and help you connect to people who like dogs and politics.

In order to do this really well, our goal is to build AI systems that are better than humans at our primary senses: vision, listening, etc.

For vision, we’re building systems that can recognize everything that’s in an image or a video. This includes people, objects, scenes, etc. These systems need to understand the context of the images and videos as well as whatever is in them.

For listening and language, we’re focusing on translating speech to text, text between any languages, and also being able to answer any natural language question you ask.

This is a pretty basic overview. There’s a lot more we’re doing and I’m looking forward to sharing more soon.

___________________________

Jenni Moore:

Also, in 10 years’ time, what’s your view of the world? Where do you think we will all be from a technology and social media perspective?

Mark Zuckerberg:

In 10 years, I hope we’ve greatly improved how the world connects. We’re doing a few big things:

First, we’re working on spreading internet access around the world through Internet.org. This is the most basic tool people need to get the benefits of the internet — jobs, education, communication, etc. Today, almost 2/3 of the world has no internet access. In the next 10 years, Internet.org has the potential to help connect hundreds of millions or billions of people who do not have access to the internet today.

As a side point, research has found that for every 10 people who gain access to the internet, about 1 person is raised out of poverty. So if we can connect the 4 billion people in the world who are unconnected, we can potentially raise 400 million people out of poverty. That’s perhaps one of the greatest things we can do in the world.

Second, we’re working on AI because we think more intelligent services will be much more useful. For example, if we had computers that could understand the meaning of the posts in News Feed and show you more things you’re interested in, that would be pretty amazing. Similarly, if we could build computers that could understand what’s in an image and describe it to a blind person who otherwise couldn’t see it, that would be pretty amazing as well. This is all within our reach and I hope we can deliver it in the next 10 years.

Third, we’re working on VR because I think it’s the next major computing and communication platform after phones. In the future we’ll probably still carry phones in our pockets, but I think we’ll also have glasses on our faces that can help us out throughout the day and give us the ability to share our experiences with those we love in completely immersive and new ways that aren’t possible today.

Those are just three of the things we’re working on for the next 10 years. I’m pretty excited about the future.