It’s amusing that the truest thing Hillary Clinton has said during the election season–the “basket of deplorables” line–has caused her grief. The political rise of the hideous hotelier Donald Trump, from his initial announcement of his candidacy forward, has always been about identity politics (identity: white), with the figures of the forgotten, struggling Caucasians of Thomas Frank narratives more noise than signal.

The American middle class has legitimately lost ground over the past four decades, owing to a number of factors (globalization, computerization, tax rates, etc.), but the latest numbers show a huge rebound for middle- and lower-class Americans under President Obama and his worker-friendly policies.

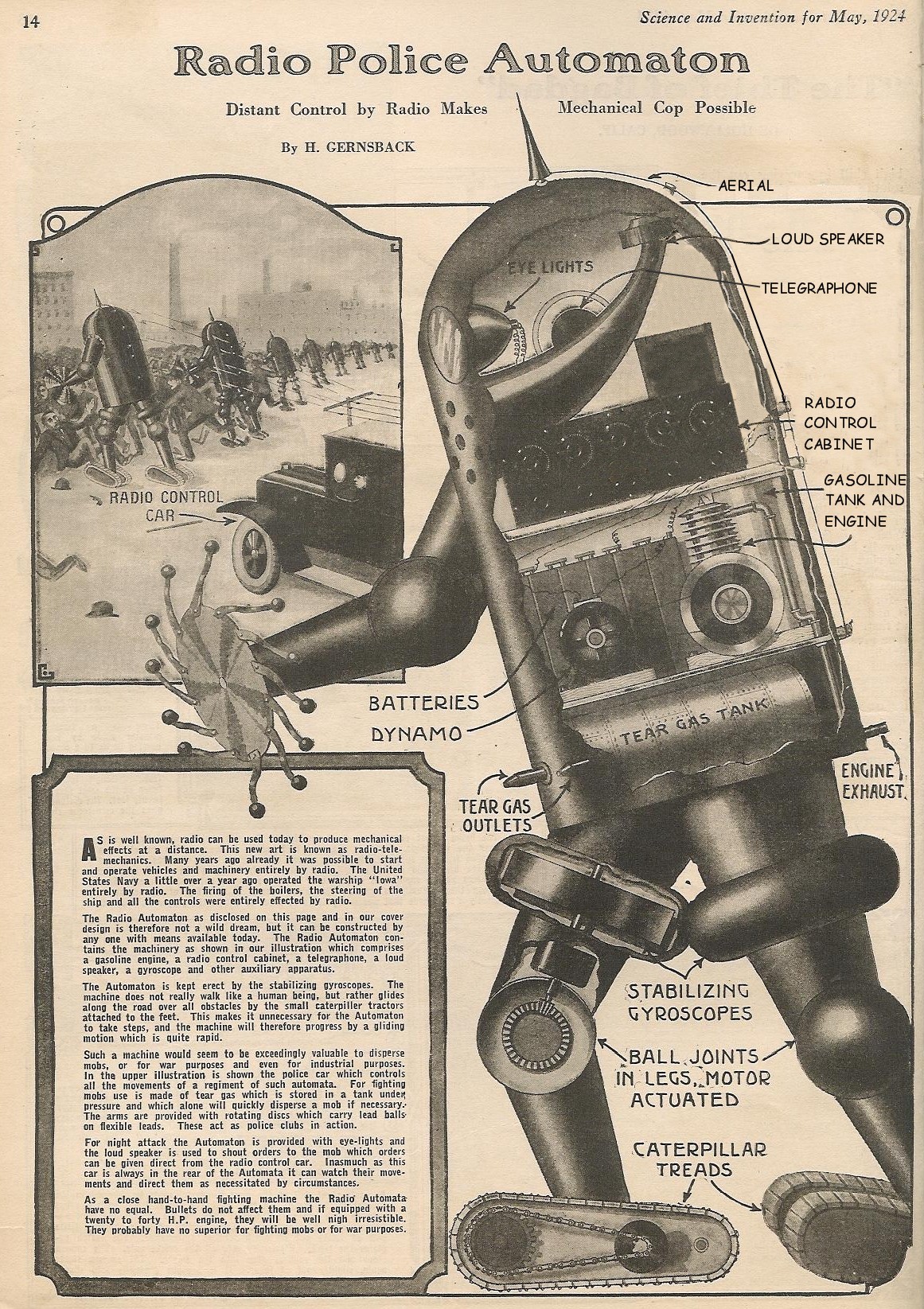

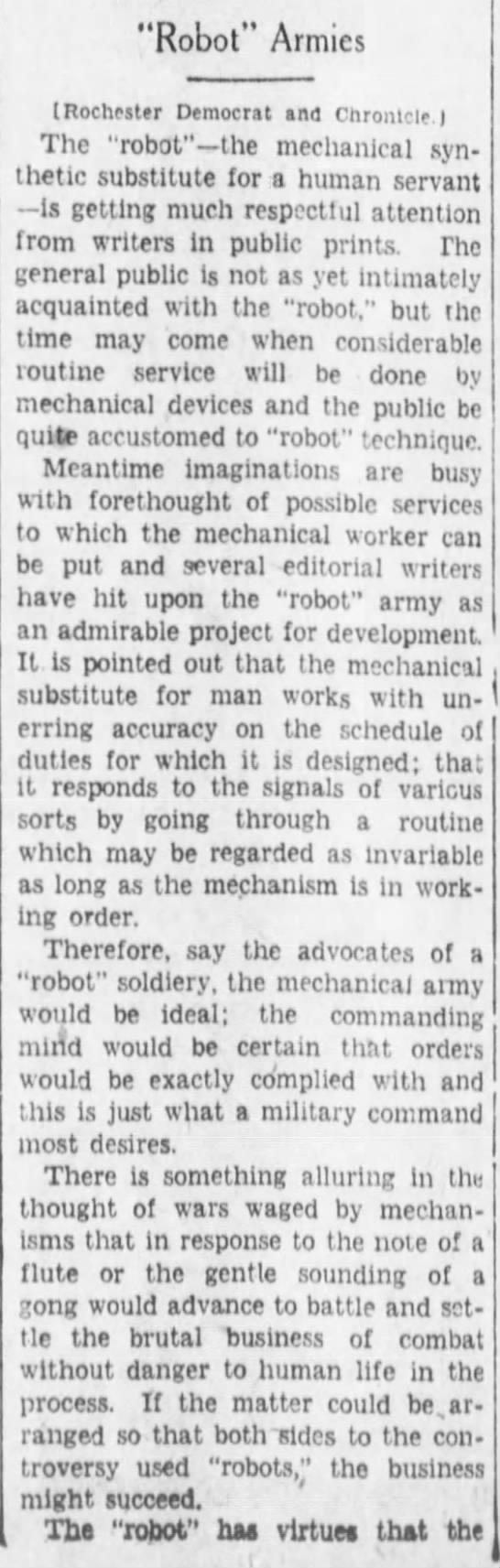

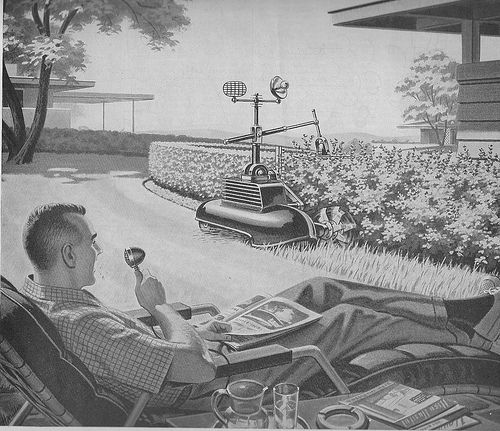

Perhaps that progress will be short-lived, with automation and robotics poised to alter the employment landscape repeatedly in the coming decades. But Digital Age challenges have been completely absent from Trump’s rhetoric (if he even knows about them). And his stated policies will reverse the gains made by the average American over the last eight years. His ascent has always been about color and not the color of money.

From Sam Fleming at the Financial Times:

Household incomes surged last year in the US, suggesting American middle class fortunes are improving in defiance of the dark rhetoric that has dominated the presidential election campaign.

A strengthening labour market, higher wages and persistently subdued inflation pushed real median household income up 5.2 per cent between 2014 and 2015 to $56,516, the Census Bureau said on Tuesday. This marked the first gain since the eve of the global financial crisis in 2007 and the first time that inflation-adjusted growth exceeded 5 per cent since the bureau’s records began in 1967.

But the increase in 2015 still brought incomes to just 1.6 per cent below the levels they were hovering at the year before the recession started and they remain 2.4 per cent below their peak in 1999. Income gains were largest at the bottom and middle of the income scale relative to the top, reducing income inequality.

The US election debate has been dominated by the story of long-term income stagnation, with analysts attributing the rise of Donald Trump in part to the shrinking ranks of America’s middle class, rising inequality and the impact of globalisation on household incomes. Tuesday’s strong numbers, which cover the year in which the Republican candidate launched his campaign, cast that narrative in a new light.•