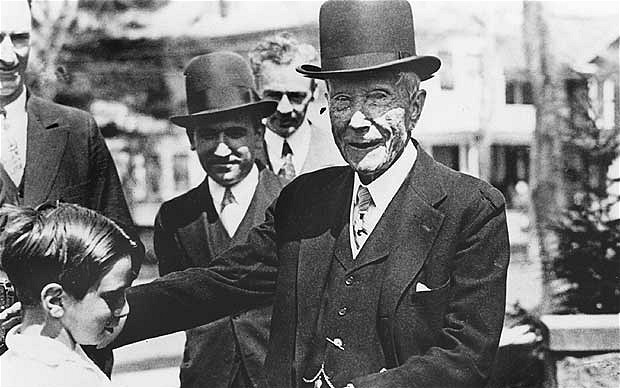

In America, ridiculously rich people are considered oracles, whether they deserve to be or not.

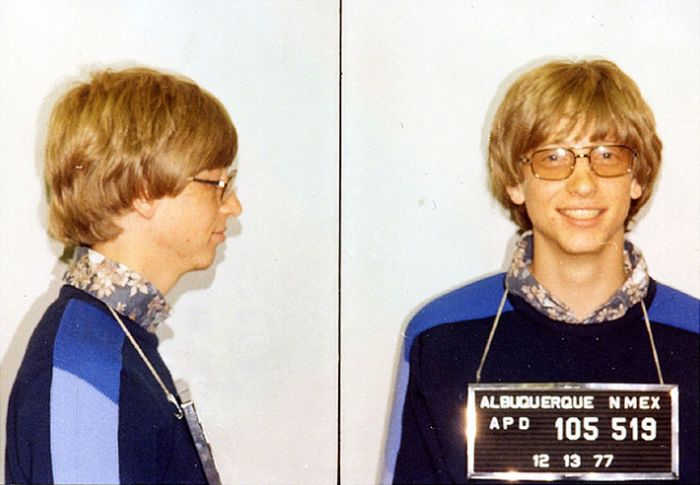

Bill Gates probably earns that status more than most. He was a raging a-hole when engaged full time as a businessperson at Microsoft, but he’s done as much good for humanity as anyone likely can in his 2.0 reboot as an avuncular philanthropist. Gates just did one of his wide-ranging Reddit AMAs. A few exchanges follow.

_____________________________

Question:

What do you see human society accomplishing in the next 20 years? What are you most excited for?

Bill Gates:

I will mention three things.

First is an energy innovation to lower the cost and get rid of greenhouse gases. This isn’t guaranteed so we need a lot of public and private risk taking.

EDIT: I talked about this recently in my annual letter.

Second is progress on disease particularly infectious disease. Polio, Malaria, HIV, TB, etc. are all diseases we should be able to either eliminate or bring down close to zero. There is amazing science that makes us optimistic this will happen.

Third are tools to help make education better – to help teachers learn how to teach better and to help students learn and understand why they should learn and reinforce their confidence.

_____________________________

Question:

Hey Bill! Has there been a problem or challenge that’s made you, as a billionaire, feel completely powerless? Did you manage to overcome it, and if so, how?

Bill Gates:

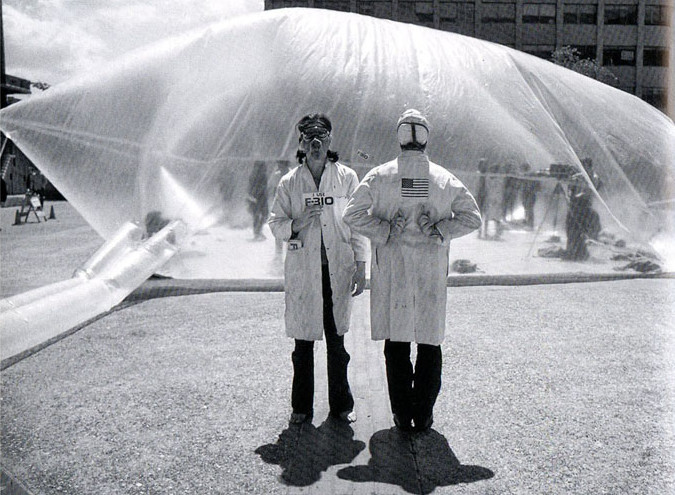

The problem of how we prevent a small group of terrorists using nuclear or biological means to kill millions is something I worry about. If Government does their best work they have a good chance of detecting it and stopping it but I don’t think it is getting enough attention and I know I can’t solve it.

_____________________________

Question:

What’s your take on the recent FBI/Apple situation?

Bill Gates:

I think there needs to be a discussion about when the government should be able to gather information. What if we had never had wiretapping? Also the government needs to talk openly about safeguards. Right now a lot of people don’t think the government has the right checks to make sure information is only used in criminal situations. So this case will be viewed as the start of a discussion. I think very few people take the extreme view that the government should be blind to financial and communication data but very few people think giving the government carte blanche without safeguards makes sense. A lot of countries like the UK and France are also going through this debate. For tech companies there needs to be some consistency including how governments work with each other. The sooner we modernize the laws the better.

_____________________________

Question:

Some people (Elon Musk, Stephen Hawking, etc) have come out in favor of regulating Artificial Intelligence before it is too late. What is your stance on the issue, and do you think humanity will ever reach a point where we won’t be able to control our own artificially intelligent designs?

Bill Gates:

I haven’t seen any concrete proposal on how you would do the regulation. I think it is worth discussing because I share the view of Musk and Hawking that when a few people control a platform with extreme intelligence it creates dangers in terms of power and eventually control.

_____________________________

Question:

How soon do you think quantum computing will catch on, and what do you think about the future of cryptography if it does? Thanks!

Bill Gates:

Microsoft and others are working on quantum computing. It isn’t clear when it will work or become mainstream. There is a chance that within 6-10 years cloud computing will offer super-computation by using quantum. It could help us solve some very important science problems including materials and catalyst design.

_____________________________

Question:

You have previously said that, through organizations like Khan Academy and Wikipedia and the Internet in general, getting access to knowledge is now easier than ever. While that is certainly true, K-12 education seems to have stayed frozen in time. How do you think the school system will or should change in the decades to come?

Bill Gates:

I agree that our schools have not improved as much as we want them to. There are a lot of great teachers but we don’t do enough to figure out what they do so well and make sure others benefit from that. Most teachers get very little feedback about what they do well and what they need to improve including tools that let them see what the exemplars are doing.

Technology is starting to improve education. Unfortunately so far it is mostly the motivated students who have benefited from it. I think we will get tools like personalized learning to all students in the next decade.

A lot of the issue is helping kids stay engaged. If they don’t feel the material is relevant or they don’t have a sense of their own ability they can check out too easily. The technology has not done enough to help with this yet.

_____________________________

Question:

What’s a fantasy technological advancement you wish existed?

Bill Gates:

I recently saw a company working on “robotic” surgery where the ability to work at small scales was stunning. The idea that this will make surgeries higher quality, faster and less expensive is pretty exciting. It will probably take a decade before this gets mainstream – to date it has mostly been used for prostate surgery.

In the Foundation work there are a lot of tools we are working on we don’t have yet. For example an implant to protect a woman from getting HIV because it releases a protective drug.

_____________________________

Question:

What’s a technological advancement that’s come about in the past few years that you think we were actually better off without?

Bill Gates:

I am concerned about biological tools that could be used by a bioterrorist. However the same tools can be used for good things as well.

Some people think Hoverboards were bad because they caught on fire. I never got to try one.