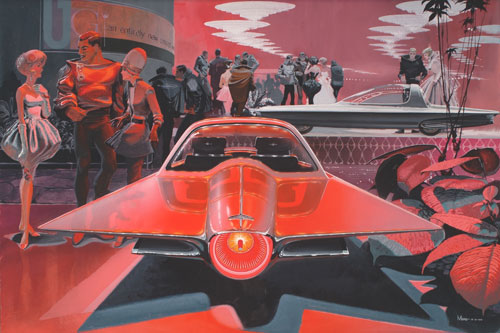

The past isn’t necessarily prologue. Sometimes there’s a clean break from history. The Industrial Age transformed labor, moving us from an agrarian culture to an urban one and creating jobs in fields that didn’t previously exist: advertising, marketing, auto repair, etc. That doesn’t mean the Digital Age will follow suit. Much of manufacturing, construction, driving and other fields will eventually fall to automation, probably sooner rather than later, and Udacity won’t be able to rapidly transition everyone into a Self-Driving Car Engineer. That type of upskilling can take generations to complete.

Not every job has to vanish. Just enough need disappear to push unemployment scarily high and cause social unrest. And those who believe Universal Basic Income is a panacea must beware of truly bad versions of such programs, which can end up harming more than helping.

Radical abundance doesn’t have to be a bad thing, of course. It should be a very good one. But we’ve never managed plenty in America very well, and this level would be on an entirely different scale.

Excerpts from two articles on the topic.

From Giles Wilkes’ Economist review of Ryan Avent’s The Wealth of Humans:

What saves this work from overreach is the insistent return to the problem of abundant human labour. The thesis is rather different from the conventional, Malthusian miserabilism about burgeoning humanity doomed to near-starvation, with demand always outpacing supply. Instead, humanity’s growing technical capabilities will render the supply of what workers produce, be that physical products or useful services, ever more abundant and with less and less labour input needed. At first glance, worrying about such abundance seems odd; how typical that an economist should find something dismal in plenty.

But while this may be right when it is a glut of land, clean water, or anything else that is useful, there is a real problem when it is human labour. For the role work plays in the economy is two-sided, responsible both for what we produce, and providing the rights to what is made. Those rights rely on power, and power in the economic system depends on scarcity. Rob human labour of its scarcity, and its position in the economic hierarchy becomes fragile.

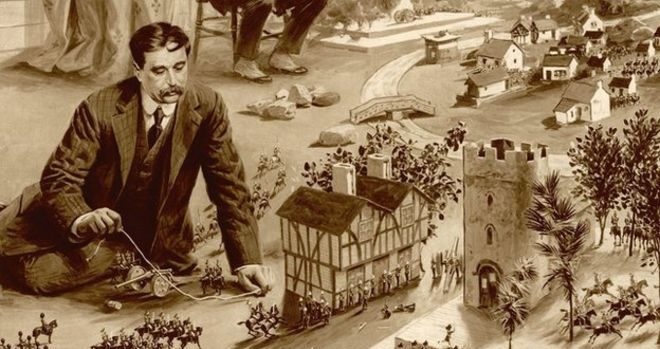

A good deal of the Wealth of Humans is a discussion on what is increasingly responsible for creating value in the modern economy, which Mr Avent correctly identifies as “social capital”: that intangible matrix of values, capabilities and cultures that makes a company or nation great. Superlative businesses and nation states with strong institutions provide a secure means of getting well-paid, satisfying work. But access to the fruits of this social capital is limited, often through the political system. Occupational licensing, for example, prevents too great a supply of workers taking certain protected jobs, and border controls achieve the same at a national level. Exceptional companies learn how to erect barriers around their market. The way landholders limit further development provides a telling illustration: during the San Francisco tech boom, it was the owners of scarce housing who benefited from all that feverish innovation. Forget inventing the next Facebook, be a landlord instead.

Not everyone can, of course, which is the core problem the book grapples with. Only a few can work at Google, or gain a Singaporean passport, inherit property in London’s Mayfair or sell $20 cheese to Manhattanites. For the rest, there is a downward spiral: in a sentence, technological progress drives labour abundance, this abundance pushes down wages, and every attempt to fight it will encourage further substitution towards alternatives.•

From Duncan Jefferies’ Guardian article “The Automated City”:

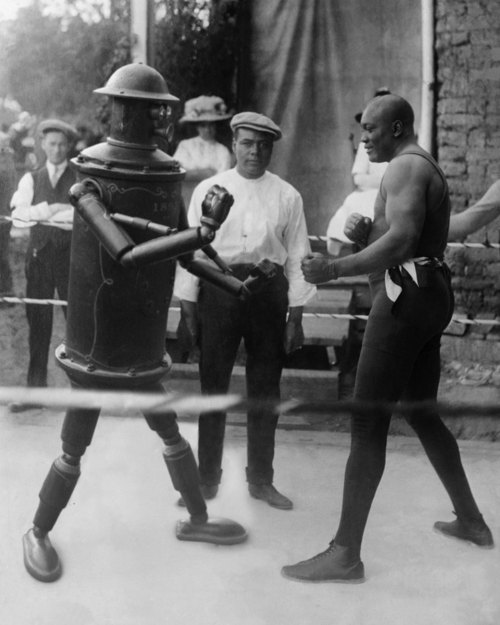

Enfield council is going one step further – and her name is Amelia. She’s an “intelligent personal assistant” capable of analysing natural language, understanding the context of conversations, applying logic, resolving problems and even sensing emotions. She’s designed to help residents locate information and complete application forms, as well as simplify some of the council’s internal processes. Anyone can chat to her 24/7 through the council’s website. If she can’t answer something, she’s programmed to call a human colleague and learn from the situation, enabling her to tackle a similar question unaided in future.

Amelia is due to be deployed later this year, and is supposed to be 60% cheaper than a human employee – useful when you’re facing budget cuts of £56m over the next four years. Nevertheless, the council claims it has no plans to get rid of its 50 call centre workers.

The Singaporean government, in partnership with Microsoft, is also planning to roll out intelligent chatbots in several stages: at first they will answer simple factual questions from the public, then help them complete tasks and transactions, before finally responding to personalised queries.

Robinson says that, while artificially intelligent chatbots could have a role to play in some areas of public service delivery: “I think we overlook the value of a quality personal relationship between two people at our peril, because it’s based on life experience, which is something that technology will never have – certainly not current generations of technology, and not for many decades to come.”

But whether everyone can be “upskilled” to carry out more fulfilling work, and how many staff will actually be needed as robots take on more routine tasks, remains to be seen.•