If you’re not concerned about AI safety, you should be. Vastly more risk than North Korea. pic.twitter.com/2z0tiid0lc

— Elon Musk (@elonmusk) August 12, 2017

“Elon Musk is a 21st-century genius — you have to listen to what he says,” asserted Richard Dawkins in a recent Scientific American interview, as one brilliant person with often baffling stances paid homage to another. The “21st-century” part is particularly interesting, as it seems to confer upon Musk a new type of knowledge beyond questioning. Musk, however, needs very much to be questioned.

Someone who taught himself rocketry, as the SpaceX founder did, is certainly very smart in many ways, but it might really be better for America if we stopped looking to billionaires for the answers to our problems. Perhaps the very existence of billionaires is the problem?

Musk has many perplexing opinions that run the gamut from politics to existential risks to space travel. Walter Isaacson has lauded him as a new Benjamin Franklin, but there’s no way the Founding Father would have cozied up to a fledgling fascist like Trump, believing he could somehow control a person more combustible than a rocket. Franklin knew that we all have to hang together against tyranny or we would all hang separately.

The plans Musk has for his rockets also are increasingly erratic and questionable. As he jumps his timeline for launches from this year to that one, there’s some question of whether humans should be traveling to Mars at all, especially in the near future. Why not use relatively cheap robotics to massively explore and develop the universe over the next several decades rather than rushing humans into an incredibly inhospitable environment?

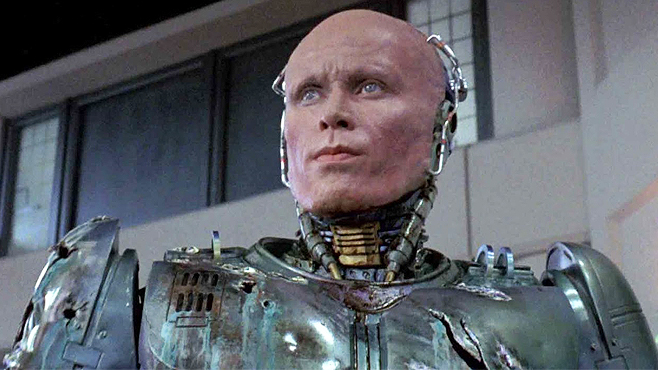

Musk’s answer is that we are sitting ducks for an existential threat, either of our own making or a natural one. That’s true and always will be. Since going on a Bostrom bender a few years back, the entrepreneur’s main fear seems to be that Artificial Intelligence will be the doom of human beings. This dark future is possible in the longer term but no sure thing, and as I sit here typing this today climate change is a far greater threat. Musk’s work with the Powerwall and EVs may well help us avoid some of this human-made scourge (that is surely his greatest contribution to society), but his outsize concern about AI is distracting. Threats from machine intelligence should be a priority but not nearly the top priority.

Something very sinister is quietly baked into the Nick Bostrom view of human extinction, to which Musk adheres: the idea that climate change and nuclear holocaust, say, would still leave some humans to soldier on, even if hundreds of millions or billions are killed off. That’s why something that could theoretically end the entire species, like AI, is given precedence over more pressing matters. That’s insane and immoral.

The opening of a Samuel Gibbs Guardian article:

Elon Musk has warned again about the dangers of artificial intelligence, saying that it poses “vastly more risk” than the apparent nuclear capabilities of North Korea does.

The Tesla and SpaceX chief executive took to Twitter to once again reiterate the need for concern around the development of AI, following the victory of Musk-led AI development over professional players of the Dota 2 online multiplayer battle game.

This is not the first time Musk has stated that AI could potentially be one of the most dangerous international developments. He said in October 2014 that he considered it humanity’s “biggest existential threat”, a view he has repeated several times while making investments in AI startups and organisations, including OpenAI, to “keep an eye on what’s going on”.

Musk again called for regulation, previously doing so directly to US governors at their annual national meeting in Providence, Rhode Island.

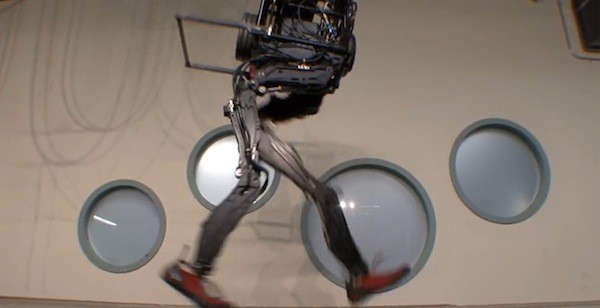

Musk’s tweets coincide with the testing of an AI designed by OpenAI to play the multiplayer online battle arena (Moba) game Dota 2, which successfully managed to win all its 1-v-1 games at the International Dota 2 championships against many of the world’s best players competing for a $24.8m (£19m) prize fund.

The AI displayed the ability to predict where human players would deploy forces and improvise on the spot, in a game where sheer speed of operation does not correlate with victory, meaning the AI was simply better, not just faster than the best human players.•