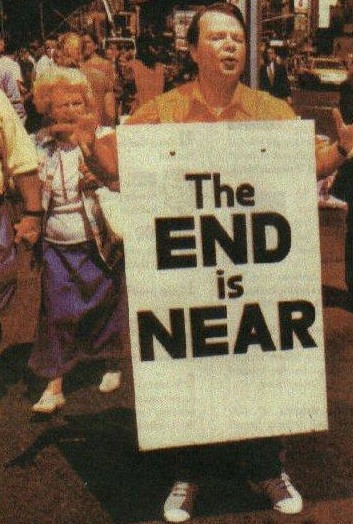

I think, sadly, that Aubrey de Grey will die soon, as will the rest of us. A-mortality is probably theoretically possible, though I think it will be a while. But the SENS radical gerontologist has probably done more than anyone to get people to rethink aging as a disease to be cured rather than an inevitability to be endured. In the scheme of things, that paradigm shift has enormous (and hopefully salubrious) implications. De Grey just did an Ask Me Anything on Reddit. A few exchanges follow.

__________________________

Question:

What is the likelihood that someone who is 40 (30? 20? 10?) today will have their life significantly extended to the point of practical immortality?

Is it a slow, but rapidly rising collusion of things that are going to cause this, or is it something that is going to kind of snap into effect one day?

Will the technology be accessible to everyone, or will it be reserved for the rich?

What are your thoughts on cryonics?

What is your personal preferred method of achieving practical immortality? Nanotechnology? Cyborgs? Something else?

Aubrey de Grey:

I’d put it at 60, 70, 80, 90% respectively.

Kind of snap, in that we will reach longevity escape velocity.

For everyone, absolutely for certain.

Cryonics (not cryogenics) is a totally reasonable and valid research area and I am signed up with Alcor.

Anything that works! – but I expect SENS to get there first.

__________________________

Question:

Before defeating aging, what if we were to first defeat cardiovascular disease or cancer or Alzheimer’s disease? Do you think this would be enough to make people snap out of their “pro-aging trance” and be more optimistic about the feasibility & desirability of SENS and other rejuvenation therapies?

EDIT: Do you think people would be more convinced by more cosmetic rejuvenation therapies instead (reversal of hair loss/graying, reduction of wrinkles and spots in the skin)?

Aubrey de Grey:

Not a chance. People’s main problem is that they have a microbe in their brains called “aging” that they think means something distinct from diseases. The only way that will change is big life extension in mice.

__________________________

Question:

Long life for our ability to continue to develop ourselves, explore the world, gain knowledge, and create is great. The goal however has different paths, from genetic manipulation to body cyborgization. Some speak of mind uploading but who knows if that’s possible, as mind transfer implies dualism.

Is one preferable over another?

And what is your opinion on the potential of our unstable interconnected world to negatively impact our potential for progress from things like ecological collapse, global warming, etc.? I feel like it’s a race between disaster and scientific progress. Can we outrun chaos? Or is this a false dichotomy, and maybe the future is a world of suffering in which a few individuals have military-grade cyborg tech?

Aubrey de Grey:

I don’t think mind transfer necessarily implies dualism, and I’m all for exploring all options.

I am quite sure we can outrun chaos.

__________________________

Question:

I’ve been learning more and more lately about the work that you do in the fight to end aging, and fully believe that it is both possible and just over the horizon. How can the general public get involved in the fight other than donating?

Aubrey de Grey:

Money is the bottleneck, I’m afraid, so the next best thing to donating is getting others to donate.

__________________________

Question:

We really appreciate all your work. Some people have expressed concerns that these anti-aging techniques and treatments won’t be available to everyone, but only to the extremely wealthy. Are there strategies to prevent this?

Aubrey de Grey:

Yes – they are called elections. Those in power want to stay there.