How long will it be until robots walk among us, handling the drudgery and making us all unemployed hobos? It probably depends on how much time the geniuses at MIT waste conducting Ask Me Anythings at Reddit. Ross Finman, Patrick R. Barragán and Ariel Anders, three young roboticists at the school, just did such a Q&A. A few exchanges follow.

____________________________________

Question:

- How far away are we from robo-assisted “personal care”?

- Given the chance, would either of you augment (with current and newly developed equipment) yourselves, and if so: to what extent?

Ross Finman:

1) Well… cop-out answer, but it depends. Fully autonomous health care robots that would fully displace human health care professionals are decades away. The level of difficulty in that job (and difficulty for robots is deviation) is immense. Smaller aspects can be automated, but as a whole, a long time.

2) I would love to augment my brain with access to the internet. Hitting a problem and then taking the time to go and search online for the solution is so inefficient. If that could be done in thoughts, that would be awesome! Also, one of my friends is working on a wearable version of Facebook that could remind you when you know someone. Would avoid those awkward situations when you pretend to know someone.

____________________________________

Question:

What is currently the most challenging aspect of developing artificial intelligence? (i.e. What are the roadblocks to me getting a mechanical slave?)

Patrick R. Barragán:

This question is pretty general, and most people will have different answers on the topic. I think there are many big problems with developing AI. I think one is representation. It is hard in a general way to think about how to represent a problem or parts of a problem to even begin to think about how to solve it. For example, what are the real differences between a cup and a bowl, even if humans can easily distinguish them? There is a representation question there for one very specific type of problem. On the other end of the spectrum, how to deal with the huge amount of information that we humans get every moment of every day in the context of a robot or computer is also unclear. What do you pay attention to? What do you ignore? How much do you process all the little things that happen? How do you later reuse information that you learned? How do you learn it in the first place?

____________________________________

Question:

I remember reading this article about robot sex becoming a mainstream thing in 2050, according to a few robotics experts:

http://www.digitaljournal.com/article/256430

Do you agree or disagree with this assertion?

Patrick R. Barragán:

I guess it’s possible, but if that is where we end up first on this train, I would be surprised. The article that you linked to suggests that people have built robots for all kinds of things, and it suggests that those robots work, are deployed, and are now solutions to problems. Those suggestions, which pervade media stories about robotics, are not accurate. We have produced demonstrations of robots that can do certain things, but those sorts of robots that might sound like precursors before we get to “important” sex robots don’t exist in any general way yet.

Also, I don’t know anyone who is working on it or thinks they should be.

____________________________________

Question:

Do you think programming should be a required course in all American schools? Do you believe everyone can benefit from knowing programming skills?

Ariel Anders:

Required? No. Beneficial? Definitely.

____________________________________

Question:

Will a computer be able to learn from its mistakes in the future?

Ross Finman:

Will humans be able to learn from their mistakes in the future?•

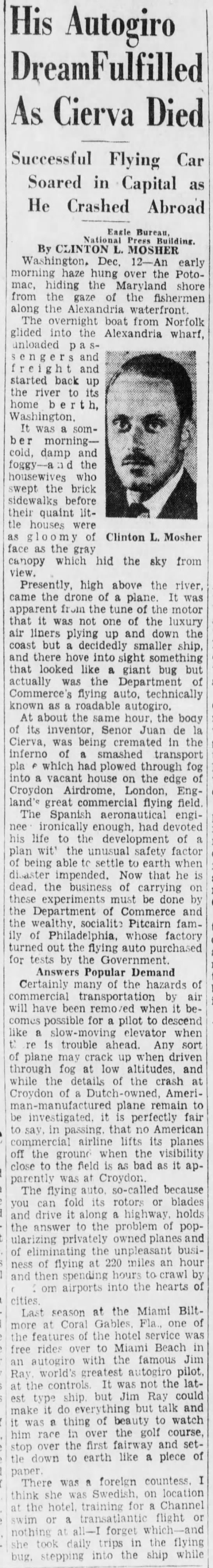

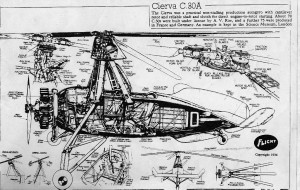

For some reason, people long for their cars to fly. In the 1930s it was believed that Spanish aviator Juan de la Cierva had made the dream come true, although he coincidentally died in an air accident in Amsterdam just as his roadable flying machine was proving a success in Washington D.C.
