If Brad Darrach hadn’t also profiled Bobby Fischer, the autonomous robot named Shakey would have been the most famous malfunctioning machine he ever wrote about.

The John Markoff Harper’s piece I posted about earlier made mention of Shakey, the so-called “first electronic person,” which struggled to take baby steps on its own during the 1960s at the Stanford Research Institute. The machine’s intelligence was glacial, and much to the chagrin of its creators, it did not show rapid progress in the years that followed, as Moore’s Law forgot to do its magic.

Although Darrach’s 1970 Life piece mostly focuses on the Palo Alto area, it ventures to the other coast to record this extravagantly wrong prediction allegedly made by MIT genius Marvin Minsky: “In from three to eight years we will have a machine with the general intelligence of an average human being. I mean a machine that will be able to read Shakespeare, grease a car, play office politics, tell a joke, have a fight.” The thing is, Minsky immediately and vehemently denied the quote, and since the veracity of other parts of the piece was also questioned, I believe his disavowal.

The Life article (which misspelled the robot’s name as “Shaky”), for its many flaws and ethical lapses, did sagely acknowledge the potential for a post-work world and the advent of superintelligence and the challenges those developments might bring. The opening:

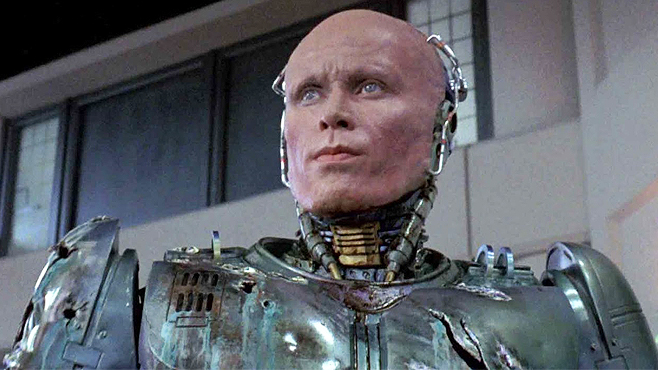

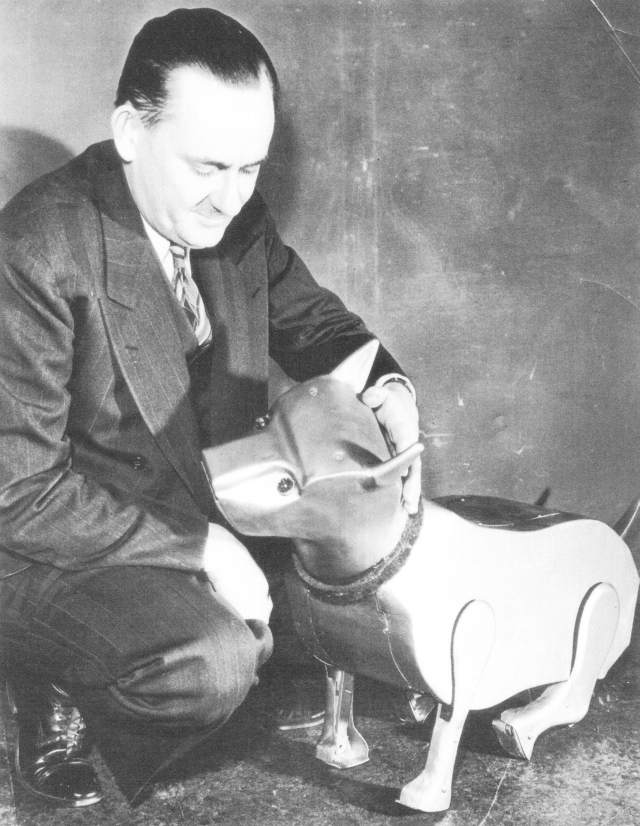

It looked at first glance like a Good Humor wagon sadly in need of a spring paint job. But instead of a tinkly little bell on top of its box-shaped body there was this big mechanical whangdoodle that came rearing up, full of lenses and cables, like a junk sculpture gargoyle.

“Meet Shaky,” said the young scientist who was showing me through the Stanford Research Institute. “The first electronic person.”

I looked for a twinkle in the scientist’s eye. There wasn’t any. Sober as an equation, he sat down at an input terminal and typed out a terse instruction which was fed into Shaky’s “brain,” a computer set up in a nearby room: PUSH THE BLOCK OFF THE PLATFORM.

Something inside Shaky began to hum. A large glass prism shaped like a thick slice of pie and set in the middle of what passed for his face spun faster and faster till it dissolved into a glare, then his superstructure made a slow 360-degree turn and his face leaned forward and seemed to be staring at the floor. As the hum rose to a whir, Shaky rolled slowly out of the room, rotated his superstructure again and turned left down the corridor at about four miles an hour, still staring at the floor.

“Guides himself by watching the baseboards,” the scientist explained as he hurried to keep up. At every open door Shaky stopped, turned his head, inspected the room, turned away and idled on to the next open door. In the fourth room he saw what he was looking for: a platform one foot high and eight feet long with a large wooden block sitting on it. He went in, then stopped short in the middle of the room and stared for about five seconds at the platform. I stared at it too.

“He’ll never make it,” I found myself thinking. “His wheels are too small.” All at once I got gooseflesh. “Shaky,” I realized, “is thinking the same thing I am thinking!”

Shaky was also thinking faster. He rotated his head slowly till his eye came to rest on a wide shallow ramp that was lying on the floor on the other side of the room. Whirring briskly, he crossed to the ramp, semicircled it and then pushed it straight across the floor till the high end of the ramp hit the platform. Rolling back a few feet, he cased the situation again and discovered that only one corner of the ramp was touching the platform. Rolling quickly to the far side of the ramp, he nudged it till the gap closed. Then he swung around, charged up the slope, located the block and gently pushed it off the platform.

Compared to the glamorous electronic elves who trundle across television screens, Shaky may not seem like much. No death-ray eyes, no secret transistorized lust for nubile lab technicians. But in fact he is a historic achievement. The task I saw him perform would tax the talents of a lively 4-year-old child, and the men who over the last two years have headed up the Shaky project—Charles Rosen, Nils Nilsson and Bert Raphael—say he is capable of far more sophisticated routines. Armed with the right devices and programmed in advance with basic instructions, Shaky could travel about the moon for months at a time and, without a single beep of direction from the earth, could gather rocks, drill cores, make surveys and photographs and even decide to lay plank bridges over crevices he had made up his mind to cross.

The center of all this intricate activity is Shaky’s “brain,” a remarkably programmed computer with a capacity of more than 1 million “bits” of information. In defiance of the soothing conventional view that the computer is just a glorified abacus that cannot possibly challenge the human monopoly of reason, Shaky’s brain demonstrates that machines can think. Variously defined, thinking includes such processes as “exercising the powers of judgment” and “reflecting for the purpose of reaching a conclusion.” In some of these respects—among them powers of recall and mathematical agility—Shaky’s brain can think better than the human mind.

Marvin Minsky of MIT’s Project Mac, a 42-year-old polymath who has made major contributions to Artificial Intelligence, recently told me with quiet certitude, “In from three to eight years we will have a machine with the general intelligence of an average human being. I mean a machine that will be able to read Shakespeare, grease a car, play office politics, tell a joke, have a fight. At that point the machine will begin to educate itself with fantastic speed. In a few months it will be at genius level and a few months after that its powers will be incalculable.”

I had to smile at my instant credulity—the nervous sort of smile that comes when you realize you’ve been taken in by a clever piece of science fiction. When I checked Minsky’s prophecy with other people working on Artificial Intelligence, however, many of them said that Minsky’s timetable might be somewhat wishful—“give us 15 years,” was a common remark—but all agreed that there would be such a machine and that it could precipitate the third Industrial Revolution, wipe out war and poverty and roll up centuries of growth in science, education and the arts. At the same time a number of computer scientists fear that the godsend may become a Golem. “Man’s limited mind,” says Minsky, “may not be able to control such immense mentalities.”•