Roboticist Daniel H. Wilson explains that eventually you’ll have the implant.


Tags: Daniel H. Wilson

From “Our Biotech Future,” Freeman Dyson’s 2007 New York Review of Books essay, in which the scientist ponders the possibilities that will result from genetic engineering being conducted by the general public:

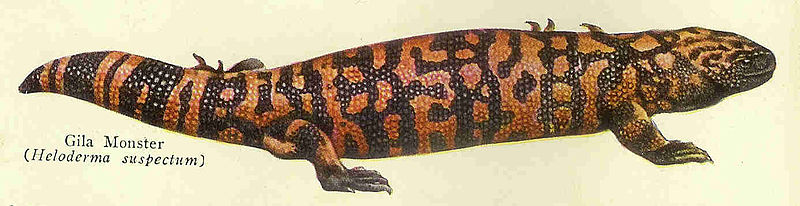

“Domesticated biotechnology, once it gets into the hands of housewives and children, will give us an explosion of diversity of new living creatures, rather than the monoculture crops that the big corporations prefer. New lineages will proliferate to replace those that monoculture farming and deforestation have destroyed. Designing genomes will be a personal thing, a new art form as creative as painting or sculpture.

Few of the new creations will be masterpieces, but a great many will bring joy to their creators and variety to our fauna and flora. The final step in the domestication of biotechnology will be biotech games, designed like computer games for children down to kindergarten age but played with real eggs and seeds rather than with images on a screen. Playing such games, kids will acquire an intimate feeling for the organisms that they are growing. The winner could be the kid whose seed grows the prickliest cactus, or the kid whose egg hatches the cutest dinosaur. These games will be messy and possibly dangerous. Rules and regulations will be needed to make sure that our kids do not endanger themselves and others. The dangers of biotechnology are real and serious.

If domestication of biotechnology is the wave of the future, five important questions need to be answered. First, can it be stopped? Second, ought it to be stopped? Third, if stopping it is either impossible or undesirable, what are the appropriate limits that our society must impose on it? Fourth, how should the limits be decided? Fifth, how should the limits be enforced, nationally and internationally? I do not attempt to answer these questions here. I leave it to our children and grandchildren to supply the answers.”

Tags: Freeman Dyson

Interesting article in the Economist predicting that doctors will lose their primacy in an increasingly wired world with a growing, graying population:

“The past 150 years have been a golden age for doctors. In some ways, their job is much as it has been for millennia: they examine patients, diagnose their ailments and try to make them better. Since the mid-19th century, however, they have enjoyed new eminence. The rise of doctors’ associations and medical schools helped separate doctors from quacks. Licensing and prescribing laws enshrined their status. And as understanding, technology and technique evolved, doctors became more effective, able to diagnose consistently, treat effectively and advise on public-health interventions—such as hygiene and vaccination—that actually worked.

This has brought rewards. In developed countries, excluding America, doctors with no speciality earn about twice the income of the average worker, according to McKinsey, a consultancy. America’s specialist doctors earn ten times America’s average wage. A medical degree is a universal badge of respectability. Others make a living. Doctors save lives, too.

With the 21st century certain to see soaring demand for health care, the doctors’ star might seem in the ascendant still. By 2030, 22% of people in the OECD club of rich countries will be 65 or older, nearly double the share in 1990. China will catch up just six years later. About half of American adults already have a chronic condition, such as diabetes or hypertension, and as the world becomes richer the diseases of the rich spread farther. In the slums of Calcutta, infectious diseases claim the young; for middle-aged adults, heart disease and cancer are the most common killers. Last year the United Nations held a summit on health (only the second in its history) that gave warning about the rising toll of chronic disease worldwide.

But this demand for health care looks unlikely to be met by doctors in the way the past century’s was.”

••••••••••

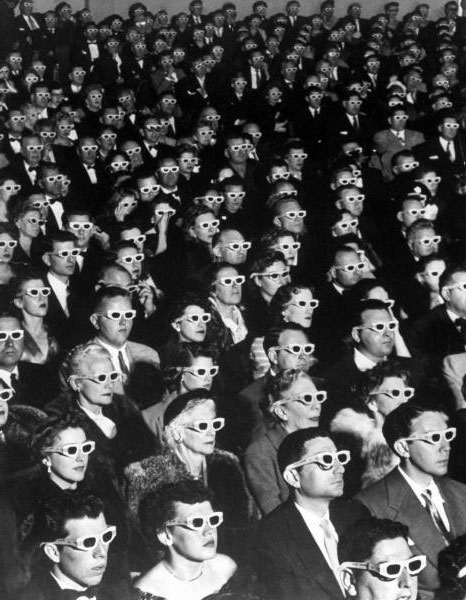

“Man…woman…birth…death…infinity”:

Guy Debord directed this 1973 adaptation of his book, Society of the Spectacle. Um, some generalizations.

Tags: Guy Debord

From Vernor Vinge’s famous 1993 essay, “The Coming Technological Singularity: How to Survive in the Post-Human Era,” which popularized the term for the moment when machine intelligence surpasses the human kind:

“What are the consequences of this event? When greater-than-human intelligence drives progress, that progress will be much more rapid. In fact, there seems no reason why progress itself would not involve the creation of still more intelligent entities — on a still-shorter time scale. The best analogy that I see is with the evolutionary past: Animals can adapt to problems and make inventions, but often no faster than natural selection can do its work — the world acts as its own simulator in the case of natural selection. We humans have the ability to internalize the world and conduct ‘what if’s’ in our heads; we can solve many problems thousands of times faster than natural selection. Now, by creating the means to execute those simulations at much higher speeds, we are entering a regime as radically different from our human past as we humans are from the lower animals.

From the human point of view this change will be a throwing away of all the previous rules, perhaps in the blink of an eye, an exponential runaway beyond any hope of control. Developments that before were thought might only happen in ‘a million years’ (if ever) will likely happen in the next century.

I think it’s fair to call this event a singularity (‘the Singularity’ for the purposes of this paper). It is a point where our models must be discarded and a new reality rules. As we move closer and closer to this point, it will loom vaster and vaster over human affairs till the notion becomes a commonplace. Yet when it finally happens it may still be a great surprise and a greater unknown.”

Tags: Vernor Vinge

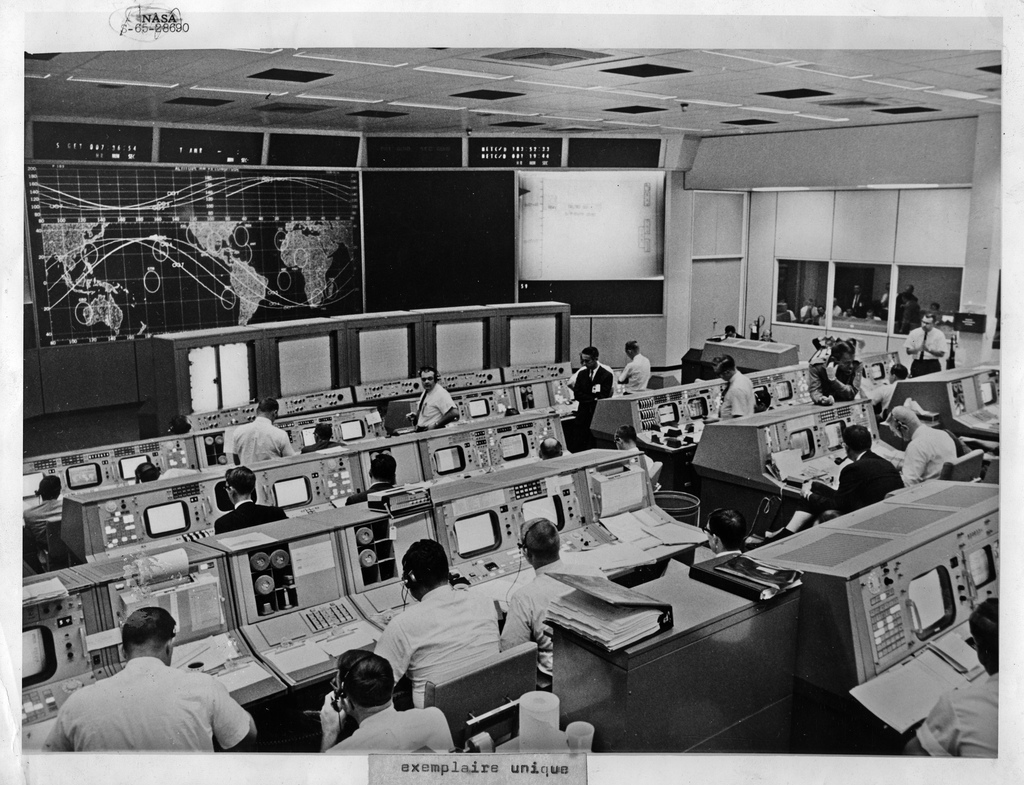

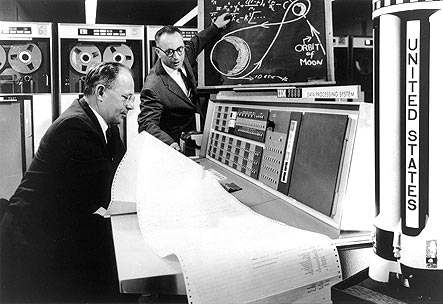

Even more lifelike than John Glenn.

Maybe it’s because I’m from a fringy, blue-collar background in NYC, but I’ve always been very familiar with the informal economy, people earning a few extra dollars selling loosies or trinkets or food, handing out betting cards for bookies and other stuff that may or may not be legal. Such low-level entrepreneurship is becoming more common in the West during these desperate times, with the help of digital tools. A succinct explanation of the informal economy by Anand Giridharadas in the New York Times:

“One of the differences between rich and poor countries is that in the latter, people seldom wait for the government to ‘create jobs.’

When times are hard, they buy packs of cigarettes and sell them as singles; they find houses to clean through cousins of a cousin; they rent out bedrooms to students; they stock up on cellphone credit and peddle sidewalk calls by the minute.

It’s called the informal economy, and in much of the world it is bigger than the formal one. But it has been pushed onto the sidelines in the West, the refuge of criminals and the poor, because of labor laws, taxes, health and safety regulations and the like, which emerged to protect workers and consumers from the market’s vicissitudes and companies’ whims.”

Tags: Anand Giridharadas

The opening of “Look Deep Into the Mind’s Eye,” Carl Zimmer’s 2010 Discover article about a building surveyor’s sudden inability to visualize things:

“One day in 2005, a retired building surveyor in Edinburgh visited his doctor with a strange complaint: His mind’s eye had suddenly gone blind.

The surveyor, referred to as MX by his doctors, was 65 at the time. He had always felt that he possessed an exceptional talent for picturing things in his mind. The skill had come in handy in his job, allowing MX to recall the fine details of the buildings he surveyed. Just before drifting off to sleep, he enjoyed running through recent events as if he were watching a movie. He could picture his family, his friends, and even characters in the books he read.

Then these images all vanished. The change happened shortly after MX went to a hospital to have his blocked coronary arteries treated. As a cardiologist snaked a tube into the arteries and cleared out the obstructions, MX felt a ‘reverberation’ in his head and a tingling in his left arm. He didn’t think to mention it to his doctors at the time. But four days later he realized that when he closed his eyes, all was darkness.”

Tags: Carl Zimmer

Tags: Richard Feynman

You’ve probably seen this appearance by Louis C.K. with Conan (whom he wrote for earlier in his career) about a dozen times because it’s arguably the stand-up’s most famous moment. But I post it here anyway because I think when this interconnected and narcissistic age has calmed down, it will be the truest thing anyone has said about us. A compliment it’s not.

From “Do You Really Want to Live Forever?” Ronald Bailey’s Reason review of Stephen Cave’s new book, Immortality, which sees the defeat of death as a Pyrrhic victory:

“Why not simply repair the damage caused by aging, thus defeating physical death? This is the goal of transhumanists like theoretical biogerontologist Aubrey de Grey who has devised the Strategies for Engineered Negligible Senescence (SENS) program. SENS technologies would include genetic interventions to rejuvenate cells, stem cell transplants to replace aged organs and tissues, and nano-machines to patrol our bodies to prevent infections and kill nascent cancers. Ultimately, Cave cannot argue that these life-extension technologies will not work for individuals but suggests that they would produce problems like overpopulation and environmental collapse that would eventually subvert them. He also cites calculations done by a demographer that assuming aging and disease is defeated by biomedical technology accidents would still do in would-be immortals. The average life expectancy of medical immortals would be 5,775 years.” (Thanks Browser.)

Tags: Aubrey de Grey, Ronald Bailey, Stephen Cave

From a piece about the need for ethical robots, in the Economist:

“Robots are spreading in the civilian world, too, from the flight deck to the operating theatre. Passenger aircraft have long been able to land themselves. Driverless trains are commonplace. Volvo’s new V40 hatchback essentially drives itself in heavy traffic. It can brake when it senses an imminent collision, as can Ford’s B-Max minivan. Fully self-driving vehicles are being tested around the world. Google’s driverless cars have clocked up more than 250,000 miles in America, and Nevada has become the first state to regulate such trials on public roads. In Barcelona a few days ago, Volvo demonstrated a platoon of autonomous cars on a motorway.

As they become smarter and more widespread, autonomous machines are bound to end up making life-or-death decisions in unpredictable situations, thus assuming—or at least appearing to assume—moral agency. Weapons systems currently have human operators ‘in the loop,’ but as they grow more sophisticated, it will be possible to shift to ‘on the loop’ operation, with machines carrying out orders autonomously.

As that happens, they will be presented with ethical dilemmas. Should a drone fire on a house where a target is known to be hiding, which may also be sheltering civilians? Should a driverless car swerve to avoid pedestrians if that means hitting other vehicles or endangering its occupants? Should a robot involved in disaster recovery tell people the truth about what is happening if that risks causing a panic?”

••••••••••

Volvo’s autonomous vehicles in Barcelona:

In a new Slate article, Evgeny Morozov explains why cyber warfare may not be attractive to terrorist groups. Of course, if there were a critical mass of driverless cars or robotic surgeons to tamper with, things might be different. There would definitely be a scary wow factor in that scenario. An excerpt:

“Terrorists may be more keen on anonymity, but the reality is that in the decade since 9/11, no terrorist group has had much success causing serious disruption of the civilian or military infrastructure. For a group like al-Qaida, the costs of getting it right are too high, particularly because it’s not guaranteed that such a cyber-terror campaign would be as spectacular as detonating a bomb in a busy public square.”

Tags: Evgeny Morozov

Tags: Buckminster Fuller

The opening of “Remember This,” Joshua Foer’s excellent 2007 National Geographic piece about people with extreme memories and those of us with more average neural connections governing the data and images we retain:

“There is a 41-year-old woman, an administrative assistant from California known in the medical literature only as ‘AJ,’ who remembers almost every day of her life since age 11. There is an 85-year-old man, a retired lab technician called ‘EP,’ who remembers only his most recent thought. She might have the best memory in the world. He could very well have the worst.

‘My memory flows like a movie—nonstop and uncontrollable,’ says AJ. She remembers that at 12:34 p.m. on Sunday, August 3, 1986, a young man she had a crush on called her on the telephone. She remembers what happened on Murphy Brown on December 12, 1988. And she remembers that on March 28, 1992, she had lunch with her father at the Beverly Hills Hotel. She remembers world events and trips to the grocery store, the weather and her emotions. Virtually every day is there. She’s not easily stumped.

There have been a handful of people over the years with uncommonly good memories. Kim Peek, the 56-year-old savant who inspired the movie Rain Man, is said to have memorized nearly 12,000 books (he reads a page in 8 to 10 seconds). ‘S,’ a Russian journalist studied for three decades by the Russian neuropsychologist Alexander Luria, could remember impossibly long strings of words, numbers, and nonsense syllables years after he’d first heard them. But AJ is unique. Her extraordinary memory is not for facts or figures, but for her own life. Indeed, her inexhaustible memory for autobiographical details is so unprecedented and so poorly understood that James McGaugh, Elizabeth Parker, and Larry Cahill, the neuroscientists at the University of California, Irvine who have been studying her for the past seven years, had to coin a new medical term to describe her condition: hyperthymestic syndrome.

EP is six-foot-two (1.9 meters), with perfectly parted white hair and unusually long ears. He’s personable, friendly, gracious. He laughs a lot. He seems at first like your average genial grandfather. But 15 years ago, the herpes simplex virus chewed its way through his brain, coring it like an apple. By the time the virus had run its course, two walnut-size chunks of brain matter in the medial temporal lobes had disappeared, and with them most of EP’s memory.”

••••••••••

Oliver Sacks discussing his pen pal Alexander Luria, the neuropsychologist who authored The Mind of a Mnemonist:

Tags: Alexander Luria, Joshua Foer, Oliver Sacks

Major League Baseball long generated most of its income through live attendance, so owners were apprehensive about “giving away” games on cable, even as screens shrank and became ubiquitous. But regional, and very lucrative, TV contracts have proliferated as team owners realized that broadcasts weren’t harming their brands but extending them. And cable badly needs baseball, since the sport provides so much live content that viewers aren’t likely to time-shift. From the Sports Economist:

“Local TV rights provides the revenue stream, but doesn’t this just push back the insanity one level? Why would anyone bid these amounts for baseball — America’s dying pastime? A funny thing happened on the way to the funeral — baseball franchises discovered that a substitute was actually a complement. Over the past several years, teams switched to televising most, if not all, of their games via local broadcast/cable/satellite. Teams long resisted such TV saturation, thinking that televised games substitute for fans in the seats. Texas drew almost 3 million fans last season in spite of extensive regional televising of their games. Yes, a few fans, at the margin, will choose to attend fewer games and watch on TV. However, many potential fans will attend very few games regardless. Televising the games becomes a way of extending the stadium capacity to include these households. The TV advertisers along with cable/satellite fees pay for these ‘at-home season ticket holders.’”

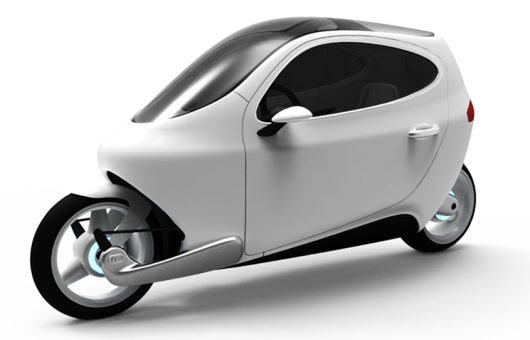

From an L.A. Times piece about the C-1, a self-balancing, enclosed, electric motorcycle from Lit Motors:

“Lit Motors calls it the C-1, but the San Francisco start-up’s untippable motorcycle seems nothing short of magic. It uses gyroscopes to stay balanced in a straight line and in turns in which drivers can, in theory, roll down their windows and drag their knuckles on the ground.

Is it a motorcycle? A car? Neither. It’s an entirely new form of personal transportation, presuming it gets off the ground.

The all-electric vehicle is fully enclosed and uses a steering wheel and floor pedals like a car. But it weighs just 800 pounds and balances on two wheels even when stopped, making it more efficient than hauling around a 2-ton four-wheeler and safer than an accident-prone bike.”

From “How Headphones Changed the World,” Derek Thompson’s Atlantic article about the unusual origins of the tech item, which was invented by Nathaniel Baldwin, a Mormon supporter of the polygamist movement:

“In 1910, the Radio Division of the U.S. Navy received a freak letter from Salt Lake City written in purple ink on blue-and-pink paper. Whoever opened the envelope probably wasn’t expecting to read the next Thomas Edison. But the invention contained within represented the apotheosis of one of Edison’s more famous, and incomplete, discoveries: the creation of sound from electrical signals.

The author of the violet-ink note, an eccentric Utah tinkerer named Nathaniel Baldwin, made an astonishing claim that he had built in his kitchen a new kind of headset that could amplify sound. The military asked for a sound test. They were blown away. Naval radio officers clamored for the ‘comfortable, efficient headset’ on the brink of World War I. And so, the modern headphone was born.”

••••••••••

Headphones on display in a 1970s Continental ad:

Tags: Derek Thompson, Nathaniel Baldwin

The first three graphs of Wil S. Hylton’s excellent New York Times Magazine article about Craig Venter, who wants to make tiny machines that breathe:

“In the menagerie of Craig Venter’s imagination, tiny bugs will save the world. They will be custom bugs, designer bugs — bugs that only Venter can create. He will mix them up in his private laboratory from bits and pieces of DNA, and then he will release them into the air and the water, into smokestacks and oil spills, hospitals and factories and your house.

Each of the bugs will have a mission. Some will be designed to devour things, like pollution. Others will generate food and fuel. There will be bugs to fight global warming, bugs to clean up toxic waste, bugs to manufacture medicine and diagnose disease, and they will all be driven to complete these tasks by the very fibers of their synthetic DNA.

Right now, Venter is thinking of a bug. He is thinking of a bug that could swim in a pond and soak up sunlight and urinate automotive fuel. He is thinking of a bug that could live in a factory and gobble exhaust and fart fresh air. He may not appear to be thinking about these things. He may not appear to be thinking at all. He may appear to be riding his German motorcycle through the California mountains, cutting the inside corners so close that his kneepads skim the pavement. This is how Venter thinks. He also enjoys thinking on the deck of his 95-foot sailboat, halfway across the Pacific Ocean in a gale, and while snorkeling naked in the Sargasso Sea surrounded by Portuguese men-of-war. When Venter was growing up in San Francisco, he would ride his bicycle to the airport and race passenger jets down the runway. As a Navy corpsman in Vietnam, he spent leisurely afternoons tootling up the coast in a dinghy, under a hail of enemy fire.” (Thanks Browser.)

Tags: Craig Venter, Wil S. Hylton

A demonstration at Tel Aviv University of quantum trapping and its potential to revolutionize travel.

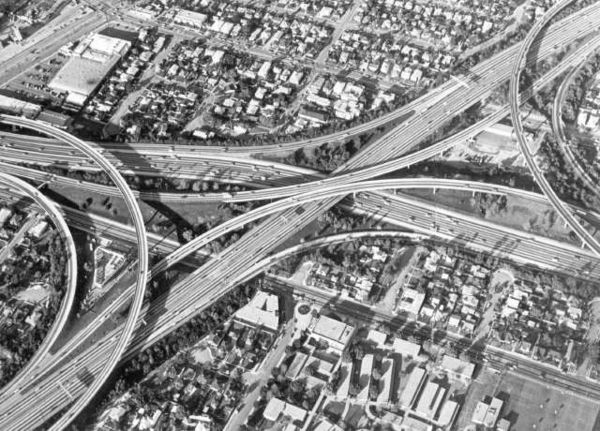

From an essay by Gabrielle Esperdy at Design Observer about the Trollope-ish trips across America taken by the late urban theorist Reyner Banham, who loved Los Angeles:

“Once Banham got a driver’s license, the pull of those miles was irresistible, and he spent plenty of time behind the wheel: up and down the California coast, into the deserts and canyons of the Southwest and Texas, across the rust belt and the Midwest, and over and through the northeast megalopolis. There were countless stops in between and along the way, all fully documented in field notebooks, maps, postcards and 35mm slides. Banham visited architectural monuments and natural landmarks, evincing equal interest in the Seagram Building and the Cima Dome. He was attracted to everyday landscapes and out-of-the-way obscurities, supermarkets and motel chains having nearly the same allure as one-off wilderness resorts. He explored thriving commercial centers and abandoned industrial wastelands (and vice versa), lavishing the same attention on a Ponderosa Steak House as on a General Mills grain elevator.

From the Tennessee Valley to Silicon Valley, no building or landscape was unworthy — or safe — from Banham’s formal analysis, socio-cultural critique or outspoken opinionating. He thought the TVA dams with their ‘overwhelming physical grandeur’ were better in real life than in the iconographic New Deal photographs where he had first encountered them, and while he may have sneered at the ‘Redneck Macholand’ in which they were located, he reserved his true scorn for the ‘eco-radicalist’ supporters of the Endangered Species Act who, in the 1970s, prevented the closing of the Tellico Dam sluices in order to preserve the snail darter minnow. The construction of the dam may have looked more arrogant, but Banham wondered if it really was.

The ‘Fertile Crescent of Electronics’ presented no such moral quandaries when Banham visited the sylvan corporate landscapes of IBM, Hewlett-Packard and other now obsolete tech-companies in 1981. Concerns about the environmental impact of all those microchips were still about a decade away and, given his long-standing technophilia, Banham would probably have minimized their significance with the same greater-good rationale he applied to the TVA. While 1981 was early enough in the digital revolution that Space Invaders was cutting edge, it was late enough that Silicon Valley had already produced a ‘better-than-respectable body of architecture.’ The buildings, in Banham’s view, were as sleek, silver and modern as the gadgets designed in the research labs within, an alignment of high-tech imagery and confident industrial consciousness — exemplified by MBT Associate’s IBM’s Santa Teresa campus in San Jose — that Banham considered a cause for celebration. Only the most ‘crass and unobservant’ among the ‘modern-architecture knockers’ and ‘California-mockers’ could possibly disagree.”

Tags: Gabrielle Esperdy, Reyner Banham

Brief 1977 film about laserists and their craft. Dude.

From “The Night the Lights Went Out in Griffith Park,” an L.A. Times article about the first laser-light show for the masses, in 1973:

“Before the lights go down, the creator of Laserium, Ivan Dryer, is introduced and receives a lengthy standing ovation. The 62-year-old filmmaker with an astronomy and engineering background saw his first ‘laser light show’ in November 1970, when he was invited to film a demonstration by a Caltech scientist who’d been using a laser in her off hours to create artwork. A month later, he approached officials at the Griffith Park Observatory, where he’d once worked as a guide, with the idea of putting on a show there. For three years, the answer was no, but a new administration warmed to the idea, and Laserium was born.

‘When we first started, there were a whole lot of mistakes to be made,’ recalls Dryer, a tall, wizened, Ichabod Crane of a man. ‘We found that if we made mistakes on cue, on the beat, that was OK with the audience.’ There was ‘The John Effect’–if too many toilets were flushed in the bathroom, the water pressure in the water-cooled ion gas laser (which reaches a core temperature of 5,000 degrees) would fail. Dryer also recounts the time his then-partner accidentally touched a high-voltage wire and shocked himself during a show, the audience believing the screaming was part of the entertainment. Similarly, when a fly landed on the lumia lens and began crawling around it, casting a giant sci-fi insect form on the ceiling, the crowd cheered wildly at the clever effect. Rather than swat it away, Dryer says, ‘we wished we could train it and hire it for future shows.’

In the intervening 28 years, laser technology and techniques improved, glitches were removed and new versions came along–Laser Rock, Pink Floyd’s Dark Side of the Moon, Led Zeppelin, Lollapalaser and the final incarnation, Laser Visions, which features ambient/techno/electronica a la Orb, Enya and Vangelis.”

Tags: Ivan Drye