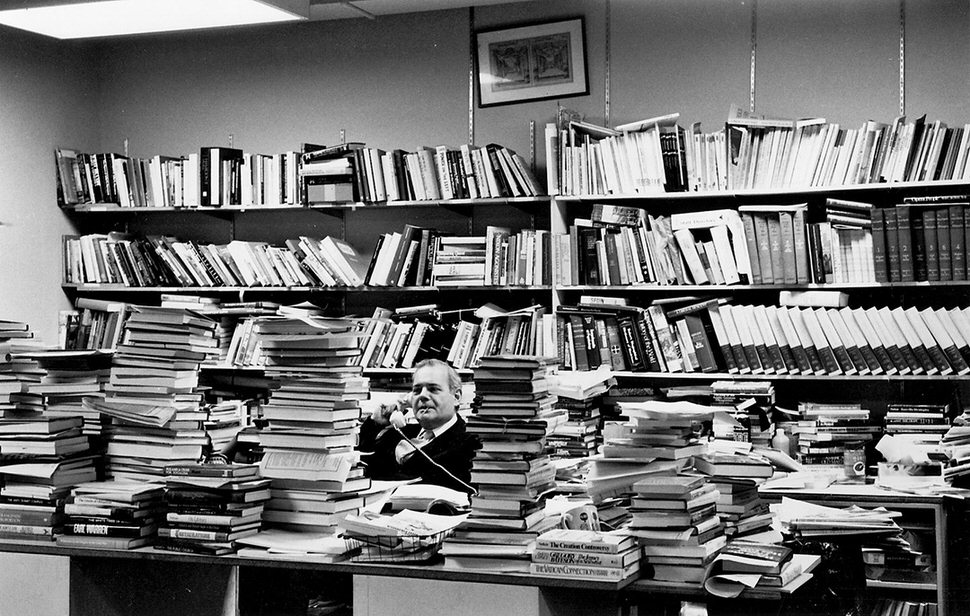

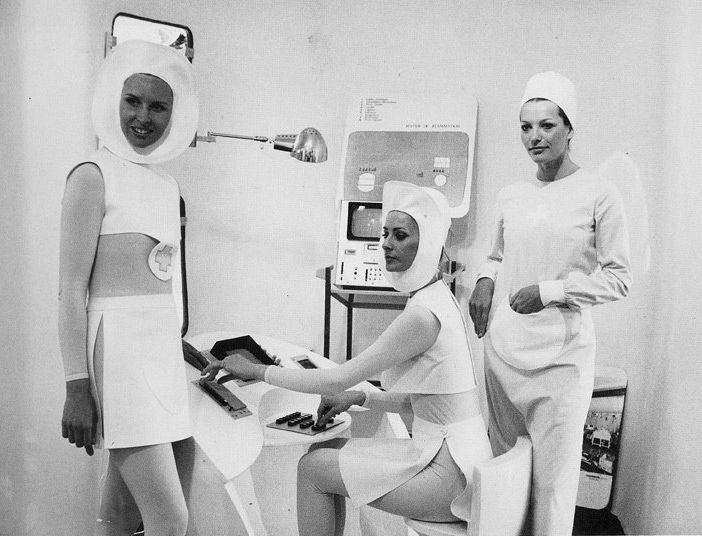

Following up on the post about technological unemployment in the office-support sector, here’s an excerpt from a 1975 Bloomberg piece that wondered about the office of the future. A business revolution threatened to reduce not only paper but people as well (though this article focused more on the former than the latter). A “collection of these electronic terminals linked to each other and to electronic filing cabinets…will change our daily life,” one analyst promised, and it certainly has.

The opening:

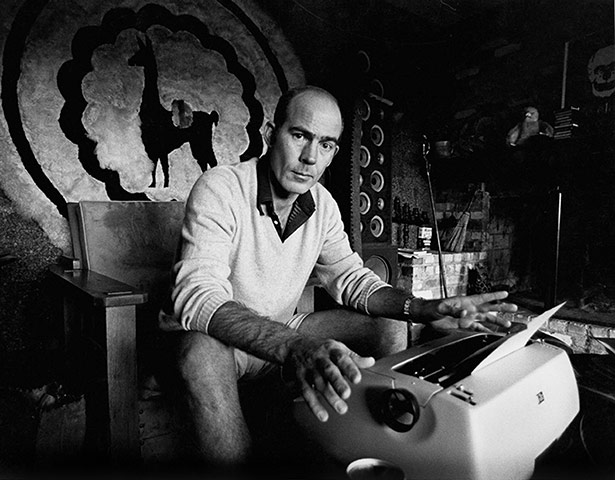

> The office is the last corporate holdout to the automation tide that has swept through the factory and the accounting department. It has changed little since the invention of the typewriter 100 years ago. But in almost a matter of months, office automation has emerged as a full-blown systems approach that will revolutionize how offices work.
>
> At least this is the gospel being preached by office equipment makers and the research community. And because the labor-intensive office desperately needs the help of technology, nearly every company with large offices is trying to determine how this onrushing wave of new hardware and procedures can help to improve its office productivity.
>
> Will the office change all that much? Listen to George E. Pake, who heads Xerox Corp.’s Palo Alto (Calif.) Research Center, a new think tank already having a significant impact on the copier giant’s strategies for going after the office systems market: “There is absolutely no question that there will be a revolution in the office over the next 20 years. What we are doing will change the office like the jet plane revolutionized travel and the way that TV has altered family life.”
>
> Pake says that in 1995 his office will be completely different; there will be a TV-display terminal with keyboard sitting on his desk. “I’ll be able to call up documents from my files on the screen, or by pressing a button,” he says. “I can get my mail or any messages. I don’t know how much hard copy [printed paper] I’ll want in this world.”
>
> **The Paperless Office**
>
> Some believe that the paperless office is not that far off. Vincent E. Giuliano of Arthur D. Little, Inc., figures that the use of paper in business for records and correspondence should be declining by 1980, “and by 1990, most record-handling will be electronic.”
>
> But there seem to be just as many industry experts who feel that the office of the future is not around the corner. “It will be a long time—it always takes longer than we expect to change the way people customarily do their business,” says Evelyn Berezin, president of Redactron Corp., which has the second-largest installed base (after International Business Machines Corp.) of text-editing typewriters. “The EDP [data-processing] industry in the 1950s thought that the whole world would have made the transition to computers by 1960. And it hasn’t happened yet.”