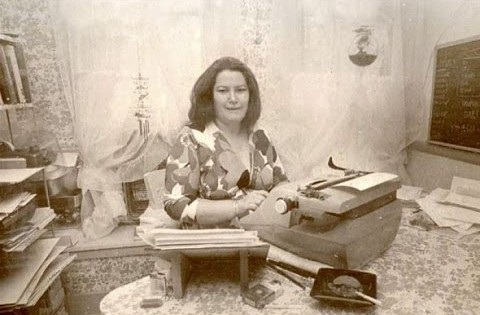

William Butler Yeats famously pined for his muse, Maud Gonne, who rejected him. When her daughter, Iseult, turned 22, the now-midlife poet tried for her hand and was likewise turned away. While no one in the family would fuck Yeats, Maud did apparently have sex in the crypt of her son, who had died at two, believing some mystical hooey which said the soul of the deceased boy would transmigrate into the new baby if she conceived next to his coffin. Well, okay. From Hugh Schofield at the BBC:

Actress, activist, feminist, mystic, Maud Gonne was also the muse and inspiration for the poet W B Yeats, who immortalised her in some of his most famous verses.

After the Free State was established in 1922, Maud Gonne remained a vocal figure in Irish politics and civil rights. Born in 1866, she died in Dublin in 1953.

But for many years in her youth and early adulthood, Maud Gonne lived in France.

Of this part of her life, much less is known. There is one long-secret and bizarre episode, however, that has now been established as almost certainly true.

This was the attempt in late 1893 to reincarnate her two-year-old son, through an act of sexual intercourse next to the dead infant’s coffin. …

Having inherited a large sum of money on the death of her father, she paid for a memorial chapel – the biggest in the cemetery. In a crypt beneath, the child’s coffin was laid.

In late 1893 Gonne re-contacted Lucien Millevoye, from whom she had separated after Georges’ death.

She asked him to meet her in Samois-sur-Seine. First the couple entered the small chapel, then opened the metal doors leading down to the crypt.

They descended the small metal ladder – just five or six steps. And then – next to the dead baby’s coffin – they had sexual intercourse.