“It is not yet possible to create a computerized voice that is indistinguishable from a human one for anything longer than short phrases,” writes John Markoff in his latest probing NYT article about technology, this one about “conversational agents.”

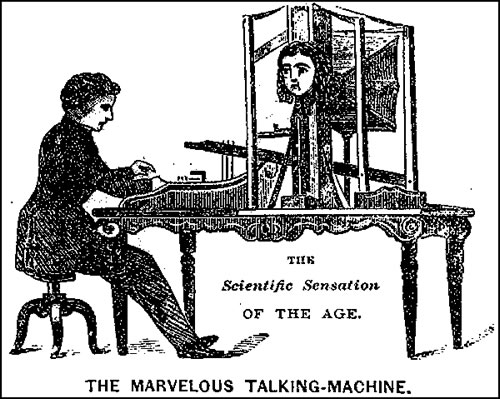

The dream of giving voices like ours to contraptions was realized with varying degrees of success by 19th-century inventors like Joseph Faber and Thomas Edison, who awed their audiences, but the modern attempt is to replace marvel with mundanity, a post-Siri scenario in which the interaction no longer seems novel.

Machines that can listen are the ones that cause the most paranoia, but talking ones that could pass for human would pose a challenge as well. As Markoff notes in the above quote, a truly conversational computer isn’t currently achievable, but it will be eventually. At first we might give such devices a verbal tell to inform people of their non-carbon chat partner, but won’t we ultimately make the conversation seamless?

In his piece, Markoff surveys the many people trying to make that seamlessness a reality. The opening:

When computers speak, how human should they sound?

This was a question that a team of six IBM linguists, engineers and marketers faced in 2009, when they began designing a function that turned text into speech for Watson, the company’s “Jeopardy!”-playing artificial intelligence program.

Eighteen months later, a carefully crafted voice — sounding not quite human but also not quite like HAL 9000 from the movie 2001: A Space Odyssey — expressed Watson’s synthetic character in a highly publicized match in which the program defeated two of the best human Jeopardy! players.

The challenge of creating a computer “personality” is now one that a growing number of software designers are grappling with as computers become portable and users with busy hands and eyes increasingly use voice interaction.

Machines are listening, understanding and speaking, and not just computers and smartphones. Voices have been added to a wide range of everyday objects like cars and toys, as well as household information “appliances” like the home-companion robots Pepper and Jibo, and Alexa, the voice of the Amazon Echo speaker device.

A new design science is emerging in the pursuit of building what are called “conversational agents,” software programs that understand natural language and speech and can respond to human voice commands.

However, the creation of such systems, led by researchers in a field known as human-computer interaction design, is still as much an art as it is a science.

It is not yet possible to create a computerized voice that is indistinguishable from a human one for anything longer than short phrases that might be used for weather forecasts or communicating driving directions.

Most software designers acknowledge that they are still faced with crossing the “uncanny valley,” in which voices that are almost human-sounding are actually disturbing or jarring.•
