What’s so disquieting about machines thinking like us (out-thinking us, even!) is probably that it means we’re not so special. I sense, though, that such a feeling of insecurity occurs only at first blush. It was supposedly going to be so terrible if computers bested us at chess, and then the world went on, inferior humans still competing in the game and working in tandem with software to create hybrid super-teams. We adapt after the disruption. Our job is to continually redefine why we’re here. From Tania Lombrozo at NPR:

Part of what’s fascinating about the idea of thinking machines, after all, is that they seem to approach and encroach on a uniquely human niche, Homo sapiens — the wise.

Consider, for contrast, encountering “thinking” aliens, some alternative life form that rivals or exceeds our own intelligence. The experience would be strange, to be sure, but there may be something uniquely uncanny about thinking machines. While they can (or will some day) mirror us in capabilities, they are unlikely to do so in composition. My hypothetical aliens, at least, would have biological origins of some kind, whereas today’s computers do so only in the sense that they are human artifacts and, therefore, have an origin that follows from our own.

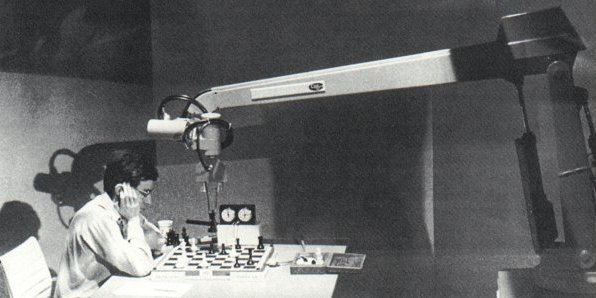

When it comes to human-like robots and other artifacts, some have described an “uncanny valley”: a level of similarity to natural beings that may be too close for comfort, compelling yet off. We might be slightly revolted by a mechanical appendage, for instance, or made uneasy by a realistically human robot face.

Examples of the “uncanny valley” phenomenon are overwhelmingly visual and behavioral, but is there also an uncanny valley when it comes to thinking? In other words, is there something uncanny or uncomfortable about intelligence that’s almost like ours — but not quite? For instance, is there something uncanny about a chat bot that effectively mimics human conversation, but through a process of keyword matching that bears little resemblance to human learning and language production?

My sense is that the valley of “uncanny thinking” is real, but elicits a more existential than visceral response. And if that’s so, perhaps it’s because we’re threatened by the idea that human thinking isn’t unique, and that maybe human thinking isn’t so special.•
