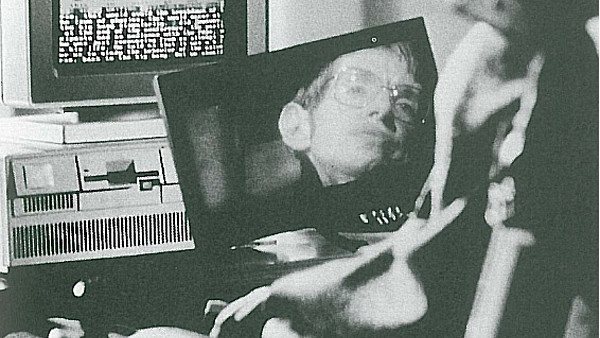

If we play our cards right, humans might be able to survive in this universe for 100 billion years, but we’re not working the deck very well in some key ways. Human-made climate change is a gigantic near-term danger. Some see AI and technology as another existential threat, which they certainly are in the long run, though the rub is we’ll need advanced technologies of all kinds to last into the deep future. A Financial Times piece by Sally Davies reports on Stephen Hawking’s warnings about technological catastrophe, something he seems more alarmed by as time passes:

“The astrophysicist Stephen Hawking has warned that artificial intelligence ‘could outsmart us all’ and is calling for humans to establish colonies on other planets to avoid ultimately a ‘near-certainty’ of technological catastrophe.

His dire predictions join recent warnings by several Silicon Valley tycoons about artificial intelligence even as many have piled more money into it.

Prof Hawking, who has motor neurone disease and uses a system designed by Intel to speak, said artificial intelligence could become ‘a real danger in the not-too-distant future’ if it became capable of designing improvements to itself.

Genetic engineering will allow us to increase the complexity of our DNA and ‘improve the human race,’ he told the Financial Times. But he added it would be a slow process and would take about 18 years before human beings saw any of the benefits.

‘By contrast, according to Moore’s Law, computers double their speed and memory capacity every 18 months. The risk is that computers develop intelligence and take over. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded,’ he said.”
