In his 1970 Apollo 11 account, Of a Fire on the Moon, Norman Mailer realized that his rocket wasn’t the biggest after all, that the mission was a passing of the torch, that technology, an expression of the human mind, had diminished its creators. “Space travel proposed a future world of brains attached to wires,” Mailer wrote, his ego having suffered a TKO. And just as the Space Race ended, the greater race began, the one between carbon and silicon, and it’s really just a matter of time before the pace grows too brisk for humans.

Supercomputers will ultimately be a threat to us, but we’re certainly doomed without them, so we have to navigate the future as best we can, even if it’s one beyond our control. Gary Marcus addresses this and other issues in his latest New Yorker blog piece, “Why We Should Think About the Threat of Artificial Intelligence.” An excerpt:

“It’s likely that machines will be smarter than us before the end of the century—not just at chess or trivia questions but at just about everything, from mathematics and engineering to science and medicine. There might be a few jobs left for entertainers, writers, and other creative types, but computers will eventually be able to program themselves, absorb vast quantities of new information, and reason in ways that we carbon-based units can only dimly imagine. And they will be able to do it every second of every day, without sleep or coffee breaks.

For some people, that future is a wonderful thing. [Ray] Kurzweil has written about a rapturous singularity in which we merge with machines and upload our souls for immortality; Peter Diamandis has argued that advances in A.I. will be one key to ushering in a new era of ‘abundance,’ with enough food, water, and consumer gadgets for all. Skeptics like Eric Brynjolfsson and I have worried about the consequences of A.I. and robotics for employment. But even if you put aside the sort of worries about what super-advanced A.I. might do to the labor market, there’s another concern, too: that powerful A.I. might threaten us more directly, by battling us for resources.

Most people see that sort of fear as silly science-fiction drivel—the stuff of The Terminator and The Matrix. To the extent that we plan for our medium-term future, we worry about asteroids, the decline of fossil fuels, and global warming, not robots. But a dark new book by James Barrat, Our Final Invention: Artificial Intelligence and the End of the Human Era, lays out a strong case for why we should be at least a little worried.

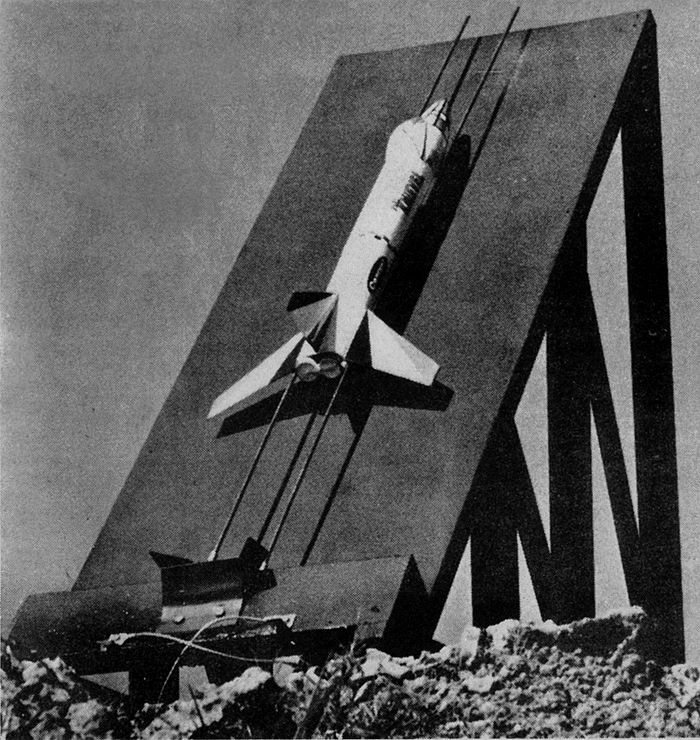

Barrat’s core argument, which he borrows from the A.I. researcher Steve Omohundro, is that the drive for self-preservation and resource acquisition may be inherent in all goal-driven systems of a certain degree of intelligence. In Omohundro’s words, ‘if it is smart enough, a robot that is designed to play chess might also want to build a spaceship,’ in order to obtain more resources for whatever goals it might have.”
