In his Wired article, Patrick Lin lays out the newest driverless-car thought experiment, the “school-bus problem.” Like everyone else who cites it, though, he doesn’t mention that a head-on collision with a bus would likely kill the car’s occupant anyway. The example could use a more logical framing, but I get the point. An excerpt:

“Ethical dilemmas with robot cars aren’t just theoretical, and many new applied problems could arise: emergencies, abuse, theft, equipment failure, manual overrides, and many more that represent the spectrum of scenarios drivers currently face every day.

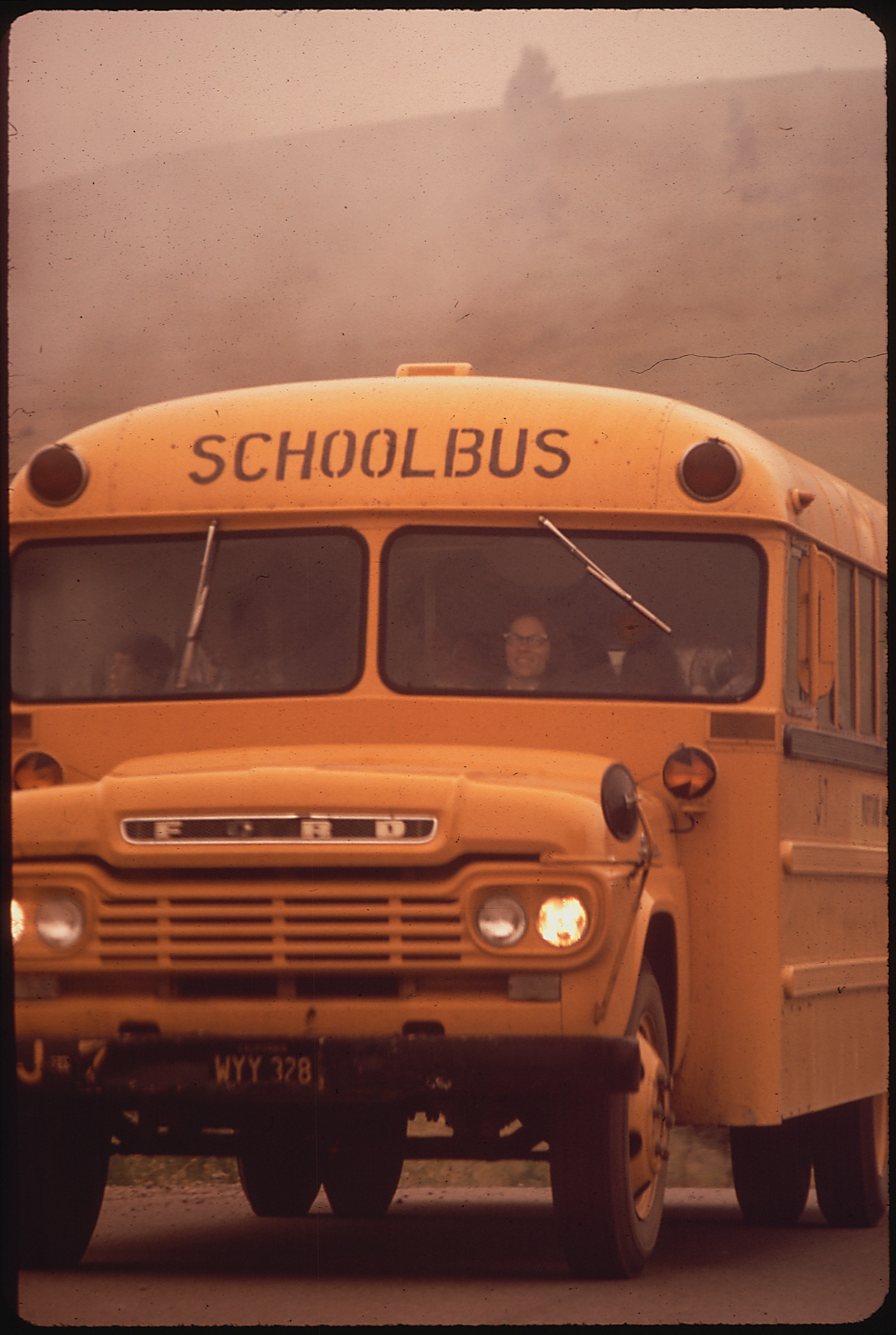

One of the most popular examples is the school-bus variant of the classic trolley problem in philosophy: On a narrow road, your robotic car detects an imminent head-on crash with a non-robotic vehicle: a school bus full of kids, or perhaps a carload of teenagers bent on playing ‘chicken’ with you, knowing that your car is programmed to avoid crashes. Your car, naturally, swerves to avoid the crash, sending it into a ditch or a tree and killing you in the process.

At least with the bus, this is probably the right thing to do: to sacrifice yourself to save 30 or so schoolchildren. The automated car was stuck in a no-win situation and chose the lesser evil; it couldn’t plot a better solution than a human could.”

Tags: Patrick Lin