Was looking at the wonderful The Browser earlier today, and the quote of the day was from William Carlos Williams: “That which is possible is inevitable.” So true, especially when that sentiment is applied to technology. That doesn’t mean all hope is lost and we should just idly allow the creeping — and sometimes leaping — advances of tech to roll over us, but it does speak to the competition among corporations and states that often moves forward agendas for reasons that have nothing to do with common sense or public good.

It’s doubtful we’ll reach a global consensus on inviolable rules governing genetic modification of life forms or autonomous weapons systems. Of the two, there’s more hope for the latter than the former, considering the costs involved, though neither seems particularly promising. Soon enough, it won’t take a great deal of resources to rework the genome, with terrorist organizations as well as educational institutions in the game. Eventually, even startups in garages and “lone gunmen” will be able to create and destroy in this manner. This field will, in time, be decentralized.

Killer robots, conversely, aren’t going to be fast, cheap and out of control for the foreseeable future, though that doesn’t mean they won’t be developed. In fact, it’s plausible they will, even if the barrier to entry is much higher. There are currently reasons for America, China, Russia and other players to shy away from these weapons that guide themselves, but all it will take is for one major competitor to blink for everyone to rush into the future. And all sides have to keep gradually moving toward such capacity in the meantime in order to respond rapidly should a competing nation jump across the divide. Ultimately, everyone will probably blink.

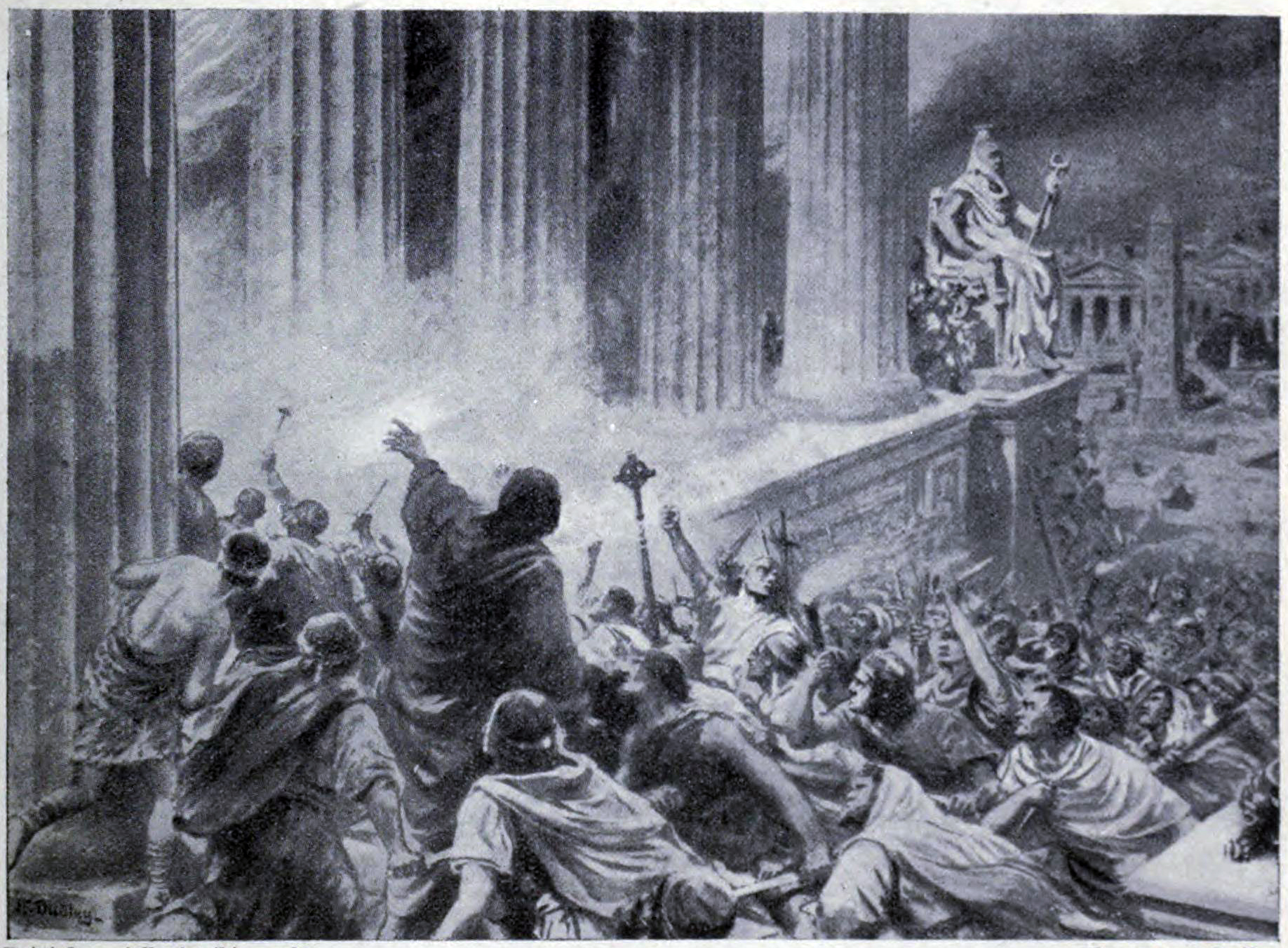

“Once this Pandora’s box is opened, it will be hard to close,” advised an open letter from leading AI and robotics experts to the UN, encouraging the intergovernmental body to urgently address the matter of autonomous weapons. That’s certainly accurate, though the box opening seems more likely than a total ban succeeding.

· · ·

From “Sorry, Banning ‘Killer Robots’ Just Isn’t Practical,” a smart Wired piece by Tom Simonite, which speaks to how the nebulous definition of “autonomous weapons” will aid in their development:

LATE SUNDAY, 116 entrepreneurs, including Elon Musk, released a letter to the United Nations warning of the dangerous “Pandora’s Box” presented by weapons that make their own decisions about when to kill. Publications including The Guardian and The Washington Post ran headlines saying Musk and his cosigners had called for a “ban” on “killer robots.”

Those headlines were misleading. The letter doesn’t explicitly call for a ban, although one of the organizers has suggested it does. Rather, it offers technical advice to a UN committee on autonomous weapons formed in December. The group’s warning that autonomous machines “can be weapons of terror” makes sense. But trying to ban them outright is probably a waste of time.

That’s not because it’s impossible to ban weapons technologies. Some 192 nations have signed the Chemical Weapons Convention that bans chemical weapons, for example. An international agreement blocking use of laser weapons intended to cause permanent blindness is holding up nicely.

Weapons systems that make their own decisions are a very different, and much broader, category. The line between weapons controlled by humans and those that fire autonomously is blurry, and many nations—including the US—have begun the process of crossing it. Moreover, technologies such as robotic aircraft and ground vehicles have proved so useful that armed forces may find giving them more independence—including to kill—irresistible.•