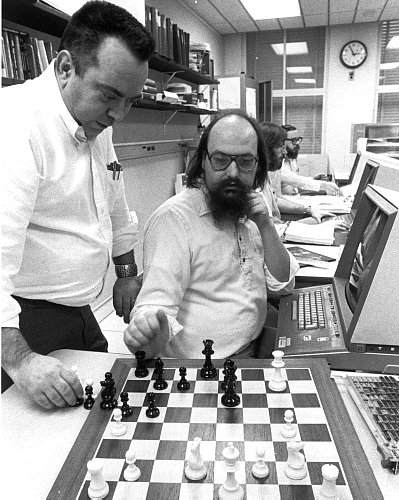

Humans are so terrible at hiring other humans for jobs that it seems plausible software couldn’t do much worse. I think that will certainly be true eventually, if it isn’t already, though algorithms likely won’t be much better at identifying non-traditional candidates with deeply embedded talents. Perhaps a human-machine hybrid à la freestyle chess would work best for the foreseeable future?

In arguing that journalists aren’t being rigorous enough when reporting on HR software systems, Andrew Gelman and Kaiser Fung of the Daily Beast point out that data doesn’t necessarily mitigate bias. An excerpt:

Software is said to be “free of human biases.” This is a false statement. Every statistical model is a composite of data and assumptions; and both data and assumptions carry biases.

The fact that data itself is biased may be shocking to some. Occasionally, the bias is so potent that it could invalidate entire projects. Consider those startups that are building models to predict who should be hired. The data to build such machines typically come from recruiting databases, including the characteristics of past applicants, and indicators of which applicants were successful. But this historical database is tainted by past hiring practices, which reflected a lack of diversity. If these employers never had diverse applicants, or never made many minority hires, there is scant data available to create a predictive model that can increase diversity! Ironically, to accomplish this goal, the scientists should code human bias into the software.•