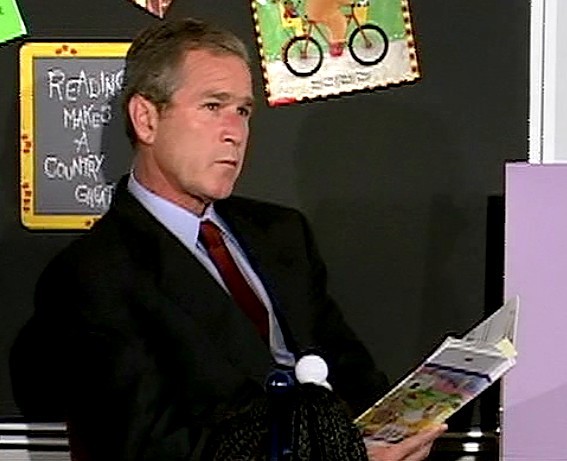

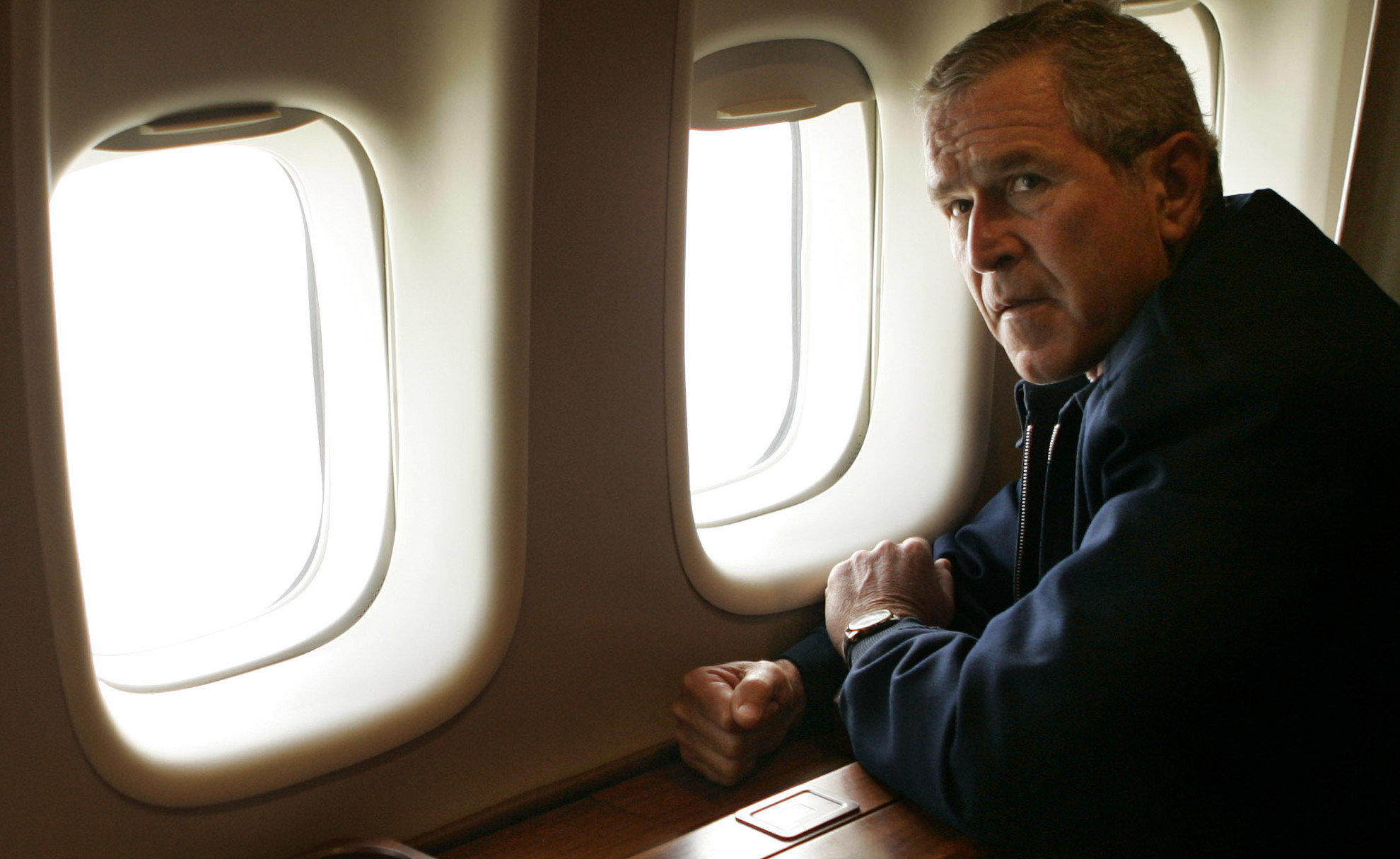

Ah, to be a fly on the wall in the White House in the aftermath of 9/11, once President Bush finally set down his copy of The Pet Goat and returned to the business at hand. If Al-Qaeda's destruction of the World Trade Center was merely Step 1 of a plan to damage America, the scheme was ultimately realized on a grand scale: for the better part of the decade, our decisions in response to the attack did more harm to us than the attack itself. Of course, in retrospect, there were potential reactions with even more far-reaching implications that went unrealized.

In a Spiegel Q&A, René Pfister and Gordon Repinski ask longtime German diplomat Michael Steiner about an alternative history that might have unfolded in the wake of September 11. An excerpt:

Spiegel:

The attacks in the United States on Sept. 11, 2001 came during your stint as Chancellor Gerhard Schröder’s foreign policy advisor. Do you remember that day?

Michael Steiner:

Of course, just like everybody, probably. Schröder was actually supposed to give a speech that day at the German Council on Foreign Relations in Berlin. My people had prepared a nice text for him, but when it was time for him to head out, he, like all of us, couldn't tear himself away from the TV images of the burning Twin Towers. Schröder said: "Michael, you go there and explain to the people that I can't come today."

Spiegel:

What was it like in the days following the attacks?

Michael Steiner:

Condoleezza Rice was George W. Bush's national security advisor at the time. I actually had quite a good relationship with her. But after Sept. 11, the entire administration really dug in. We no longer had access to Rice, much less to the president. That wasn't just our experience; the French and British had the same one. Of course, that made us enormously worried.

Spiegel:

Why?

Michael Steiner:

Because we thought that the Americans would overreact in response to the initial shock. For them, it was a shocking experience to be attacked on their own soil.

Spiegel:

What do you mean, overreact? Were you afraid that Bush would attack Afghanistan with nuclear weapons?

Michael Steiner:

The Americans said at the time that all options were on the table. When I visited Condoleezza Rice in the White House a few days later, I realized that it was more than just a figure of speech.

Spiegel:

The Americans had developed concrete plans for the use of nuclear weapons in Afghanistan?

Michael Steiner:

They really had thought through all scenarios. The papers had been written.