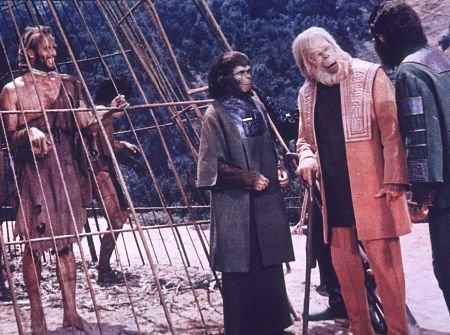

There’s a decent chance that we become the “monsters” we fear.

Superintelligent machines that become our conquerors are often said to be on the horizon, but I think they’re farther off than that. When we do get close enough to see them, they may resemble us. They will be another version of ourselves, a new “human” resulting from a souped-up evolution of our own design. We will be the end of us. Not soon, but someday.

In Stephen Hsu’s excellent Nautilus essay about a co-evolution of carbon and silicon, he envisions a “future with a diversity of both human and machine intelligences.” An excerpt:

By 2050, there will be another rapidly evolving and advancing intelligence besides that of machines: our own. The cost to sequence a human genome has fallen below $1,000, and powerful methods have been developed to unravel the genetic architecture of complex traits such as human cognitive ability. Technologies already exist which allow genomic selection of embryos during in vitro fertilization—an embryo’s DNA can be sequenced from a single extracted cell. Recent advances such as CRISPR allow highly targeted editing of genomes, and will eventually find their uses in human reproduction.

The potential for improved human intelligence is enormous. Cognitive ability is influenced by thousands of genetic loci, each of small effect. If all were simultaneously improved, it would be possible to achieve, very roughly, about 100 standard deviations of improvement, corresponding to an IQ of over 1,000. We can’t imagine what capabilities this level of intelligence represents, but we can be sure it is far beyond our own. Cognitive engineering, via direct edits to embryonic human DNA, will eventually produce individuals who are well beyond all historical figures in cognitive ability. By 2050, this process will likely have begun.

These two threads—smarter people and smarter machines—will inevitably intersect. Just as machines will be much smarter in 2050, we can expect that the humans who design, build, and program them will also be smarter.