The murder rate has declined pretty much all over America, in cities enamored with the Broken Windows Theory and in those that are not, and Los Angeles is no exception, having seen a remarkable drop since the bad old days of the early 1990s. One cloud in the silver lining of fewer murders is that those committed in L.A. have a very low arrest rate. From Hillel Aron in the LA Weekly:

The number of murders in Los Angeles County are down – way down. In 1993, the year after the Rodney King riots, there were 1,944 homicides. In 2014, there were 551. That’s nearly a 75 percent drop in less than 20 years, an astonishing reversal of fortune that occurred all over the country, though it was especially prominent in L.A., known as the gang capital of the world.

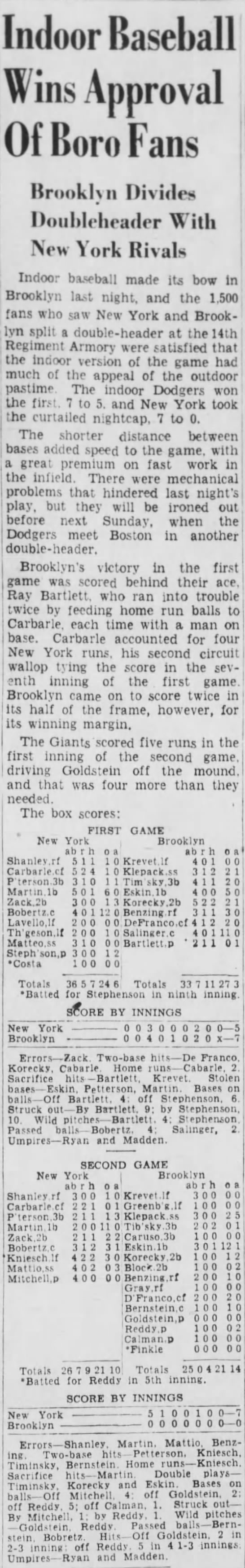

But there’s a dark cloud to that silver lining, as it turns out. The Los Angeles News Group published a disturbing package on Sunday, “Getting Away With Murder,” the product of an 18-month study in which its Los Angeles Daily News and other papers found that between 2000 and 2010, 46 percent of all homicides went unsolved.

That’s a lot of murderers who got away clean. Over the same period, the national average was 37 percent.

Perhaps even more depressing, 51 percent of murders where the victim was black went unsolved. When the victim was white, only 30 percent went unsolved. Over half of all unsolved homicide victims were Latino.

And actually, the real story might be even worse than the numbers suggest. The Daily News also found that LAPD listed some homicides as “cleared” (i.e., solved) even though no one had been convicted:

596 homicide cases from 2000 through 2010 that the LAPD has classified as “cleared other” — cop speak for solving a crime without arresting and filing charges against a suspect….

The LAPD cleared some of these cases because the D.A. declined to prosecute, but when asked for the reason each case was cleared, police officials did not respond.

In other words, the police think they know who the killer is, in many cases, but knowing is not the same as being able to convict before a jury.•