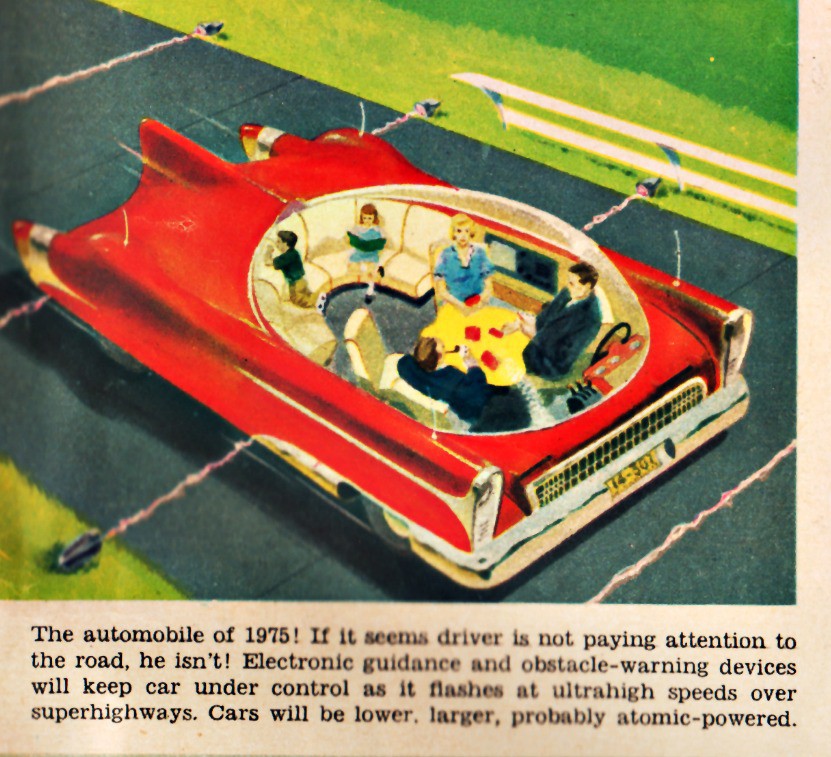

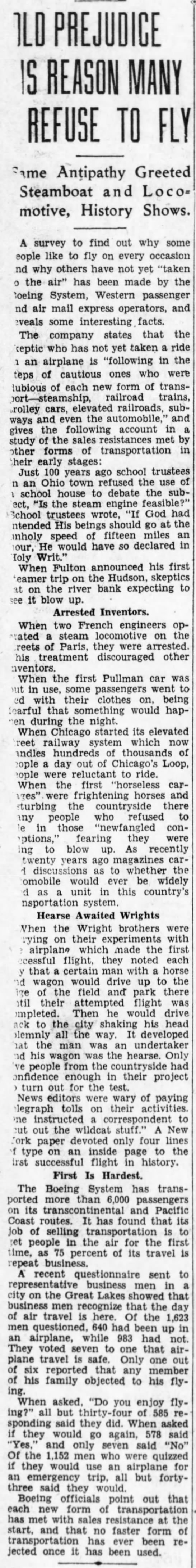

In an Atlantic piece, Derek Thompson notes that while CDs are under siege, digital music is itself being disrupted, with abundance making profits scarce. Music is desired, but the record store, in any form, is not. The opening:

CDs are dead.

That doesn’t seem like such a controversial statement. Maybe it should be. The music business sold 141 million CDs in the U.S. last year. That’s more than the combined number of tickets sold to the most popular movies in 2014 (Guardians) and 2013 (Iron Man 3). So “dead,” in this familiar construction, isn’t the same as zero. It’s more like a commonly accepted shortcut for a formerly popular thing that is now withering at a commercially meaningful rate.

And if CDs are truly dead, then digital music sales are lying in the adjacent grave. Both categories are down by double digits over the last year, with iTunes sales diving at least 13 percent.

The recorded music industry is being eaten, not by one simple digital revolution, but rather by revolutions inside of revolutions, mouths inside of mouths, Alien-style. Digitization and illegal downloads kicked it all off. MP3 players and iTunes liquefied the album. That was enough to send recorded music’s profits cascading. But today the disruption is being disrupted: Digital track sales are falling at nearly the same rate as CD sales, as music fans are turning to streaming on iTunes, SoundCloud, Spotify, Pandora, iHeartRadio, and music blogs. Now that music is superabundant, the business (beyond selling subscriptions to music sites) thrives only where scarcity can be manufactured: in concert halls, where there are only so many seats, or in advertising, where one song or band can anchor a branding campaign.

_____________________________

“In your home or in your car, protect your valuable tapes.”