The future usually arrives gradually, even frustratingly slowly, often wearing the clothes of the past, but what if it got here today or soon thereafter?

The benefits of profound technologies rushing headlong at us would be amazing, and amazingly challenging. Gill Pratt, who oversaw the DARPA Robotics Challenge, wonders in a new Journal of Economic Perspectives essay whether robotics is about to have a wild growth spurt, a synthetic analog to the biological eruption of the Cambrian Period. He thinks that once the "generalizable knowledge representation problem" is addressed (no easy feat), the field will speed forward. The opening:

About half a billion years ago, life on earth experienced a short period of very rapid diversification called the "Cambrian Explosion." Many theories have been proposed for the cause of the Cambrian Explosion, with one of the most provocative being the evolution of vision, which allowed animals to dramatically increase their ability to hunt and find mates (for discussion, see Parker 2003). Today, technological developments on several fronts are fomenting a similar explosion in the diversification and applicability of robotics. Many of the base hardware technologies on which robots depend—particularly computing, data storage, and communications—have been improving at exponential growth rates. Two newly blossoming technologies—"Cloud Robotics" and "Deep Learning"—could leverage these base technologies in a virtuous cycle of explosive growth. In Cloud Robotics—a term coined by James Kuffner (2010)—every robot learns from the experiences of all robots, which leads to rapid growth of robot competence, particularly as the number of robots grows. Deep Learning algorithms are a method for robots to learn and generalize their associations based on very large (and often cloud-based) "training sets" that typically include millions of examples. Interestingly, Li (2014) noted that one of the robotic capabilities recently enabled by these combined technologies is vision—the same capability that may have played a leading role in the Cambrian Explosion.

From "Is a Cambrian Explosion Coming for Robotics?"

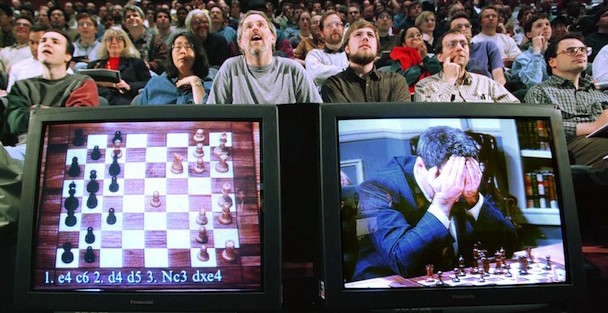

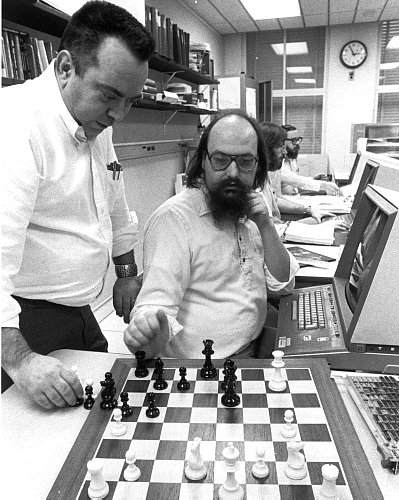

How soon might a Cambrian Explosion of robotics occur? It is hard to tell. Some say we should consider the history of computer chess, where brute force search and heuristic algorithms can now beat the best human player yet no chess-playing program inherently knows how to handle even a simple adjacent problem, like how to win at a straightforward game like tic-tac-toe (Brooks 2015). In this view, specialized robots will improve at performing well-defined tasks, but in the real world, there are far more problems yet to be solved than ways presently known to solve them.

But unlike computer chess programs, where the rules of chess are built in, today's Deep Learning algorithms use general learning techniques with little domain-specific structure. They have been applied to a range of perception problems, like speech recognition and now vision. It is reasonable to assume that robots will in the not-too-distant future be able to perform any associative memory problem at human levels, even those with high-dimensional inputs, with the use of Deep Learning algorithms. Furthermore, unlike computer chess, where improvements have occurred at a gradual and expected rate, the very fast improvement of Deep Learning has been surprising, even to experts in the field. The recent availability of large amounts of training data and computing resources on the cloud has made this possible; the algorithms being used have existed for some time and the learning process has actually become simpler as performance has improved.