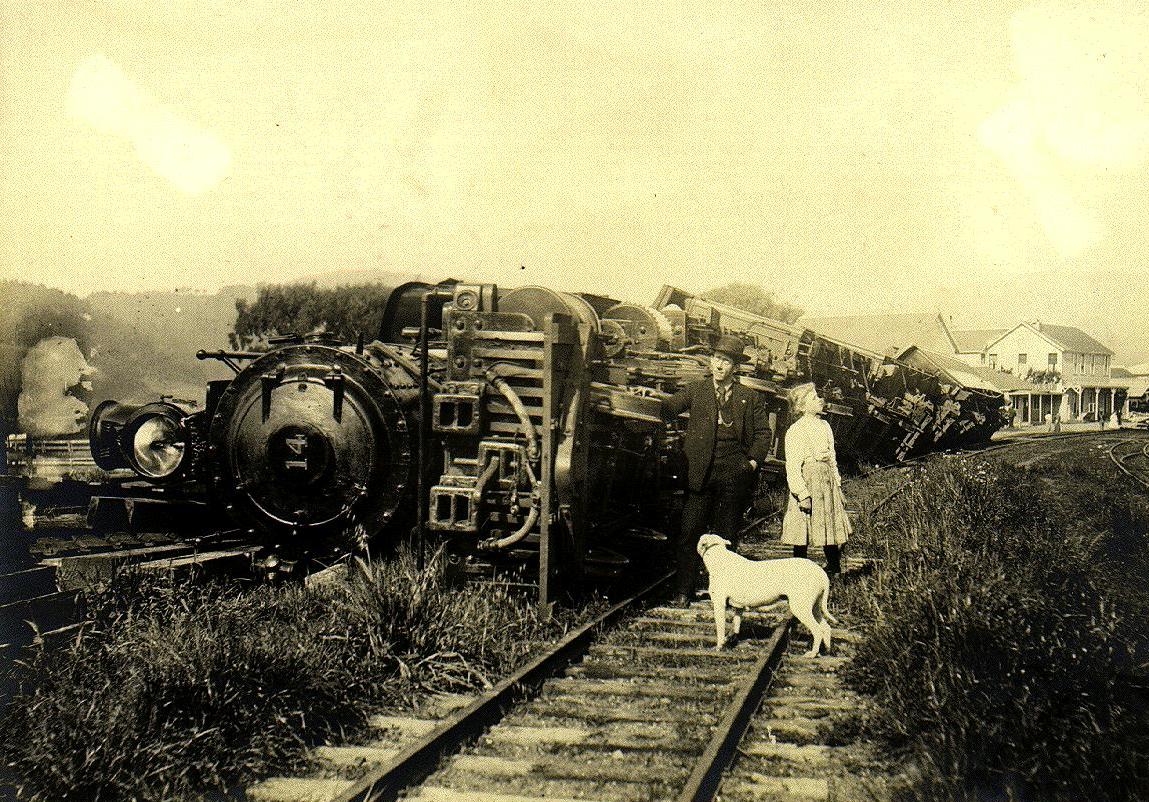

In 2016, GM promises to begin selling the first affordable EV with a 200-mile range. That might be merely a short-term victory for Big Auto, though history seems to suggest otherwise. 3D printers may ultimately disrupt the business of car manufacturing, sharply lowering the barrier to entry and allowing for extreme customization of cars, but that very likely won't be the case. Had I been around in the early days of Homebrew, I probably would have felt the same about personal computers, or at the very least about software, but I would have been wrong. The same may hold true for the auto sector.

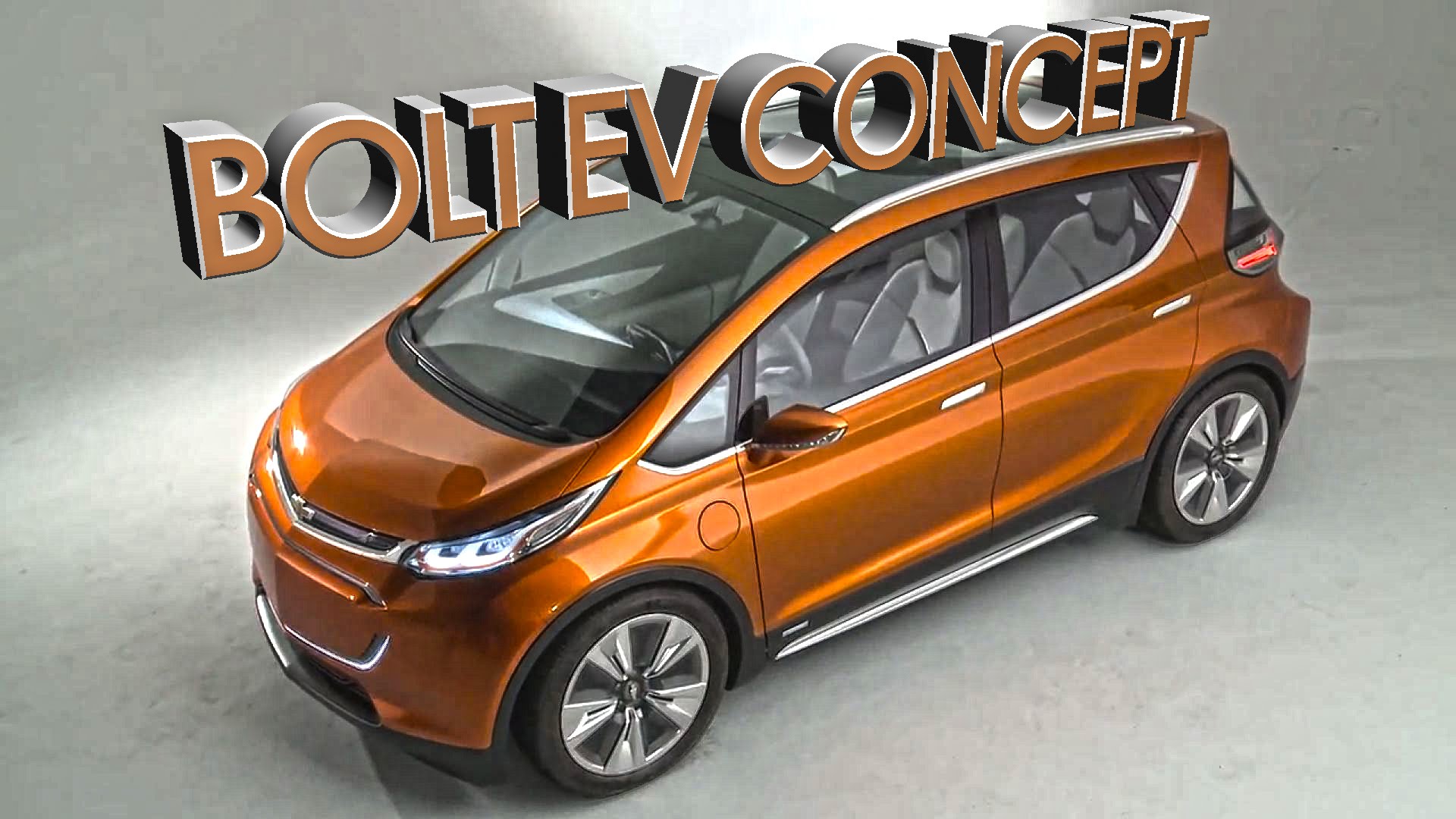

In a Wired cover story about GM seemingly outfoxing Tesla in the EV market so far (an unlikely twist, to be sure), Alex Davies writes about the urgency behind the Chevy Bolt’s creation. An excerpt:

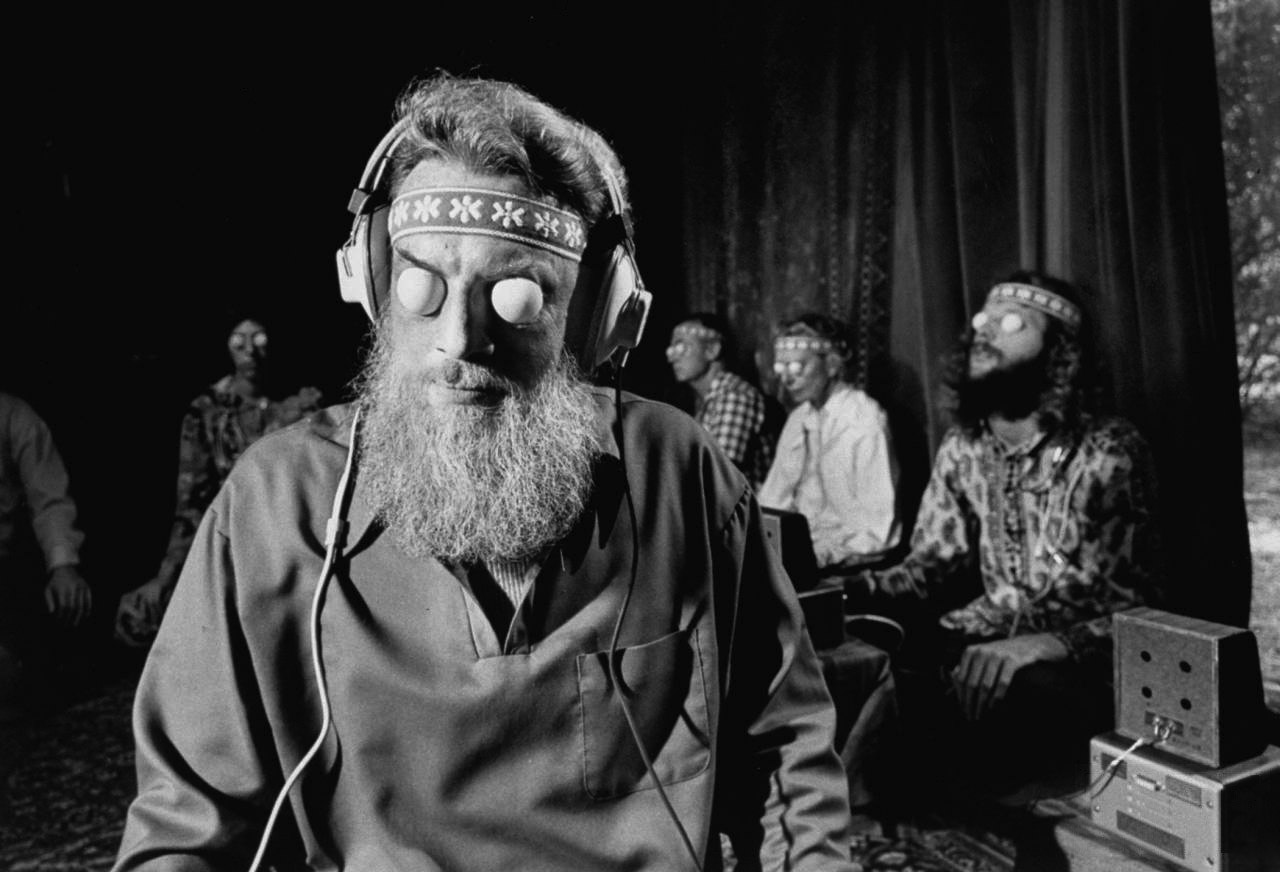

> These days it’s a refrain among GM executives that in the next five to 10 years, the auto industry will change as much as it has in the past 50. As batteries get better and cheaper, the propagation of electric cars will reinforce the need to build out charging infrastructure and develop clean ways to generate electricity. Cars will start speaking to each other and to our infrastructure. They will drive themselves, smudging the line between driver and passenger. Google, Apple, Uber, and other tech companies are invading the transportation marketplace with fresh technology and no ingrained attitudes about how things are done.
>
> The Bolt is the most concrete evidence yet that the largest car companies in the world are contemplating a very different kind of future too. GM knows the move from gasoline to electricity will be a minor one compared to where customers are headed next: away from driving and away from owning cars. In 2017, GM will give Cadillac sedans the ability to control themselves on the highway. Instead of dismissing Google as a smart-aleck kid grabbing a seat at the adults’ table, GM is talking about partnering with the tech firm on a variety of efforts. Last year GM launched car-sharing programs in Manhattan and Germany and has promised more to come. In January the company announced that it’s investing $500 million in Lyft, and that it plans to work with the ride-sharing company to develop a national network of self-driving cars. GM is thinking about how to use those new business models as it enters emerging markets like India, where lower incomes and already packed metro areas make its standard move—put two cars in every garage—unworkable.
>
> This all feels strange coming from GM because, for all the changes of the past decade and despite the use of words like disruption and mobility, it’s no Silicon Valley outfit.