On the 20th anniversary of John Perry Barlow’s idealistic Davos edict, “A Declaration of the Independence of Cyberspace,” the Economist asked him to revisit those words and consider what he hit and missed. The Internet is no longer an utter Wild West but an odd mix of anarchy and surveillance, a conflict unlikely ever to be fully settled, and one that will continue to produce both positive and negative developments. Barlow’s idea that state governments were remnants of the Industrial Age now seems naive, though his argument that a globalized world demands digital openness is a good one.

An excerpt:

The Economist:

What do you think you got especially right—or wrong?

John Perry Barlow:

I will stand by much of the document as written. I believe that it is still true that the governments of the physical world have found it very difficult to impose their will on cyberspace. Of course, they are as good as they ever were at imposing their will on people whose bodies they can lay a hand on, though it is increasingly easy, as it was then, to use technical means to make the physical location of those bodies difficult to determine.

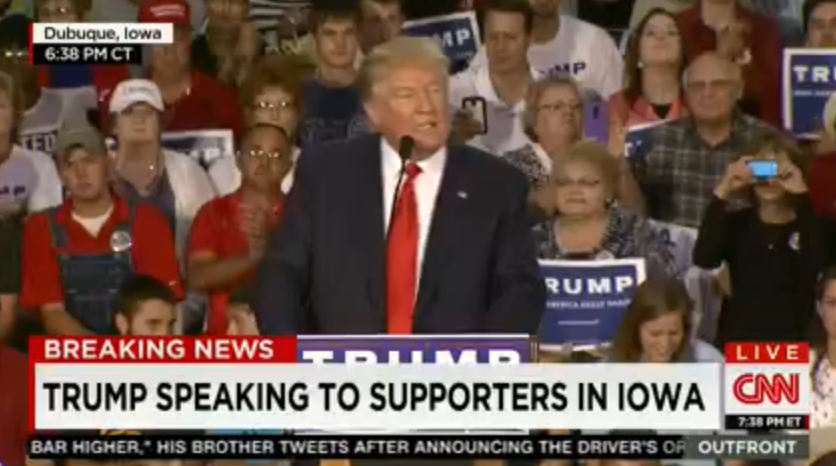

Even when they do get someone cornered, like Chelsea Manning, or Julian Assange, or [Edward] Snowden, they’re not much good at shutting them up. Ed regularly does $50,000 speeches to big corporate audiences and is obviously able to speak very freely. Ditto Julian. And even ditto Chelsea Manning, who despite the fact that she’s serving a 35-year sentence, is still able to speak her mind to all who will listen.

People will sputter, but what about China, what about Silk Road [an online marketplace that sold drugs before being shut down in 2013], what about Kim Dotcom? Well, I believe that a close examination of Chinese censorship is a little more nuanced than the media here would have us believe and is mostly focused on preventing something like the Cultural Revolution. But the Chinese know that they can’t compete in a global marketplace if they don’t allow their best minds full contact with our best minds. Silk Road is already reassembling itself in the gloomier recesses of the dark web, and the arrest and persecution of Kim Dotcom was simply an illegal over-reach by the U.S. government. Yeah, they can enforce the rule of law online provided their ability to break it.•