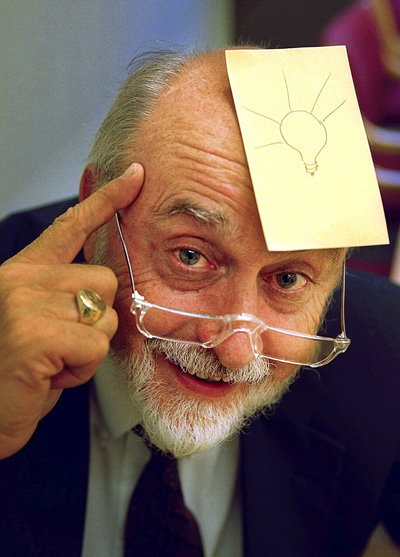

Marshall McLuhan deconstructs a go-go bar, fucking ruining it for everyone.


Tags: Marshall McLuhan

B.F. Skinner, the famed Behaviorist who plays a central role in one of my favorite New Yorker articles, Calvin Trillin’s “The Chicken Vanishes” (subscription required), is responsible for this 1954 video about his pre-personal computer Teaching Machine, which provided automated instruction.

Tags: B.F. Skinner, Calvin Trillin

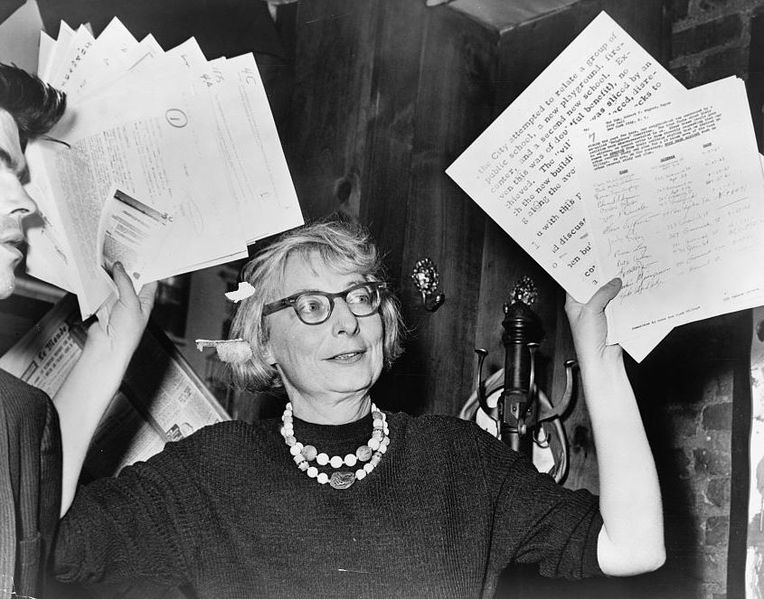

Jonah Lehrer has an intriguing, counterintuitive argument in the Wall Street Journal, which posits that creativity isn’t just something for the chosen few, but a quality everyone possesses. Embedded in the article is the origin story of the Post-It Note. An excerpt:

“Consider the case of Arthur Fry, an engineer at 3M in the paper products division. In the winter of 1974, Mr. Fry attended a presentation by Sheldon Silver, an engineer working on adhesives. Mr. Silver had developed an extremely weak glue, a paste so feeble it could barely hold two pieces of paper together. Like everyone else in the room, Mr. Fry patiently listened to the presentation and then failed to come up with any practical applications for the compound. What good, after all, is a glue that doesn’t stick?

On a frigid Sunday morning, however, the paste would re-enter Mr. Fry’s thoughts, albeit in a rather unlikely context. He sang in the church choir and liked to put little pieces of paper in the hymnal to mark the songs he was supposed to sing. Unfortunately, the little pieces of paper often fell out, forcing Mr. Fry to spend the service frantically thumbing through the book, looking for the right page. It seemed like an unfixable problem, one of those ordinary hassles that we’re forced to live with.

But then, during a particularly tedious sermon, Mr. Fry had an epiphany. He suddenly realized how he might make use of that weak glue: It could be applied to paper to create a reusable bookmark! Because the adhesive was barely sticky, it would adhere to the page but wouldn’t tear it when removed. That revelation in the church would eventually result in one of the most widely used office products in the world: the Post-it Note.”

Tags: Arthur Fry, Jonah Lehrer, Sheldon Silver

So, texting while driving is pretty much fine now. I kid.

Printed knowledge made it possible for us to be intellectuals, to shift attention from land to library, even encouraged us to do so. But what does having a smartphone, with its icons and abbreviations, as our chief means of information gathering portend for us? And what does it mean for the next few generations born to its efficacy?

If we’re mainly typing with our thumbs, mostly communicating in short bursts of letters and symbols, relying largely on algorithms, who are we then? Will an ever-increasing reliance on technology dumb us down? Or will it ultimately free us of ideologies, those abstractions that often conflict violently with reality? And if so, is that a good thing?

In the excellent 2011 Wired article, “The World of the Intellectual vs. the World of the Engineer,” Timothy Ferris makes an argument for rigorous science over intellectual theory. I don’t completely agree with him. Democracy was a pretty potent and wonderful abstraction before it became a reality. And saying that Freud “discovered nothing and cured nobody” isn’t exactly fair since realizing that there are unconscious forces helping to drive our behavior has been an invaluable tool. But Ferris still makes a fascinating argument. An excerpt:

“Being an intellectual had more to do with fashioning fresh ideas than with finding fresh facts. Facts used to be scarce on the ground anyway, so it was easy to skirt or ignore them while constructing an argument. The wildly popular 18th-century thinker Jean-Jacques Rousseau, whose disciples range from Robespierre and Hitler to the anti-vaccination crusaders currently bringing San Francisco to the brink of a public health crisis, built an entire philosophy (nature good, civilization bad) on almost no facts at all. Karl Marx studiously ignored the improving living standards of working-class Londoners — he visited no factories and interviewed not a single worker — while writing Das Kapital, which declared it an ‘iron law’ that the lot of the proletariat must be getting worse. The 20th-century philosopher of science Paul Feyerabend boasted of having lectured on cosmology ‘without mentioning a single fact.’

Eventually it became fashionable in intellectual circles to assert that there was no such thing as a fact, or at least not an objective fact. Instead, many intellectuals maintained, facts depend on the perspective from which they are adduced. Millions were taught as much in schools; many still believe it today.

Reform-minded intellectuals found the low-on-facts, high-on-ideas diet well suited to formulating the socially prescriptive systems that came to be called ideologies. The beauty of being an ideologue was (and is) that the real world with all its imperfections could be criticized by comparing it, not to what had actually happened or is happening, but to one’s utopian visions of future perfection. As perfection exists neither in human society nor anywhere else in the material universe, the ideologues were obliged to settle into postures of sustained indignation. ‘Blind resentment of things as they were was thereby given principle, reason, and eschatological force, and directed to definite political goals,’ as the sociologist Daniel Bell observed.

While the intellectuals were busy with all that, the world’s scientists and engineers took a very different path. They judged ideas (‘hypotheses’) not by their brilliance but by whether they survived experimental tests. Hypotheses that failed such tests were eventually discarded, no matter how wonderful they might have seemed to be. In this, the careers of scientists and engineers resemble those of batters in major-league baseball: Everybody fails most of the time; the great ones fail a little less often.”

••••••••••

Intellectualism allows for uncertainty:

Tags: Timothy Ferris

As their boy was newly named an astronaut in 1962, Neil Armstrong’s parents appeared on I’ve Got a Secret.

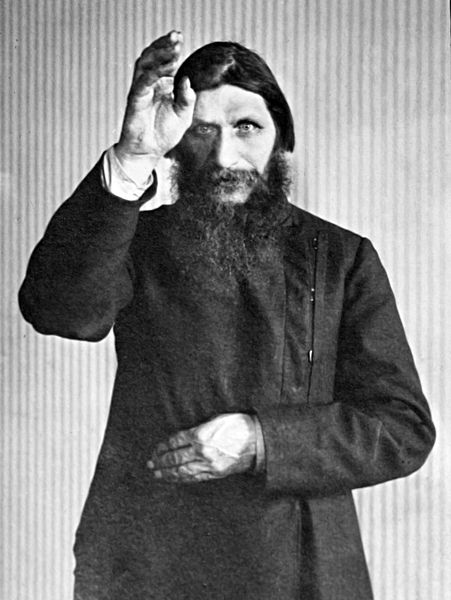

Jane Jacobs saw patterns in the disorder, benevolence in so-called blight, sublimity in street life, angels in the anarchic, and she was (thankfully) a huge pain in the ass until others could see the same. The opening of her landmark 1958 Fortune story, “Downtown Is for People”:

“This year is going to be a critical one for the future of the city. All over the country civic leaders and planners are preparing a series of redevelopment projects that will set the character of the center of our cities for generations to come. Great tracts, many blocks wide, are being razed; only a few cities have their new downtown projects already under construction; but almost every big city is getting ready to build, and the plans will soon be set.

What will the projects look like? They will be spacious, parklike, and uncrowded. They will feature long green vistas. They will be stable and symmetrical and orderly. They will be clean, impressive, and monumental. They will have all the attributes of a well-kept, dignified cemetery. And each project will look very much like the next one: the Golden Gateway office and apartment center planned for San Francisco; the Civic Center for New Orleans; the Lower Hill auditorium and apartment project for Pittsburgh; the Convention Center for Cleveland; the Quality Hill offices and apartments for Kansas City; the downtown scheme for Little Rock; the Capitol Hill project for Nashville. From city to city the architects’ sketches conjure up the same dreary scene; here is no hint of individuality or whim or surprise, no hint that here is a city with a tradition and flavor all its own.

These projects will not revitalize downtown; they will deaden it.”

•••••••••••

“For the answer, we went to the lady over there,” 1969:

Tags: Jane Jacobs

In 1967, William F. Buckley welcomed beaded LSD guru Timothy Leary, who later became mortal enemies with Art Linkletter.

Tags: Timothy Leary, William F. Buckley

There was a theory during Web 1.0, when possibilities seemed infinite, that in the near term the majority of workers would either telecommute or plop down in offices that had communal desks. Why would anyone need to be anchored when the Internet had connected us virtually and set us free? More people certainly telecommute today and many offices have far more democratic architecture, though anonymous desks still aren’t the norm.

But what if the thing that liberated us made us more anchored in other ways? What if being so connected means that our impetus to shift our material lives for new stimuli and opportunities has diminished? In an otherwise interesting Opinion article by Victoria and Todd G. Buchholz in the New York Times about the rising number of young Americans remaining moored in their home states–a trend that’s been growing since before the recession–too little attention is paid to the role new media has played in reducing relocation in the U.S. I would assume a significant percentage of that demographic change has to be assigned to technology: More money is spent on tablets, less on motorcycles. At any rate, here’s an excerpt from the essay:

“The likelihood of 20-somethings moving to another state has dropped well over 40 percent since the 1980s, according to calculations based on Census Bureau data. The stuck-at-home mentality hits college-educated Americans as well as those without high school degrees. According to the Pew Research Center, the proportion of young adults living at home nearly doubled between 1980 and 2008, before the Great Recession hit. Even bicycle sales are lower now than they were in 2000. Today’s generation is literally going nowhere. This is the Occupy movement we should really be worried about.”

Sleazy, yes, but I need the traffic.

Neil deGrasse Tyson testifying in D.C. about the folly of not having a comprehensive U.S. space program.

Tags: Neil deGrasse Tyson

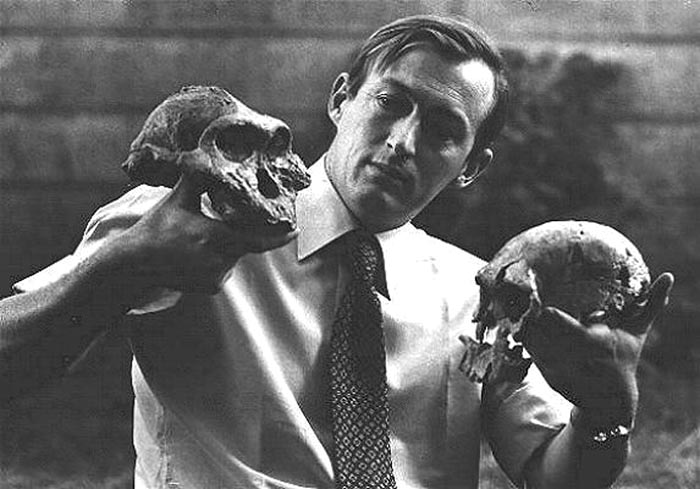

From “The Last Famine,” Paul Salopek’s sweeping Foreign Policy piece on hunger, a contemporary portrait of legendary paleoanthropologist Richard Leakey:

“The Turkana Basin is a freakishly beautiful place. A gargantuan wilderness of hot wind and thorn stubble, it covers all of northwestern Kenya and spills into neighboring Uganda, Ethiopia, and South Sudan. Black volcanoes knuckle up from its pale-ocher horizons. Lake Turkana — the largest alkaline lake in the world, 150 miles long — pools improbably in its arid heart. The lake is sometimes referred to, romantically, as the Jade Sea; from the air, its brackish waters appear a bad shade of green, like tarnished brass. Turkana, Pokot, Gabra, Daasanach, and other cattle nomads eke out a marginal existence around its shores. The basin’s dry sediments, which form part of the Great Rift Valley, hold a dazzling array of hominid remains. Because of this, the Turkana badlands are considered one of the cradles of our species.

Richard and Meave Leakey, the scions of the eminent Kenyan fossil-hunting family, have been probing deep history here for 45 years. Their oldest discovery, a pre-human skull, about 4 million years old, was found on a 1994 expedition led by Meave. An earlier dig headed by Richard uncovered a fabulous, nearly intact skeleton of Homo ergaster, dating back 1.6 million years, dubbed the Turkana Boy. Both Leakeys told me the modern landscape had changed nearly beyond recognition since their excavations began in the 1960s. The influx of food aid and better medical services had more than tripled the human population and stripped the region of most of its wild meat, wiping out the local buffalo, giraffe, and zebra. Domestic livestock — exploding and then crashing with successive droughts — had scalped the savannas’ fragile grasses. While driving one day near his headquarters, the Turkana Basin Institute, Richard pointed at a dusty cargo truck, its bed piled high with illegally cut wood. ‘Charcoal for the Somali refugee camps,’ he said with a puckish smile. ‘The U.N. pays for it.’

Leakey is not only a celebrity thinker. He is also an incorrigible provocateur and a man of big and restless ambitions. Bored with the squabbles of academic research (‘I could never go back to measuring one tooth against another’), he abandoned the summit of paleoanthropology in the late 1980s to assume the directorship of Kenya’s enfeebled wildlife service, where he became a hero to conservationists by ordering elephant poachers shot on sight. A few years later, he helped organize Kenya’s first serious opposition party, and those activities invited years of police harassment. (A 1993 plane crash, which Leakey blames on sabotage, resulted in the loss of both his legs below the knee; he now gets around — driving Land Rovers, piloting planes — on artificial feet.) At one point, I asked him about heavy bandages on his head and hands at a recent lecture at New York’s Museum of Natural History. He had been suffering from skin cancers, he explained, that metastasized from old police-baton injuries. Leakey tends to view humankind through a very long lens, and pessimistically.”

••••••••••

Two Richards, Leakey and Dawkins:

Tags: Meave Leakey, Paul Salopek, Richard Leakey

From a list of urban innovations at the Next Web, a section about using pedestrian energy to illuminate streetlights:

“Think about all the energy expended by pedestrians walking down the street. What if it could be harnessed to power street lighting? The Viha concept involves an electro-active slab with a lower portion that is embedded in the floor, and a mobile upper part that can produce energy.

While it won’t be able to replace power stations, this solution is designed to produce enough energy for use in the immediate vicinity, thus taking strain off the power grid and reducing the power bill of the local authority. In particularly crowded areas, this would work well.”

••••••••••

The Viha concept in practice:

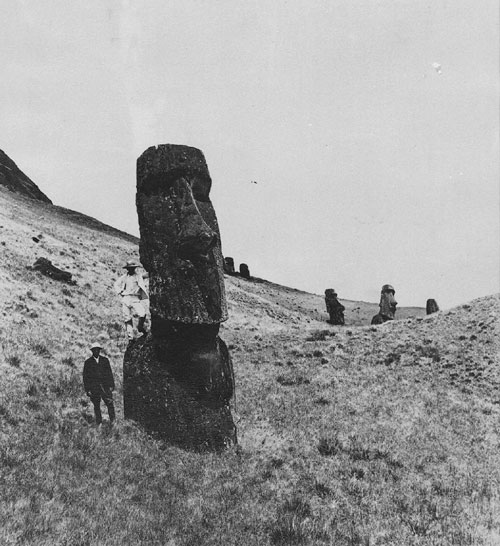

In his new Atlantic piece, “We’re Underestimating the Risk of Human Extinction,” Ross Andersen conducts a smart interview with Oxford’s Professor Nick Bostrom about the possibility of a global Easter Island, in which all of humanity vanishes from Earth. The conversation focuses on the threats we face not from our stars but from ourselves. I think Bostrom’s attitude is too dire, but he only has to be right once, of course. An excerpt:

“What technology, or potential technology, worries you the most?

Bostrom: Well, I can mention a few. In the nearer term I think various developments in biotechnology and synthetic biology are quite disconcerting. We are gaining the ability to create designer pathogens and there are these blueprints of various disease organisms that are in the public domain—you can download the gene sequence for smallpox or the 1918 flu virus from the Internet. So far the ordinary person will only have a digital representation of it on their computer screen, but we’re also developing better and better DNA synthesis machines, which are machines that can take one of these digital blueprints as an input, and then print out the actual RNA string or DNA string. Soon they will become powerful enough that they can actually print out these kinds of viruses. So already there you have a kind of predictable risk, and then once you can start modifying these organisms in certain kinds of ways, there is a whole additional frontier of danger that you can foresee.

In the longer run, I think artificial intelligence—once it gains human and then superhuman capabilities—will present us with a major risk area. There are also different kinds of population control that worry me, things like surveillance and psychological manipulation pharmaceuticals.”


Tags: Nick Bostrom, Ross Andersen

The Handroid has potential for creating better prosthetics and for the remote handling of dangerous materials. By the good people at ITK. (Thanks Singularity Hub.)

In the Atlantic, celebrity scientist Neil deGrasse Tyson discusses his new book about America’s foundering space program. An excerpt:

“You write that space exploration is a ‘necessity.’ Why do you think others don’t agree?

I don’t think they’ve thought it through. Most people who don’t agree say, ‘We have problems here on Earth. Let’s focus on them.’ Well, we are focusing on them. The budget of social programs in the federal tax base is 50 times greater for social programs than it is for NASA. We’re already focused in ways that many people who are NASA naysayers would rather it become. NASA is getting half a penny on a dollar — I’m saying let’s double it. A penny on a dollar would be enough to have a real Mars mission in the near future.

Can the United States catch up in the 21st-century space race?

When everyone agrees to a single solution and a single plan, there’s nothing more efficient in the world than an efficient democracy. But unfortunately the opposite is also true, there’s nothing less efficient in the world than an inefficient democracy. That’s when dictatorships and other sort of autocratic societies can pass you by while you’re bickering over one thing or another.

But, I can tell you that when everything aligns, this is a nation where people are inventing the future every day. And that future is brought to you by scientists, engineers, and technologists. That’s how I’ve always viewed it. Once people understand that, I don’t see why they wouldn’t say, ‘Sure, let’s double NASA’s budget to an entire penny on a dollar! And by the way, here’s my other 25 pennies for social programs.’ I think it’s possible and I think it can happen, but people need to stop thinking that NASA is some kind of luxury project that can be done on disposable income that we happen to have left over. That’s like letting your seed corn rot in the storage basin.”

Tags: Neil deGrasse Tyson

The opening of the new Economist article, “Computing with Soup,” which examines the medical benefits of using DNA-laced liquid instead of silicon chips:

“EVER since the advent of the integrated circuit in the 1960s, computing has been synonymous with chips of solid silicon. But some researchers have been taking an alternative approach: building liquid computers using DNA and its cousin RNA, the naturally occurring nucleic-acid molecules that encode genetic information inside cells. Rather than encoding ones and zeroes into high and low voltages that switch transistors on and off, the idea is to use high and low concentrations of these molecules to propagate signals through a kind of computational soup.

Computing with nucleic acids is much slower than using transistors. Unlike silicon chips, however, DNA-based computers could be made small enough to operate inside cells and control their activity. ‘If you can programme events at a molecular level in cells, you can cure or kill cells which are sick or in trouble and leave the other ones intact. You cannot do this with electronics,’ says Luca Cardelli of Microsoft’s research centre in Cambridge, England, where the software giant is developing tools for designing molecular circuits.” (Thanks Browser.)
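For the curious, here is a toy sketch of the idea in that excerpt: treating high and low concentrations as ones and zeroes. It is an illustration only, not how Cardelli's group or anyone else actually designs molecular circuits. The threshold, the nanomolar units, and the function names are all assumptions made up for this example.

```python
# Toy model only: the threshold, units, and output levels are hypothetical,
# not taken from the Economist article or from any real lab protocol.
HIGH_THRESHOLD_NM = 50.0   # at or above this, a signal reads as "1"
HIGH_OUTPUT_NM = 100.0     # concentration a gate releases for "1"
LOW_OUTPUT_NM = 5.0        # background concentration standing in for "0"


def is_high(concentration_nm: float) -> bool:
    """Read a strand concentration as a logical 1 (high) or 0 (low)."""
    return concentration_nm >= HIGH_THRESHOLD_NM


def and_gate(input_a_nm: float, input_b_nm: float) -> float:
    """Release a high output concentration only if both inputs are high."""
    both_high = is_high(input_a_nm) and is_high(input_b_nm)
    return HIGH_OUTPUT_NM if both_high else LOW_OUTPUT_NM


def or_gate(input_a_nm: float, input_b_nm: float) -> float:
    """Release a high output concentration if either input is high."""
    either_high = is_high(input_a_nm) or is_high(input_b_nm)
    return HIGH_OUTPUT_NM if either_high else LOW_OUTPUT_NM


if __name__ == "__main__":
    # Print a truth table, reading each concentration back as a bit.
    for a in (LOW_OUTPUT_NM, HIGH_OUTPUT_NM):
        for b in (LOW_OUTPUT_NM, HIGH_OUTPUT_NM):
            print(int(is_high(a)), int(is_high(b)),
                  "AND ->", int(is_high(and_gate(a, b))),
                  "OR ->", int(is_high(or_gate(a, b))))
```

Because each gate's output is itself a concentration, it can feed the next gate, which is the "propagate signals through a soup" part of the description.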

Look up in the sky and you will see neither bird nor plane but the future–it is here now. From Farhad Manjoo’s new Slate piece, “I Love You, Killer Robots”:

“It was way back in May 2010 that I first spotted the flying drones that will take over the world. They were in a video that Daniel Mellinger, one of the robots’ apparently too-trusting creators, proudly posted on YouTube. The clip, titled ‘Aggressive Maneuvers for Autonomous Quadrotor Flight,’ depicts a scene at a robotics lab at the University of Pennsylvania, though a better term for this den might be ‘drone training camp.’

In the video, an insectlike, laptop-sized ‘quadrotor’ performs a series of increasingly difficult tricks. First, it flies up and does a single flip in the air. Then a double flip. Then a triple flip. In a voice-over so dry it suggests he has no idea the power he’s dealing with, Mellinger says, ‘We developed a method for flying to any position in space with any reasonable velocity or pitch angle.’ What does this mean? It means the drone can fly through or around pretty much any obstacle. We see it dance through an open window with fewer than 3 inches of clearance on either side. Next, it flies and perches on an inverted surface—lying in wait.”

Tags: Farhad Manjoo

Up to 18 mph. From the good folks at Boston Dynamics.

From “Can You Build a Human Body?” an interactive BBC feature that explains how close we are to artificially creating a complete array of functioning organs. From the section on skin:

“One of the greatest challenges in bionics is to replicate skin – its ability to feel pressure, temperature and pain is incredibly difficult to reproduce.

Prof Ali Javey, from the University of California, Berkeley, is trying to develop an ‘e-skin,’ a material that ‘mechanically has the same properties as skin.’ He has already woven a web of complex electronics and pressure sensors into a plastic which can bend and stretch.

Getting those sensors to send data to a computer could give a sense of touch to robots.”

••••••••••

Printing electronic skin:

Tags: Ali Javey

"These realms of knowledge contain concepts such as data mining, non-linear dynamics and chaos theory." (Image by Steve Jurvetson.)

From “School for Quants,” Sam Knight’s interesting Financial Times look at the training of the next generation of financial-sector math geniuses who will likely be building and destroying and building and destroying the world’s economy in the near future:

“As of this winter, the centre had about 60 PhD students, of whom 80 per cent were men. Virtually all hailed from such forbiddingly numerate subjects as electrical engineering, computational statistics, pure mathematics and artificial intelligence. These realms of knowledge contain concepts such as data mining, non-linear dynamics and chaos theory that make many of us nervous just to see written down. Philip Treleaven, the centre’s director, is delighted by this. ‘Bright buggers,’ he calls his students. ‘They want to do great things.’

In one sense, the centre is the logical culmination of a relationship between the financial industry and the natural sciences that has been deepening for the past 40 years. The first postgraduate scientists began to crop up on trading floors in the early 1970s, when rising interest rates transformed the previously staid calculations of bond trading into a field of complex mathematics. The most successful financial equation of all time – the Black-Scholes model of options pricing – was published in 1973 (the authors were awarded a Nobel prize in 1997).

By the mid-1980s, the figure of the ‘quantitative analyst’ or ‘quant’ or ‘rocket scientist’ (most contemporary quants disdain this nickname, pointing out that rocket science is not all that complicated any more) was a rare but not unheard-of species in most investment houses. Twenty years later, the twin explosions of cheap credit and cheap computing power made quants into the banking equivalent of super-charged particles. Given freedom to roam, the best were able – it seemed – to summon ever more refined, risk-free and sophisticated financial products from the edges of the known universe.

Of course it all looks rather different now. Derivatives so fancy you need a degree in calculus to understand them are hardly flavour of the month these days. Proprietary trading desks in banks, the traditional home of quants, have been decimated by losses and attempts at regulation since the start of the financial crisis. There is nothing like the number of jobs there used to be.” (Thanks Browser.)
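Since the excerpt name-checks Black-Scholes, here is a minimal sketch of the textbook formula for a European call option on a stock that pays no dividends. It is the standard closed-form version, not anything specific to the centre Knight describes. The function names and the sample inputs are made up for illustration.

```python
from math import erf, exp, log, sqrt


def norm_cdf(x: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def black_scholes_call(spot: float, strike: float, rate: float,
                       volatility: float, years: float) -> float:
    """Textbook Black-Scholes price of a European call, no dividends.

    spot: current share price; strike: exercise price;
    rate: annual risk-free rate (continuously compounded);
    volatility: annualized standard deviation of returns;
    years: time to expiry.
    """
    d1 = (log(spot / strike) + (rate + 0.5 * volatility ** 2) * years) / (
        volatility * sqrt(years))
    d2 = d1 - volatility * sqrt(years)
    return spot * norm_cdf(d1) - strike * exp(-rate * years) * norm_cdf(d2)


if __name__ == "__main__":
    # Hypothetical inputs: share at 100, strike 100, 5% rate,
    # 20% volatility, one year to expiry. Prints roughly 10.45.
    print(round(black_scholes_call(100.0, 100.0, 0.05, 0.2, 1.0), 2))
```

The only inputs are the share price, the strike, the interest rate, the time left and the volatility, and volatility is the one that has to be estimated rather than looked up.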

Tags: Sam Knight

Two Texas doctors, Billy Cohn and Bud Frazier, create life without a pulse.

Tags: Billy Cohn, Bud Frazier

We can’t unlearn what is learned, although we do have the capacity to change how we use knowledge, to improve or deteriorate ethically. From a story about civilian drones in the Economist:

“Safety is not the only concern associated with the greater use of civilian drones, however. There is also the question of privacy. In America, at least, neither the constitution nor common law prohibits the police, the media or anyone else from operating surveillance drones. As the law stands, citizens do not have a reasonable expectation of privacy in a public place. That includes parts of their own backyards that are visible from a public vantage point, including the sky. The Supreme Court has been very clear on the matter. The American Civil Liberties Union, a campaign group, says drones raise ‘very serious privacy issues’ and are pushing America ‘willy-nilly toward an era of aerial surveillance without any steps to protect the traditional privacy that Americans have always enjoyed and expected.’”

"The oceans will boil away and the atmosphere will dry out as water vapor leaks into space." (Image by Pierre Cardin.)

As the sun ages, its light becomes stronger, not weaker. What to do when the future grows too bright, if we even make it that far? From Andrew Grant’s excellent new Discover article, “How to Survive the End of the Universe”:

“For [theoretical physicist Glenn] Starkman and other futurists, the fun begins a billion years from now, a span 5,000 times as long as the era in which Homo sapiens has roamed Earth. Making the generous assumption that humans can survive multiple ice ages and deflect an inevitable asteroid or comet strike (NASA predicts that between now and then, no fewer than 10 the size of the rock that wiped out the dinosaurs will hit), the researchers forecast we will then encounter a much bigger problem: an aging sun.

Stable stars like the sun shine by fusing hydrogen atoms together to produce helium and energy. But as a star grows older, the accumulating helium at the core pushes those energetic hydrogen reactions outward. As a result, the star expands and throws more and more heat into the universe. Today’s sun is already 40 percent brighter than it was when it was born 4.6 billion years ago. According to a 2008 model by astronomers K.P. Schröder and Robert Connon Smith of the University of Sussex, England, in a billion years the sun will unleash 10 percent more energy than it does now, inducing an irrefutable case of global warming here on Earth. The oceans will boil away and the atmosphere will dry out as water vapor leaks into space, and temperatures will soar past 700 degrees Fahrenheit, all of which will transform our planet into a Venusian hell-scape choked with thick clouds of sulfur and carbon dioxide. Bacteria might temporarily persist in tiny pockets of liquid water deep beneath the surface, but humanity’s run in these parts would be over.

[Astronomer Greg] Laughlin was intrigued by the idea of using simulations to traverse enormous gulfs of time: ‘It opened my eyes to the fact that things will still be there in timescales that dwarf the current age of the universe.’

Such a cataclysmic outcome might not matter, though, if proactive Earthlings figure out a way to colonize Mars first. The Red Planet offers a lot of advantages as a safety spot: It is relatively close and appears to contain many of life’s required ingredients. A series of robotic missions, from Viking in the 1970s to the Spirit rover still roaming Mars today, have observed ancient riverbeds and polar ice caps storing enough water to submerge the entire planet in an ocean 40 feet deep. This past August the Mars Reconnaissance Orbiter beamed back time-lapse photos suggesting that salty liquid water still flows on the surface.

The main deterrent to human habitation on Mars is that it is too cold. A brightening sun could solve that—or humans could get the job started without having to wait a billion years. ‘From what we know, Mars did have life and oceans and a thick atmosphere,’ says NASA planetary scientist Christopher McKay. ‘And we could bring that back.’”

Tags: Andrew Grant, Christopher McKay, Glenn Starkman, Greg Laughlin