In his Aeon think-piece about environmentalism, Liam Heneghan suggests that to save nature we need to free ourselves of some accepted notions of preservation in favor of a more integrative approach:

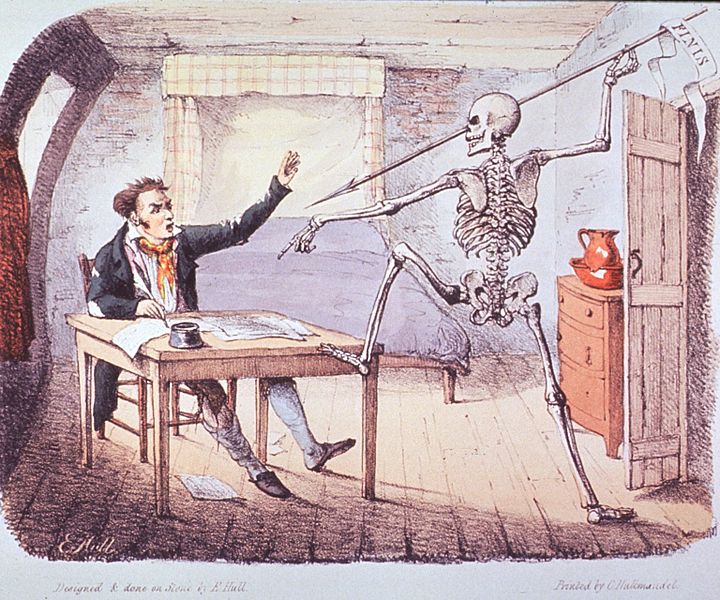

“The environmental historian Donald Worster writes about the fall of the ‘balance of nature’ as an idea, and points out that this disruptive world-view makes nature seem awfully like the human sphere. ‘All history,’ he notes, ‘has become a record of disturbance, and that disturbance comes from both cultural and natural agents.’ Thus he places droughts and pests alongside corporate takeovers and the invasion of the academy by French literary theory. If the idea of a balance resurrects Plato and Aristotle, the non-equilibrium, disturbance-inclined view may have its own Greek hero: Heraclitus, pagan saint of flux. ‘Thunderbolt,’ Heraclitus wrote in Fragment 64, ‘steers all things.’

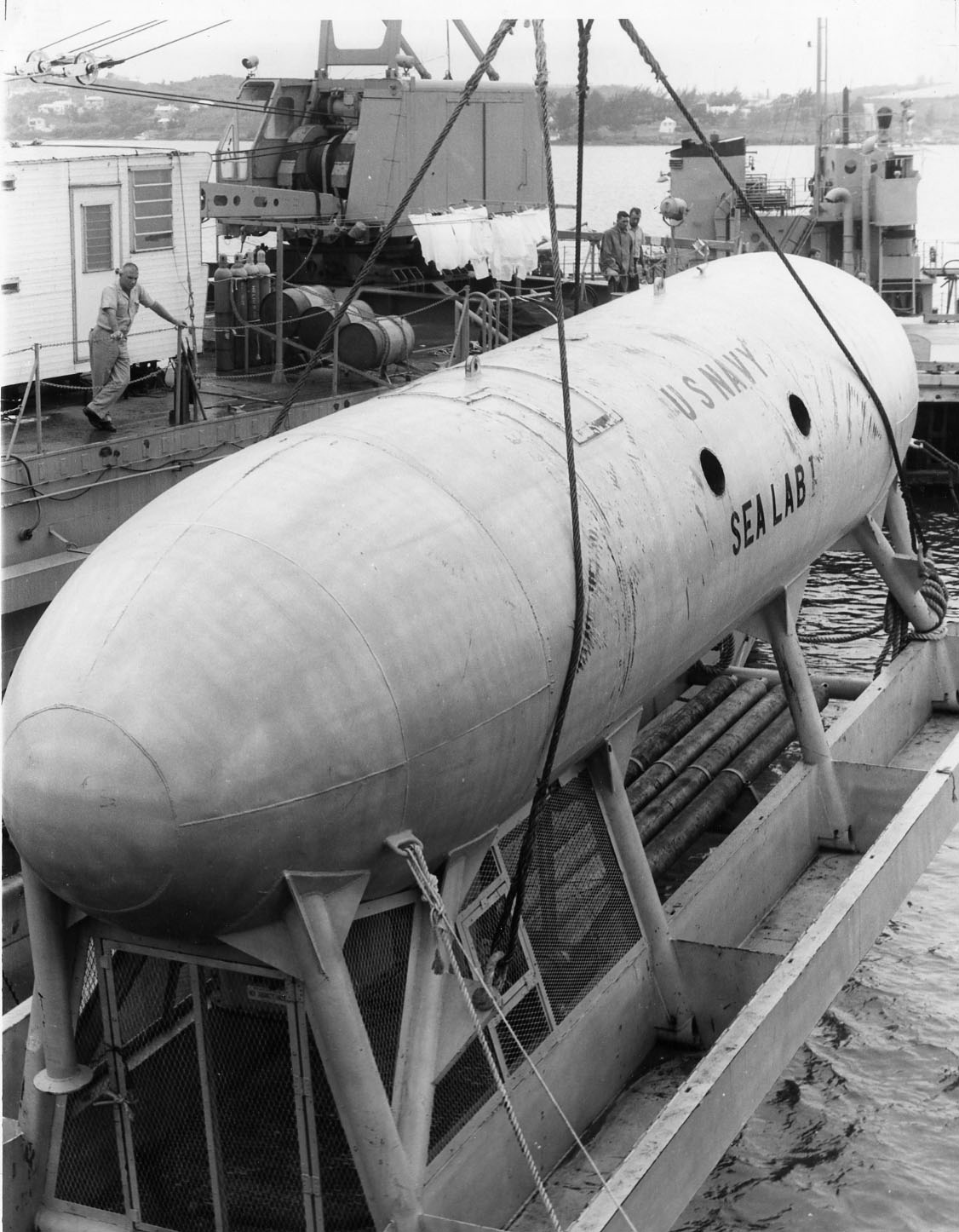

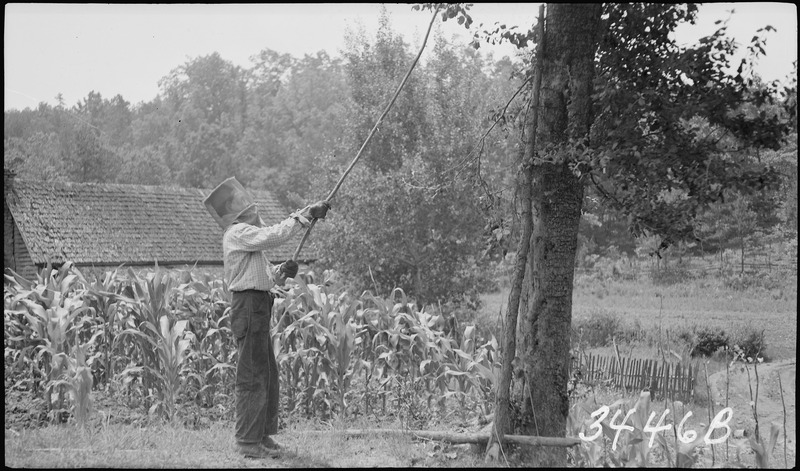

In its brief history, the science of ecology appears to have smuggled in enough ancient metaphysics to make any Greek philosopher nod with approval. However, the question remains. If the handsaw and hurricane are equivalents in their ability to lay a forest low, it is hard to see how we can scientifically criticise the human destruction of ecosystems. Why should we, for instance, concern ourselves with the fate of the Western Ghats if alien introductions are just another disturbance, no different from the more natural-seeming migration of species? The point of conservation in the popular imagination and in many policy directives is that it resists human depredations to preserve important species in ancient, intact, fully functional natural ecosystems. If we have no ‘balance of nature’, this is much harder to defend.

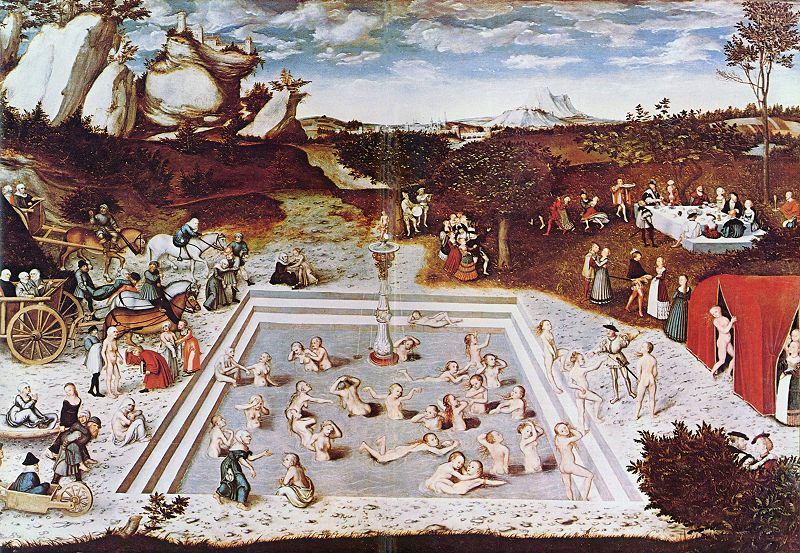

If we lose the ideal of balance, then, we lose a powerful motive for environmental conservation. However, there might be some unintended benefits. A dynamic, ‘disturbance’ approach has fostered some of the most promising new approaches to environmental problems such as urban ecology and restoration ecology. That’s because it is much less concerned with keeping humans and nature separate from one another.”