There’s no better source for thought-provoking essays on the web than the remarkable Aeon site. Several pieces each week make me glad the Internet exists. The latest pair of examples is Leo Hollis’ exploration of future-proofing cities in an age of extreme weather and Jessa Gamble’s study of technological “remedies” for sleep.

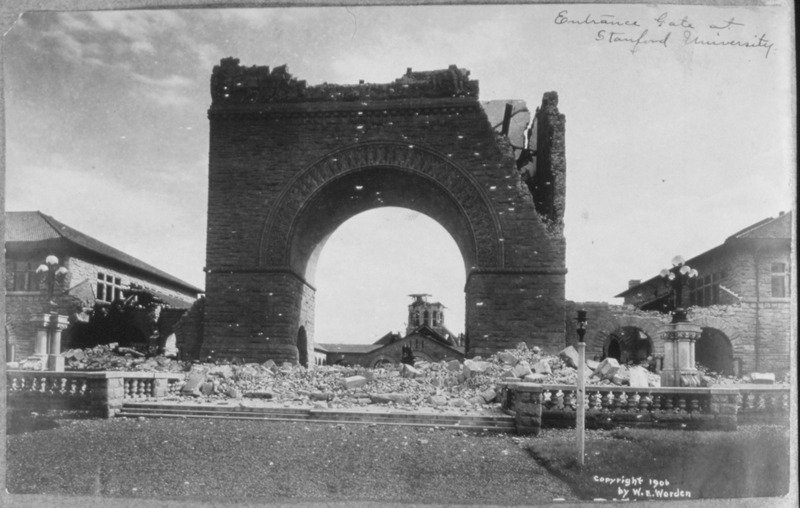

“We do not, however, need to rely on speculation to imagine the impact of extreme weather events on the city. We have seen this scenario unfold before.

On Thursday, 13 July, 1995, the temperature in downtown Chicago rose to a record 104ºF (40ºC), the high point in an unrelenting week of heat. Combined with high humidity, it was so hot that it was almost impossible to move around without discomfort. At the beginning of the week, people made jokes, broke open beers and celebrated the arrival of the good weather. But after seven days and nights of ceaseless heat, according to the Chicago Tribune:

Overheated Chicagoans opened an estimated 3,000 fire hydrants, leading to record water use. The Chicago Park District curtailed programs to keep children from exerting themselves in the heat. Swimming pools were packed, while some sought relief in cool basements. People attended baseball games with wet towels on their heads. Roads buckled and some drawbridges had to be hosed down to close properly.

Only once the worst of the heatwave had passed were authorities able to audit the damage. More than 739 people died from heat exhaustion, dehydration, or kidney failure, despite warnings from meteorologists that dangerous weather was on its way. Hospitals found it impossible to cope. In a vain attempt to help, one owner of a meatpacking firm offered one of his refrigeration trucks to store the dead; it was so quickly filled with the bodies of the poor, infirm and elderly that he had to send eight more vehicles. Afterwards, the autopsies told a grim, predictable tale: the majority of the dead were old people who had run out of water, or had been stuck in overheated apartments, abandoned by their neighbours.

It is easy to forget that cities are made out of people who live and thrive in the spaces between buildings

In response to the crisis, a team from the US Centers for Disease Control and Prevention (CDC) scoured the city for the causes of such a high number of deaths, hoping to prevent a similar disaster elsewhere. The results were predictable: the people who died had failed to find assistance or refuge. They had died on their own, without help. In effect, the report blamed the dead for their failure to leave their apartments, ensure that they had enough water, or check that the air conditioning was working.

These two scenarios offer a bleak condemnation of our urban future. Natural disasters appear to be inevitable, and yet we seem largely incapable of readying ourselves for the unexpected. What can we do to prepare, and perhaps prevent, coming catastrophe?”

“Work, friendships, exercise, parenting, eating, reading — there just aren’t enough hours in the day. To live fully, many of us carve those extra hours out of our sleep time. Then we pay for it the next day. A thirst for life leads many to pine for a drastic reduction, if not elimination, of the human need for sleep. Little wonder: if there were a widespread disease that similarly deprived people of a third of their conscious lives, the search for a cure would be lavishly funded. It’s the Holy Grail of sleep researchers, and they might be closing in.

As with most human behaviours, it’s hard to tease out our biological need for sleep from the cultural practices that interpret it. The practice of sleeping for eight hours on a soft, raised platform, alone or in pairs, is actually atypical for humans. Many traditional societies sleep more sporadically, and social activity carries on throughout the night. Group members get up when something interesting is going on, and sometimes they fall asleep in the middle of a conversation as a polite way of exiting an argument. Sleeping is universal, but there is glorious diversity in the ways we accomplish it.

Different species also seem to vary widely in their sleeping behaviours. Herbivores sleep far less than carnivores (four hours for an elephant, compared with almost 20 hours for a lion), presumably because it takes them longer to feed themselves, and vigilance is selected for. As omnivores, humans fall between the two sleep orientations. Circadian rhythms, the body’s master clock, allow us to anticipate daily environmental cycles and arrange our organs’ functions along a timeline so that they do not interfere with one another.

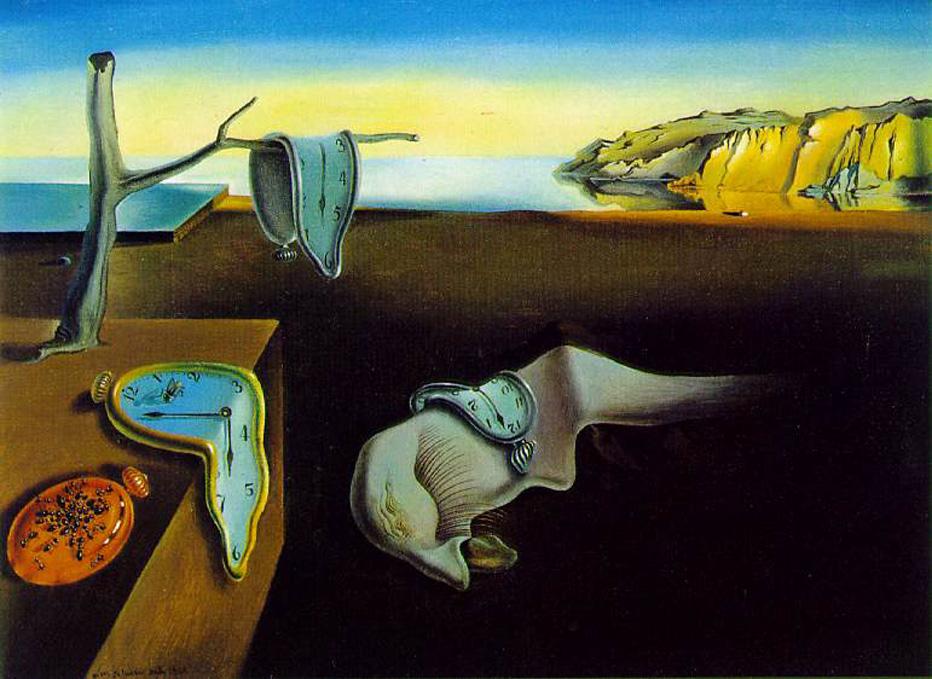

Our internal clock is based on a chemical oscillation, a feedback loop on the cellular level that takes 24 hours to complete and is overseen by a clump of brain cells behind our eyes (near the meeting point of our optic nerves). Even deep in a cave with no access to light or clocks, our bodies keep an internal schedule of almost exactly 24 hours. This isolated state is called ‘free-running’, and we know it’s driven from within because our body clock runs just a bit slow. When there is no light to reset it, we wake up a few minutes later each day. It’s a deeply engrained cycle found in every known multi-cellular organism, as inevitable as the rotation of the Earth — and the corresponding day-night cycles — that shaped it.

Human sleep comprises several 90-minute cycles of brain activity. In a person who is awake, electroencephalogram (EEG) readings are very complex, but as sleep sets in, the brain waves get slower, descending through Stage 1 (relaxation) and Stage 2 (light sleep) down to Stage 3 and slow-wave deep sleep. After this restorative phase, the brain has a spurt of rapid eye movement (REM) sleep, which in many ways resembles the waking brain. Woken from this phase, sleepers are likely to report dreaming.

One of the most valuable outcomes of work on sleep deprivation is the emergence of clear individual differences — groups of people who reliably perform better after sleepless nights, as well as those who suffer disproportionately. The division is quite stark and seems based on a few gene variants that code for neurotransmitter receptors, opening the possibility that it will soon be possible to tailor stimulant variety and dosage to genetic type.

Around the turn of this millennium, the biological imperative to sleep for a third of every 24-hour period began to seem quaint and unnecessary. Just as the birth control pill had uncoupled sex from reproduction, designer stimulants seemed poised to remove us yet further from the archaic requirements of the animal kingdom.”