Drugs have always been for polite people, too, though the packaging is often nicer. Prescriptions written on clean, white sheets of paper dispense painkillers with alarming regularity now, but it’s always been one high or another. From “White-Collar Pill Party,” Bruce Jackson’s 1966 Atlantic article:

Think for a moment: how many people do you know who cannot stop stuffing themselves without an amphetamine and who cannot go to sleep without a barbiturate (over nine billion of those produced last year) or make it through a workday without a sequence of tranquilizers? And what about those six million alcoholics, who daily ingest quantities of what is, by sheer force of numbers, the most addicting drug in America?

The publicity goes to the junkies, lately to the college kids, but these account for only a small portion of the American drug problem. Far more worrisome are the millions of people who have become dependent on commercial drugs. The junkie knows he is hooked; the housewife on amphetamine and the businessman on meprobamate hardly ever realize what has gone wrong.

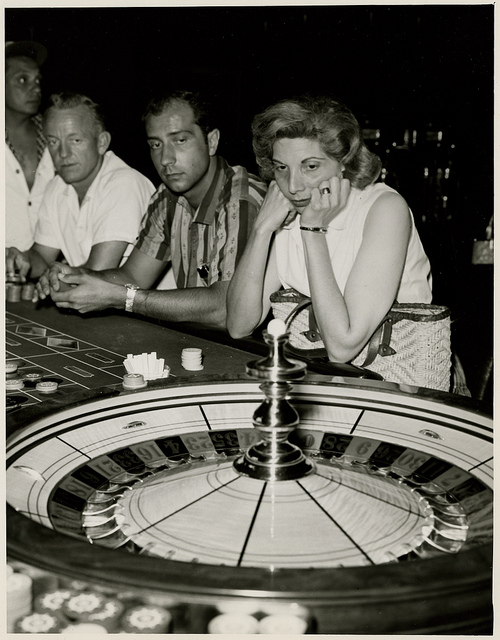

Sometimes the pill-takers meet other pill-takers, and an odd thing happens: instead of using the drug to cope with the world, they begin to use their time to take drugs. Taking drugs becomes something to do. When this stage is reached, the drug-taking pattern broadens: the user takes a wider variety of drugs with increasing frequency. For want of a better term, one might call it the white collar drug scene.

I first learned about it during a party in Chicago last winter, and the best way to introduce you will be to tell you something about that evening, the people I met, what I think was happening.

There were about a dozen people in the room, and over the noise from the record player scraps of conversation came through:

“Now the Desbutal, if you take it with this stuff, has a peculiar effect, contraindication, at least it did for me. You let me know if you …”

“I don’t have one legitimate prescription, Harry, not one! Can you imagine that?” “I’ll get you some tomorrow, dear.”

“… and this pharmacist on Fifth will sell you all the leapers [amphetamines] you can carry—just like that. Right off the street. I don’t think he’d know a prescription if it bit him.” “As long as he can read the labels, what the hell.”

“You know, a funny thing happened to me. I got this green and yellow capsule, and I looked it up in the Book, and it wasn’t anything I’d been using, and I thought, great! It’s not something I’ve built a tolerance to. And I took it. A couple of them. And you know what happened? Nothing! That’s what happened, not a goddamned thing.”

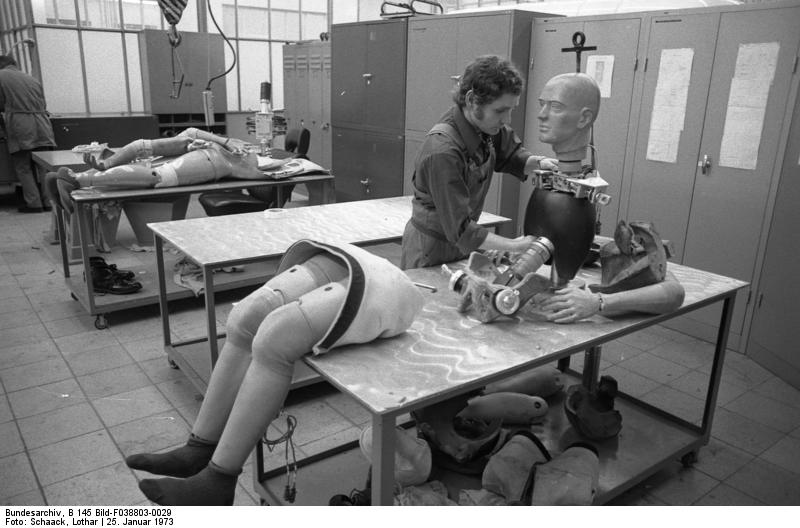

The Book—the Physicians’ Desk Reference, which lists the composition and effects of almost all commercial pharmaceuticals produced in this country—passes back and forth, and two or three people at a time look up the contents and possible values of a drug one of them has just discovered or heard about or acquired or taken. The Book is the pillhead’s Yellow Pages: you look up the effect you want (“Sympathomimetics” or “Cerebral Stimulants,” for example), and it tells you the magic columns. The pillheads swap stories of kicks and sound like professional chemists discussing recent developments; others listen, then examine the PDR to see if the drug discussed really could do that.

Eddie, the host, a painter who has received some recognition, had been awake three or four days, he was not exactly sure. He consumes between 150 and 200 milligrams of amphetamine a day, needs a large part of that to stay awake, even when he has slipped a night’s sleep in somewhere. The dose would cause most people some difficulty; the familiar diet pill, a capsule of Dexamyl or Eskatrol, which makes the new user edgy and overenergetic and slightly insomniac the first few days, contains only 10 or 15 milligrams of amphetamine. But amphetamine is one of the few central nervous system stimulants to which one can develop a tolerance, and over the months and years Ed and his friends have built up massive tolerances and dependencies. “Leapers aren’t so hard to give up,” he told me. “I mean, I sleep almost constantly when I’m off, but you get over that. But everything is so damned boring without the pills.”

I asked him if he knew many amphetamine users who have given up the pills.

“For good?”

I nodded.

“I haven’t known anybody that’s given it up for good.” He reached out and took a few pills from the candy dish in the middle of the coffee table, then washed them down with some Coke.