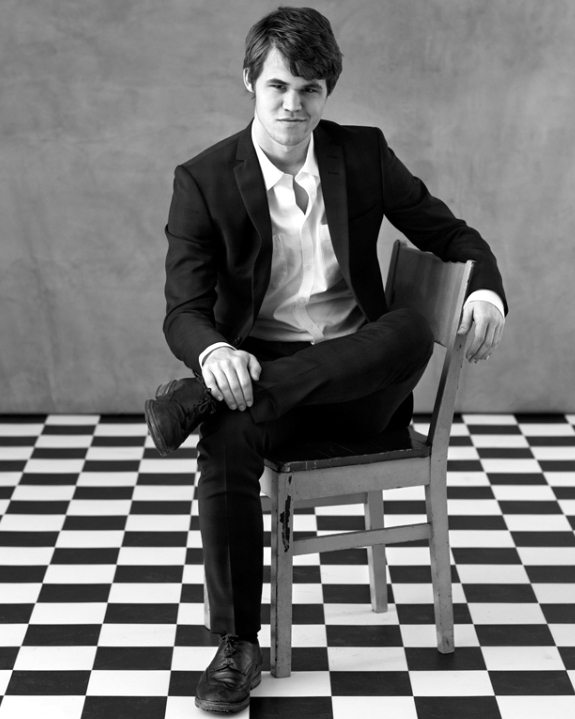

Magnus Carlsen, the best chess player in the world, destroys Bill Gates in nine moves.


Tags: Bill Gates, Magnus Carlsen

A patent has been awarded to Google for a service in which the tech company would dispatch an autonomous taxi to your door, which would whisk you for free to a designated destination that wants your business (a casino or a mall, say). Will things go a step further, with a car sent without your beckoning, before you even know you want to go out, à la Amazon’s reported shipping plans? From Frederic Lardinois at TechCrunch:

“Google may soon offer a new service that combines its advertising business with its knowledge about local transport options, taxis and – in the long run – autonomous cars. The U.S. Patent and Trademark Office last week granted Google a patent for arranging free (or highly discounted) transportation to an advertiser’s business location.

Here’s how it works. Say a Vegas casino really wants your business. Not only could it offer you some free coins, but if it deems the cost worthwhile (using Google’s automated algorithms, of course), it could just offer you a free taxi ride or send an autonomous car to pick you up.”

Tags: Frederic Lardinois

Orwell, of course, was the main inspiration for Apple’s “1984” Super Bowl ad by Ridley Scott, but it also riffed on Fritz Lang’s Metropolis, which is perhaps more influential from a visual perspective than any other work of art ever. Sure, Lang’s plot was overheated, but, my god, those images. You can’t truly be literate about media without having seen it.

The Apple spot “went viral” thirty years ago, even though it was shown only once and there was not yet any infrastructure to propel it from person to person. What careered around the world wasn’t the actual spot but verbal descriptions of it. It was the collision of a new thing (computers) and an old thing (oral history). And soon enough, the centralized media was smashed, though that didn’t make the world perfect. Tyranny doesn’t disappear; it just attempts to reinvent itself.

Steve Jobs introduces the commercial at the 1984 Apple keynote.

Tags: Fritz Lang, George Orwell, Ridley Scott, Steve Jobs

The opening of “Making Good Use of Bad Timing,” Matthew Hutson’s Nautilus article, which reminds us that while memory is usually (though not always) inelastic, it is malleable:

“Suppose a woman suffering a headache blames it on a car accident she had. Her story is plausible at first, but on closer examination it has flaws. She says the car accident happened four weeks ago, rather than the six weeks when it actually occurred. Plus she recalls her headache coming on sooner than it actually did. Steven Novella, a neurology professor at Yale University, says it’s an honest mistake. Novella’s patients frequently manipulate time, he says, something made clear by comparing their stories to their medical records. ‘People are horrible historians,’ Novella says. ‘Human memory is a malleable subjective story that we tell ourselves.’

We tend to think that coffee makes us alert and pills soothe our aches faster than they actually do, for the same reason that a patient might move their accident closer in time to their headache. In our inner narratives, less time passes between perceived causes and effects than between unrelated events. Such mistakes, called temporal binding, slip into the conjectures we make when connecting the scenes of our lives. Like photos in an album, the causal links between them must be inferred. And we do that, in part, by considering their sequence and the minutes, days, or years that pass between them. Perceptions of time and causality each lean on the other, transforming reality into an unreliable swirl.”

Tags: Matthew Hutson

If I had titled this post “Cuddly Panda Tries To Recover From Penis Injury,” it would have had a much better chance of going viral. For a couple thousand years–and never more than now during the Internet Age–philosophers and scientists have wondered why certain content is spread from person to person. Why is some information more likely to connect us, even if it’s not the most vital to our safety and survival? The answer does not flatter us. From Maria Konnikova at the New Yorker blog:

“In 350 B.C., Aristotle was already wondering what could make content—in his case, a speech—persuasive and memorable, so that its ideas would pass from person to person. The answer, he argued, was three principles: ethos, pathos, and logos. Content should have an ethical appeal, an emotional appeal, or a logical appeal. A rhetorician strong on all three was likely to leave behind a persuaded audience. Replace rhetorician with online content creator, and Aristotle’s insights seem entirely modern. Ethics, emotion, logic—it’s credible and worthy, it appeals to me, it makes sense. If you look at the last few links you shared on your Facebook page or Twitter stream, or the last article you e-mailed or recommended to a friend, chances are good that they’ll fit into those categories.

Aristotle’s diagnosis was broad, and tweets, of course, differ from Greek oratory. So Berger, who is now a professor of marketing at the University of Pennsylvania’s Wharton School, worked with another Penn professor, Katherine Milkman, to put his interest in content-sharing to an empirical test. Together, they analyzed just under seven thousand articles that had appeared in the Times in 2008, between August 30th and November 30th, to try to determine what distinguished pieces that made the most-emailed list. After controlling for online and print placement, timing, author popularity, author gender, length, and complexity, Berger and Milkman found that two features predictably determined an article’s success: how positive its message was and how much it excited its reader. Articles that evoked some emotion did better than those that evoked none—an article with the headline ‘BABY POLAR BEAR’S FEEDER DIES’ did better than ‘TEAMS PREPARE FOR THE COURTSHIP OF LEBRON JAMES.’ But happy emotions (‘WIDE-EYED NEW ARRIVALS FALLING IN LOVE WITH THE CITY’) outperformed sad ones (‘WEB RUMORS TIED TO KOREAN ACTRESS’S SUICIDE’).

Just how arousing each emotion was also made a difference.”

Tags: Maria Konnikova

In his latest Slate column, “Stunt the Growth,” Evgeny Morozov attempts to apply the “Degrowth Movement” of economics to technology. It seems to me pretty hopeless to suggest that we diminish the collateral damage of Big Data (government surveillance, corporations using our personal information for profit, etc.) by volunteering to accept inferior products and paying more for them (financially or otherwise). That’s not the way of markets, nor do I think it’s the way of human nature. That’s not how to tame a giant.

Electric cars and solar power will only become predominant if they offer the same (or better) utility and price as their more environmentally wasteful competitors. In much the same way, people didn’t stick to buying newspapers in print because that was better for journalism and therefore better for democracy; they gravitated to the better publishing platform because it was the better publishing platform. If we want to curb technology’s ill effects, we can’t demand that people move backward; we have to demand that the products move forward. The answer, if it comes, will be born of evolution, not devolution. From Morozov:

“Instead of challenging Silicon Valley on the specifics, why not just acknowledge that the benefits it offers are real—but, like an SUV or always-on air conditioning, they might not be worth the costs? Yes, the personalization of search can give us fabulous results, directing us to the nearest pizza joint in two seconds instead of five. But these three seconds in savings require a storage of data somewhere on Google’s servers. After Snowden, no one is really sure what exactly happens to that data and the many ways in which it can be abused.

For most people, Silicon Valley offers a great and convenient product. But if this great product will eventually smother the democratic system, then, perhaps, we should lower our expectations and accept the fact that two extra seconds of search—like a smaller and slower car—might be a reasonable price to pay for preserving the spaces where democratic politics can still flourish.”

Tags: Evgeny Morozov

If we recognize years in advance that a good-sized asteroid is going to strike Earth, there are methods of deflection. But what if the notice is much shorter? From an Ask Me Anything at Reddit by Ed Lu, a former U.S. astronaut who’s now in the asteroid-nullification business:

“Question:

Theoretically, if we learned that a moderately large asteroid was going to impact populated land on earth in 72 hours, would we do anything about it or do we not have the capability right now?

Ed Lu:

Evacuation would be our only option then, and depending on the size of the asteroid, that may not even be possible. Best not to get into this situation and instead let’s find out years or even decades in advance. BTW, I was one of millions of people who evacuated Houston for a hurricane back in 2004. Believe me, evacuating that many people is not an easy thing! It took us nearly 12 hours just to drive to Austin.”

Tags: Ed Lu

Relatively slow and expensive, the Navia from Induct is nonetheless revolutionary for being the first completely autonomous car to come to market. Built for campus-shuttle duty, the robotic electric vehicle tops out at only 20 kilometers (about 12 miles) per hour and costs $250K, but Brad Templeton, a consultant to Google’s driverless-car division, explains in a short list why it’s still an important step.

“Now 20km/h (12mph) is not very fast, though suitable for a campus shuttle. This slow speed and limited territory may make some skeptical that this is an important development, but it is.

- This is a real product, ready to deploy with civilians, without its own dedicated track or modified infrastructure.

- The price point is actually quite justifiable to people who operate shuttles today, as a shuttle with human driver can cost this much in 1.5 years or less of operation.

- It smashes the concept of the NHTSA and SAE ‘Levels’ which have unmanned operation as the ultimate level after a series of steps. The Navia is at the final level already, just over a constrained area and at low speed. If people imagined the levels were a roadmap of predicted progress, that was incorrect.

- Real deployment is teaching us important things. For example, Navia found that once in operation, teen-agers would deliberately throw themselves in front of the vehicle to test it. Pretty stupid, but a reminder of what can happen.

The low speed does make it much easier to make the vehicle safe. But now it becomes much easier to show that over time, the safe speed can rise as the technology gets better and better.”

___________________________

Working the city center in Lyon, France, which is also home to an IBM pilot program for new traffic-management technology:

Tags: Brad Templeton

In thinking about the big picture, the multiverse is the thing that makes the most sense to me, but the most sensible thing need not be the true thing. But let’s say the dice are rolled billions of times–maybe more, maybe an infinite number of times–and you end up with Earth. We have a nice supply of carbon and other building blocks but hurricanes and disease and drought. We’re amazing, but far from ideal. We’re the vivified Frankenstein monster, wonderful and terrible all at once. Perhaps the ideal version is out there somewhere, or not.

And maybe it’s all a computer simulation. It could be that what’s being run infinitely is a program. God is that woman with the punch cards. The opening of Matthew Francis’ excellent Aeon essay, “Is Life Real?”:

“Our species is not going to last forever. One way or another, humanity will vanish from the Universe, but before it does, it might summon together sufficient computing power to emulate human experience, in all of its rich detail. Some philosophers and physicists have begun to wonder if we’re already there. Maybe we are in a computer simulation, and the reality we experience is just part of the program.

Modern computer technology is extremely sophisticated, and with the advent of quantum computing, it’s likely to become more so. With these more powerful machines, we’ll be able to perform large-scale simulations of more complex physical systems, including, possibly, complete living organisms, maybe even humans. But why stop there?

The idea isn’t as crazy as it sounds. A pair of philosophers recently argued that if we accept the eventual complexity of computer hardware, it’s quite probable we’re already part of an ‘ancestor simulation’, a virtual recreation of humanity’s past. Meanwhile, a trio of nuclear physicists has proposed a way to test this hypothesis, based on the notion that every scientific programme makes simplifying assumptions. If we live in a simulation, the thinking goes, we might be able to use experiments to detect these assumptions.

However, both of these perspectives, logical and empirical, leave open the possibility that we could be living in a simulation without being able to tell the difference. Indeed, the results of the proposed simulation experiment could potentially be explained without us living in a simulated world. And so, the question remains: is there a way to know whether we live a simulated life or not?”

Tags: Matthew Francis

I have major philosophical differences with Facebook, but I seriously doubt it will be a virtual ghost town by 2017. But Princeton researchers, acting as social-media epidemiologists, disagree. So on the day that Sheryl Sandberg became a billionaire (was so pulling for her), some are predicting that the plague will soon be over. From Juliette Garside in the Guardian:

“Facebook has spread like an infectious disease but we are slowly becoming immune to its attractions, and the platform will be largely abandoned by 2017, say researchers at Princeton University.

The forecast of Facebook’s impending doom was made by comparing the growth curve of epidemics to those of online social networks. Scientists argue that, like bubonic plague, Facebook will eventually die out.

The social network, which celebrates its 10th birthday on 4 February, has survived longer than rivals such as Myspace and Bebo, but the Princeton forecast says it will lose 80% of its peak user base within the next three years.

John Cannarella and Joshua Spechler, from the US university’s mechanical and aerospace engineering department, have based their prediction on the number of times Facebook is typed into Google as a search term. The charts produced by the Google Trends service show Facebook searches peaked in December 2012 and have since begun to trail off.

‘Ideas, like diseases, have been shown to spread infectiously between people before eventually dying out, and have been successfully described with epidemiological models,’ the authors claim in a paper entitled Epidemiological modelling of online social network dynamics.”
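For anyone curious what “described with epidemiological models” looks like in practice, here is a minimal sketch of the textbook SIR (susceptible, infected, recovered) model, stepped forward one small time slice at a time. It is not the Princeton authors’ code; their paper reportedly uses a modified version of the model, and the function name `simulate_sir`, the infection rate `beta`, the recovery rate `gamma` and the starting values are illustrative assumptions, not numbers fitted to Facebook data.

```python
def simulate_sir(beta, gamma, s0, i0, days, dt=0.1):
    """Textbook SIR model integrated with forward Euler steps.

    s, i, r are fractions of a fixed population (they always sum to 1).
    beta  -- assumed rate at which contact spreads the "infection"
    gamma -- assumed rate at which infected users "recover" (lose interest)
    Returns one (s, i, r) tuple per day, starting with day 0.
    """
    s, i, r = s0, i0, 1.0 - s0 - i0
    steps_per_day = round(1 / dt)
    history = [(s, i, r)]
    for _ in range(days):
        for _ in range(steps_per_day):
            new_infections = beta * s * i * dt   # susceptibles who catch it
            new_recoveries = gamma * i * dt      # infected who drop out
            s -= new_infections
            i += new_infections - new_recoveries
            r += new_recoveries
        history.append((s, i, r))
    return history


if __name__ == "__main__":
    # Illustrative parameters only: each "infected" user spreads it fast,
    # and drops out after roughly ten days on average.
    for day, (s, i, r) in enumerate(simulate_sir(0.5, 0.1, 0.99, 0.01, 60)):
        if day % 10 == 0:
            print(f"day {day:3d}: susceptible={s:.3f} infected={i:.3f} recovered={r:.3f}")
```

With settings like these, the infected share climbs to a peak and then dwindles once the pool of susceptible people is used up. That rise-and-fall curve is the shape the researchers compared against Google Trends search volume for “Facebook.”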

Tags: John Cannarella, Joshua Spechler, Juliette Garside, Mark Zuckerberg, Sheryl Sandberg

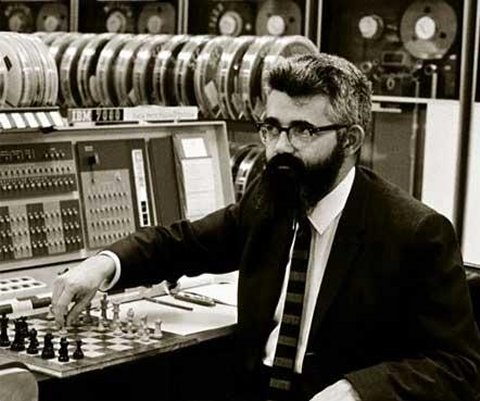

The photo I used to accompany the earlier post about computer intelligence and the photo above are 1966 pictures of John McCarthy, the person who coined the term “Artificial Intelligence.” It was in that year the technologist organized a correspondence chess match (via telegraph) between his computer program and one in Russia. McCarthy lost the series of matches but obviously won in a larger sense. A brief passage about the competition from his Stanford obituary:

“In 1960, McCarthy authored a paper titled, ‘Programs with Common Sense,’ laying out the principles of his programming philosophy and describing ‘a system which is to evolve intelligence of human order.’

McCarthy garnered attention in 1966 by hosting a series of four simultaneous computer chess matches carried out via telegraph against rivals in Russia. The matches, played with two pieces per side, lasted several months. McCarthy lost two of the matches and drew two. ‘They clobbered us,’ recalled [Les] Earnest.

Chess and other board games, McCarthy would later say, were the ‘Drosophila of artificial intelligence,’ a reference to the scientific name for fruit flies that are similarly important in the study of genetics.”

Tags: John McCarthy, Les Earnest

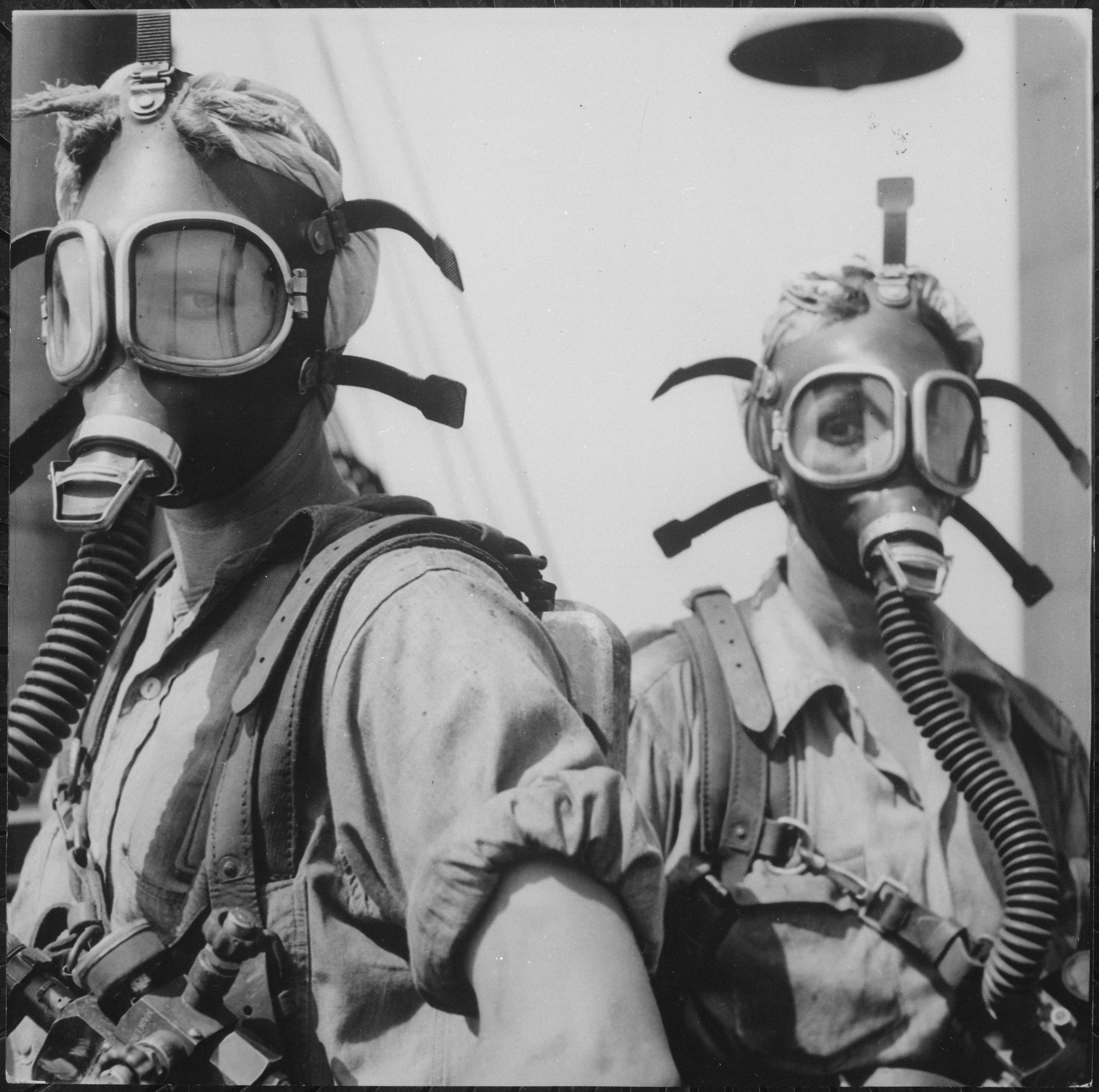

AI can’t do everything humans can do, but any responsibilities that both are capable of handling will be assumed, almost completely, by robots. There’s pretty much no way around that. From a Popular Science report by Kelsey D. Atherton about the proposed robotization of the U.S. military:

“By the middle of this century, U.S. Army soldiers may well be fighting alongside robotic squadmates. General Robert Cone revealed the news at an Army Aviation symposium last week, noting that the Army is considering reducing the size of a Brigade Combat Team from 4,000 soldiers to 3,000, with robots and drones making up for the lost firepower. Cone is in charge of U.S. Army Training and Doctrine Command (TRADOC), the part of the Army responsible for future planning and organization. If the Army can still be as effective with fewer people to a unit, TRADOC will figure out what technology is needed to make that happen.

While not explicitly stated, a major motivation behind replacing humans with robots is that humans are expensive. Training, feeding, and supplying them while at war is pricey, and after the soldiers leave the service, there’s a lifetime of medical care to cover. In 2012, benefits for serving and retired members of the military comprised one-quarter of the Pentagon’s budget request.”

As “film” ceases to be an actual thing and becomes just an idea, what will be different? We’ve always worried about film degrading, but should we worry now that it won’t? Digital has its own flaws, but they’re very different flaws.

While experiencing the imperfections of music on vinyl or movies on film holds an attraction for those of us who enjoy a lo-fi aesthetic, I doubt that these flaws have any inherent value. They just trigger memories, and it’s those memories that contain the real value. People who never knew such decaying media likely won’t miss anything. Their memories will have their own triggers. From Richard Verrier in the Los Angeles Times:

“In a historic step for Hollywood, Paramount Pictures has become the first major studio to stop releasing movies on film in the United States.

Paramount recently notified theater owners that the Will Ferrell comedy Anchorman 2: The Legend Continues, which opened in December, would be the last movie it would release on 35-millimeter film.

The studio’s Oscar-nominated film The Wolf of Wall Street from director Martin Scorsese is the first major studio film that was released all digitally, according to theater industry executives who were briefed on the plans but not authorized to speak about them.

The decision is significant because it is likely to encourage other studios to follow suit, accelerating the complete phase-out of film, possibly by the end of the year. That would mark the end of an era: film has been the medium for the motion picture industry for more than a century.”

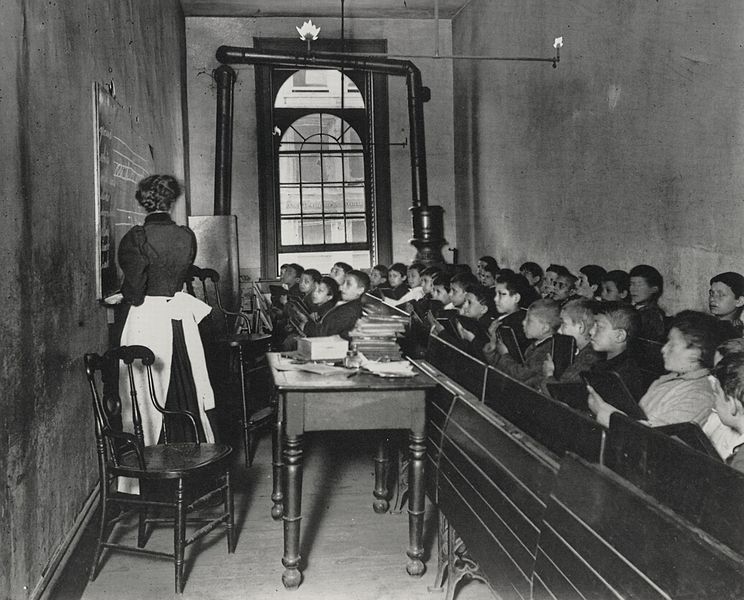

History professors who’ve lectured about Luddites have, in some instances, become Luddites themselves. Massive Open Online Courses (MOOCs) threaten to disrupt universities, largely a luxury good for mostly economy shoppers. It’s a deserved challenge to the system, and that’s the type of challenge that rankles the most. From Thomas Rodham Wells’ essay on the subject at the Philosopher’s Beard:

“The most significant feature of MOOCs is that they have the potential to mitigate the cost disease phenomenon in higher education, and thus disrupt its economic conventions, rather as the recorded music industry did for string quartets. Of course MOOCs aren’t the same thing as residential degree programme classroom courses with tenured professors. In at least some respects they are a clearly inferior product. But then, listening to a CD isn’t the same experience as listening to a string quartet, nor are movies the same as theatre. But they are pretty good substitutes for many purposes, especially when the difference in price between them is so dramatic. And, like MOOCs, they also have non-pecuniary advantages over the original, such as user control and enormous quality improvements on some dimensions.

I think this cost advantage is the real challenge the opponents of MOOCs have to address. Why isn’t this cheap alternative good enough? Given that one can now distribute recordings of lectures by the most brilliant and eloquent academics in the world for a marginal cost of close to zero, the idea that a higher education requires collecting millions of 18-25 year olds together in residential schools in order to attend lectures by relative mediocrities and read the books collected in the university library needs a justification. Otherwise it will come to seem an expensive and elitist affectation. Like paying for a real string quartet at your party, or a handmade mechanical watch rather than just one that works.”

______________________________

“The classroom no longer has anything comparable to the answers outside the classroom”:

Tags: Thomas Rodham Wells

Pioneering computer scientist Roger Schank answered “Artificial Intelligence” when Edge asked him the question, “What Scientific Idea Is Ready for Retirement?” That’s a good reply, since we can’t “explain” to computers how the human brain works when we don’t yet know how it works ourselves. An excerpt:

“It was always a terrible name, but it was also a bad idea. Bad ideas come and go but this particular idea, that we would build machines that are just like people, has captivated popular culture for a long time. Nearly every year, a new movie with a new kind of robot that is just like a person appears in the movies or in fiction. But that robot will never appear in reality. It is not that Artificial Intelligence has failed, no one actually ever tried. (There I have said it.)

David Deutsch, a physicist at Oxford said: ‘No brain on Earth is yet close to knowing what brains do. The enterprise of achieving it artificially — the field of ‘artificial intelligence’ has made no progress whatever during the entire six decades of its existence.’ He adds that he thinks machines that think like people will happen some day.

Let me put that remark in a different light. Will we eventually have machines that feel emotions like people? When that question is asked of someone in AI, they might respond about how we could get a computer to laugh or to cry or to be angry. But actually feeling?

Or let’s talk about learning. A computer can learn can’t it? That is Artificial Intelligence right there. No machine would be smart if it couldn’t learn, but does the fact that Machine Learning has enabled the creation of a computer that can play Jeopardy or provide data about purchasing habits of consumers mean that AI is on its way?

The fact is that the name AI made outsiders to AI imagine goals for AI that AI never had. The founders of AI (with the exception of Marvin Minsky) were obsessed with chess playing, and problem solving (the Tower of Hanoi problem was a big one.) A machine that plays chess well does just that, it isn’t thinking nor is it smart. It certainly isn’t acting like a human. The chess playing computer won’t play worse one day because it drank too much the night before or had a fight with its wife.

Why does this matter? Because a field that started out with a goal different from what its goal was perceived to be is headed for trouble. The founders of AI, and those who work on AI still (me included), want to make computers do things they cannot now do in the hope that something will be learned from this effort or that something will have been created that is of use. A computer that can hold an intelligent conversation with you would be potentially useful. I am working on a program now that will hold an intelligent conversation about medical issues with a user. Is my program intelligent? No. The program has no self knowledge. It doesn’t know what it is saying and it doesn’t know what it knows. The fact that we have stuck ourselves with this silly idea of intelligent machines or AI causes people to misperceive the real issues.”

Tags: Roger Schank

Now that almost all the walls have ears, it doesn’t matter so much if you’re surrounded by actual prison walls or not. The jailers come to you. From a new Spiegel Q&A with Chinese artist and activist Ai Weiwei, a public figure in a time when that description has come to mean something else:

“Spiegel:

Why are you put under such manic surveillance? There are more than a dozen cameras around your house.

Ai:

There’s a unit, I think it’s called ‘Office 608,’ which follows people with certain categories and degrees of surveillance. I am sure I am in the top one. They don’t just tap my telephone, check my computer and install their cameras everywhere — they’re even after me when I’m walking in the park with my son.

Spiegel:

What do the people who observe you want to find out, what don’t they know yet?

Ai:

A year ago, I got a bit aggressive and pulled the camera off one of them. I took out the memory card and asked him if he was a police officer. He said ‘No.’ Then why are you following me and constantly photographing me? He said, ‘No, I never did.’ I said, ‘OK, go back to your boss and tell him I want to talk to him. And if you keep on following me, then you should be a bit more careful and make sure that I don’t notice.’ I was really curious to see what he had on that memory card.

Spiegel:

And?

Ai:

I was shocked because he had photographed the restaurant I had eaten in the previous day from all angles: every room, the cash till, the corridor, the entrance from every angle, every table. I asked myself: Gosh, why do they have to go to so much trouble? Then there were photos of my driver, first of him sitting on a park bench, then a portrait from the front, a portrait from the back, his shoes, from the left, from the right, then me again, then my stroller.

Spiegel:

And he was only one out of several people who follow you?

Ai:

Yes. They must have a huge file on me. But when I gave him back the camera, he asked me not to post a photo of his face on the Internet.

Spiegel:

The person monitoring you asked not to be exposed?

Ai:

Yes. He said he had a wife and children, so I fulfilled his wish. Later I went through the photos we had taken years before at the Great Wall — and there he was again, the same guy. That often happens to me, because I always take so many photos: I keep recognizing my old guards.”

Tags: Ai Weiwei

I’ve not read much sci-fi, so I’m not very familiar with The Forever War author Joe Haldeman. In a new Wired podcast, the writer and Vietnam vet shares his thoughts about warfare in this age of miracles and wonders, when science and science fiction are difficult to disentangle. An excerpt:

“‘I suspect that war will become obsolete only when something worse supersedes it,’ says Joe Haldeman in this week’s episode of the Geek’s Guide to the Galaxy podcast. ‘I’m thinking in terms of weapons that don’t look like weapons. I’m thinking of ways you could win a war without obviously declaring war in the first place.’

Increasingly sophisticated biological and nanotech weapons are one direction war might go, he says, along with advanced forms of propaganda and mind control that would persuade enemy soldiers to switch sides or compel foreign governments to accede to their rivals’ demands. It’s a prospect he finds chilling.

‘One hopes that they’ll never be able to use mind control weapons,’ says Haldeman, ‘because we’re all done for if that happens. I don’t want military people, or political people, to have that type of power over those of us who just get by from day to day.'”

Tags: Joe Haldeman

In “The Texas Flautist and the Fetus,” a variation on Judith Jarvis Thomson’s “violinist analogy” thought experiment about abortion, Dominic Wilkinson of Practical Ethics points out the unintended moral precedent set by the Texas law requiring that Marlise Munoz, a pregnant, brain-dead woman, be kept on life support over the objections of her family:

“The Texan law seems to accept that the woman’s interests are reduced by being in a state close to death (or already being dead). It appears to be justified to ignore her previous wishes and to cause distress to her family in order to save the life of another. If this argument is sound, though, it appears to have much wider implications. For though brain death in pregnant women is rare, there are many patients who die in intensive care who could save the lives of others– by donating their organs. Indeed there are more potential lives at stake, since the organs of a patient dying in intensive care may be used to save the life of up to seven other people.

If it is justified to continue life support machines for Marlise Munoz against her and her family’s wishes, it would also appear to be justified to remove the organs of dying or brain dead patients in intensive care against their and their family’s wishes. Texas would appear to be committed to organ conscription.”

In 1987, when Omni asked Bill Gates and Timothy Leary to predict the future of tech and Robert Heilbroner to speculate on the next phase of economics, David Byrne was asked to prognosticate about the arts two decades hence. It depends on how you parse certain words, but Byrne got a lot right–more channels, narrowcasting, etc. One thing I think he erred on is just how democratized it all would become: “I don’t think we’ll see the participatory art that so many people predict. Some people will use new equipment to make art, but they will be the same people who would have been making art anyway.” Kim and Snooki and cats at pianos would not have been making art anyway. Certainly you can argue that reality television, homemade YouTube videos, and fan fiction aren’t art in the traditional sense, but I would disagree. Reality TV and the like hold no interest for me on the granular level, but the decentralization of media, the unloosing of the cord, is as fascinating to me as anything right now. It’s art writ large, a paradigm shift unlike any we have known before. It’s democracy. The excerpt:

“David Byrne, Lead Singer, Talking Heads:

The line between so-called serious and popular art will blur even more than it already has because people’s attitudes are changing. When organized religion began to lose touch with new ideas and discoveries, it started failing to accomplish its purpose in people’s lives. More and more people will turn to the arts for the kind of support and inspiration religion used to offer them. The large pop-art audience remains receptive to the serious content they’re not getting from religion. Eventually some new kind of formula — an equivalent of religion — will emerge and encompass art, physics, psychiatry, and genetic engineering without denying evolution or any of the possible cosmologies.

I think that people have exaggerated greatly the effects new technology has on the arts and on the number of people who will make art in the future. I realize that computers are in their infancy, but they’re pretty pathetic, and I’m not the only one who’s said that. Computers won’t take into account nuances or vagueness or presumptions or anything like intuition.

I don’t think computers will have any important effect on the arts in 2007. When it comes to the arts they’re just big or small adding machines. And if they can’t ‘think,’ that’s all they’ll ever be. They may help creative people with their bookkeeping, but they won’t help in the creative process.

The video revolution, however, will have some real impact on the arts in the next 20 years. It already has. Because people’s attention spans are getting shorter, more fiction and drama will be done on television, a perfect medium for them. But I don’t think anything will be wiped out; books will always be there; everything will find its place.

Outlets for art, in the marketplace and on television, will multiply and spread. Even the three big TV networks will feature looser, more specialized programming to appeal to special-interest groups. The networks will be freed from the need to try to please everybody, which they do now and inevitably end up with a show so stupid nobody likes it. Obviously this multiplication of outlets will benefit the arts.

I don’t think we’ll see the participatory art that so many people predict. Some people will use new equipment to make art, but they will be the same people who would have been making art anyway. Still, I definitely think that the general public will be interested in art that was once considered avant-garde.

I can’t stand the cult of personality in pop music. I don’t know if that will disappear in the next 20 years, but I hope we see a healthier balance between that phenomenon and the knowledge that being part of a community has its rewards as well.

I don’t think that global video and satellites will produce any global concept of community in the next 20 years, but people will have a greater awareness of their immediate communities. We will begin to notice the great artistic work going on outside of the major cities — outside of New York, L.A., Paris, and London.”•

____________________________________

“Music and performance does not make any sense”:

Tags: David Byrne

Automation and robotics will make us wealthy in the aggregate, but how will most of us share in those riches if employment becomes scarce? In the past, technological innovation has disappeared jobs, but others have come along to replace them, often in fields that didn’t even exist before. But what happens if the second part of the shift never arrives? From “The Onrushing Wave” in the Economist:

“For much of the 20th century, those arguing that technology brought ever more jobs and prosperity looked to have the better of the debate. Real incomes in Britain scarcely doubled between the beginning of the common era and 1570. They then tripled from 1570 to 1875. And they more than tripled from 1875 to 1975. Industrialisation did not end up eliminating the need for human workers. On the contrary, it created employment opportunities sufficient to soak up the 20th century’s exploding population. Keynes’s vision of everyone in the 2030s being a lot richer is largely achieved. His belief they would work just 15 hours or so a week has not come to pass.

Yet some now fear that a new era of automation enabled by ever more powerful and capable computers could work out differently. They start from the observation that, across the rich world, all is far from well in the world of work. The essence of what they see as a work crisis is that in rich countries the wages of the typical worker, adjusted for cost of living, are stagnant. In America the real wage has hardly budged over the past four decades. Even in places like Britain and Germany, where employment is touching new highs, wages have been flat for a decade. Recent research suggests that this is because substituting capital for labour through automation is increasingly attractive; as a result owners of capital have captured ever more of the world’s income since the 1980s, while the share going to labour has fallen.

At the same time, even in relatively egalitarian places like Sweden, inequality among the employed has risen sharply, with the share going to the highest earners soaring. For those not in the elite, argues David Graeber, an anthropologist at the London School of Economics, much of modern labour consists of stultifying ‘bullshit jobs’—low- and mid-level screen-sitting that serves simply to occupy workers for whom the economy no longer has much use. Keeping them employed, Mr Graeber argues, is not an economic choice; it is something the ruling class does to keep control over the lives of others.

Be that as it may, drudgery may soon enough give way to frank unemployment.”

From “Trick of the Eye,” Iwan Rhys Morus’ wonderful Aeon essay about the nature and value of optical illusions:

“People who said that they saw ghosts really did see them, according to Brewster. But they were images produced by the (deluded) mind rather than by any external object: ‘when the mind possesses a control over its powers, the impressions of external objects alone occupy the attention, but in the unhealthy condition of the mind, the impressions of its own creation, either overpower, or combine themselves with the impressions of external objects’. These ‘mental spectra’ were imprinted on the retina just like any others, but they were still products of the mind not the external world. So ghosts were in the eye, but put there by the mind: ‘the “mind’s eye” is actually the body’s eye’, said Brewster.

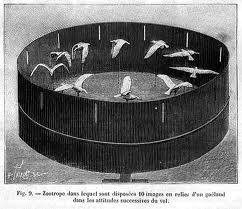

Seeing ghosts demonstrated how the mind-eye co-ordination that generated vision could break down. Philosophical toys such as phenakistiscopes and zoetropes — which exploited the phenomenon of persistence of vision to generate the illusion of movement — did the same thing. The thaumatrope, first described in 1827 by the British physician John Ayrton Paris, juxtaposed two different images on opposite sides of a disc to make a single one by rapid rotation — ‘a very striking and magical effect’. A popular Victorian version had a little girl on one side, a boy on the other. They were positioned so that their lips met in a kiss when the disc rotated.

The daedaleum (later renamed the zoetrope), invented in 1834 by the mathematician William George Horner, was ‘a hollow cylinder … with apertures at equal distances, and placed cylindrically round the edge of a revolving disk’ with drawings on the inside of the cylinder. The device produced ‘the same surprising play of relative motions as the common magic disk does when spun before a mirror’. (The ‘magic disk’ was the phenakistiscope, invented a few years earlier by the Belgian natural philosopher Joseph Plateau). In the zoetrope, the viewer looked through one of the slits in the rotating cylinder to see a moving image — often, a juggling clown or a horse galloping. In the phenakistiscope, they looked through a slit in the rotating disc to see the moving image reflected in a mirror. Faraday, too, experimented with persistence of vision.

Brewster’s kaleidoscope was another philosophical toy that fooled the eye. Brewster described it as an ‘ocular harpsichord’, explaining how the ‘combination of fine forms, and ever-varying tints, which it presents in succession to the eye, have already been found, by experience, to communicate to those who have a taste for this kind of beauty, a pleasure as intense and as permanent as that which the finest ear derives from musical sounds’. The kaleidoscopic illusion was supposed to teach the viewer how to see things properly; it was also, interestingly, meant to be a technology that could mechanise art. It ‘effects what is beyond the reach of manual labour’, said Brewster, exhibiting ‘a concentration of talent and skill which could not have been obtained by uniting the separate exertions of living agents.’”

Tags: Iwan Rhys Morus

Did you grow up sort of poor? I did. Not on food stamps but close. Not in the projects but a couple of buildings away. It leaves a mark. The general theory of poverty has long been that if a poor person received a windfall of cash, it wouldn’t matter because the poverty resides within them. They would be back to square one and in need in no time. A study by Duke epidemiologist Jane Costello about casino money being dispensed to previously poor Cherokee Indians pushed back at that idea to an extent that surprised even the academic herself. From Moises Velasquez-Manoff’s New York Times op-ed, “What Happens When the Poor Receive a Stipend?”:

“When the casino opened, Professor Costello had already been following 1,420 rural children in the area, a quarter of whom were Cherokee, for four years. That gave her a solid baseline measure. Roughly one-fifth of the rural non-Indians in her study lived in poverty, compared with more than half of the Cherokee. By 2001, when casino profits amounted to $6,000 per person yearly, the number of Cherokee living below the poverty line had declined by half.

The poorest children tended to have the greatest risk of psychiatric disorders, including emotional and behavioral problems. But just four years after the supplements began, Professor Costello observed marked improvements among those who moved out of poverty. The frequency of behavioral problems declined by 40 percent, nearly reaching the risk of children who had never been poor. Already well-off Cherokee children, on the other hand, showed no improvement. The supplements seemed to benefit the poorest children most dramatically.

When Professor Costello published her first study, in 2003, the field of mental health remained on the fence over whether poverty caused psychiatric problems, or psychiatric problems led to poverty. So she was surprised by the results. Even she hadn’t expected the cash to make much difference. ‘The expectation is that social interventions have relatively small effects,’ she told me. ‘This one had quite large effects.’

She and her colleagues kept following the children. Minor crimes committed by Cherokee youth declined. On-time high school graduation rates improved. And by 2006, when the supplements had grown to about $9,000 yearly per member, Professor Costello could make another observation: The earlier the supplements arrived in a child’s life, the better that child’s mental health in early adulthood.”

Placing an image from a commercial for Pele’s Soccer Atari video game in a post yesterday reminded me of the gag game, George Plimpton’s Video Falconry, a faux ColecoVision cartridge that was hatched in the wonderfully odd mind of John Hodgman a few years ago. What makes the joke so special is that while it’s a ridiculous concept, it feels like it could be real because it plays on truths of both Plimpton (who was a wonderfully wooden Intellivision pitchman) and ’80s gaming (which wasn’t directed only by market research but by hunches, sometimes awful hunches). You have to be of a certain age and culture to get it, but if you are, it may be the most brilliantly specific joke ever.

It was all over the web in 2011, so many of you are probably familiar with Tom Fulp’s realization of Hodgman’s joke, but have a look at this video in case you missed it or want to relive it.

Tags: George Plimpton, John Hodgman, Tom Fulp

Considering that predictive searching is at our fingertips at all times, and Amazon’s warehouses are data-rich operations, I assumed “anticipatory ordering” was already a highly developed thing–that the company moved products around the country (and the world) based on prognostications drawn from previous ordering patterns. But apparently it’s only the newest thing, and it may ultimately go a very aggressive step further than I thought it would. From Kwame Opam at the Verge:

“Drawing on its massive store of customer data, Amazon plans on shipping you items it thinks you’ll like before you click the purchase button. The company today gained a new patent for ‘anticipatory shipping,’ a system that allows Amazon to send items to shipping hubs in areas where it believes said item will sell well. This new scheme will potentially cut delivery times down, and put the online vendor ahead of its real-world counterparts.

Amazon plans to box and ship products it expects customers to buy preemptively, based on previous searches and purchases, wish lists, and how long the user’s cursor hovers over an item online. The company may even go so far as to load products onto trucks and have them ‘speculatively shipped to a physical address’ without having a full addressee. Such a scenario might lead to unwanted deliveries and even returns, but Amazon seems willing to take the hit.”
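The Verge piece names the signals (previous searches and purchases, wish lists, cursor hover time) but not how they'd be combined. Here's a toy sketch of how such a scheme might decide which regional hubs to stock, assuming a simple weighted sum per customer that is totaled per region and compared against a threshold. The weights, the threshold, and every name below (`CustomerSignals`, `interest_score`, `hubs_to_stock`) are hypothetical illustrations, not anything described in Amazon's patent.

```python
from dataclasses import dataclass

# Hypothetical weights: the reporting lists the signals, not how Amazon
# weighs them. These numbers are illustrative assumptions only.
WEIGHTS = {"searches": 0.3, "purchases": 0.5, "wish_list": 0.8, "hover_seconds": 0.05}


@dataclass
class CustomerSignals:
    region: str           # area served by a shipping hub
    searches: int         # searches for the item
    purchases: int        # past purchases in the item's category
    wish_list: bool       # item sits on the customer's wish list
    hover_seconds: float  # time the cursor lingered over the item


def interest_score(s: CustomerSignals) -> float:
    """Combine one customer's signals into a rough purchase-likelihood score."""
    return (WEIGHTS["searches"] * s.searches
            + WEIGHTS["purchases"] * s.purchases
            + WEIGHTS["wish_list"] * (1 if s.wish_list else 0)
            + WEIGHTS["hover_seconds"] * s.hover_seconds)


def hubs_to_stock(customers: list[CustomerSignals], threshold: float) -> list[str]:
    """Total the scores per region; return regions whose expected demand clears the threshold."""
    demand: dict[str, float] = {}
    for c in customers:
        demand[c.region] = demand.get(c.region, 0.0) + interest_score(c)
    return sorted(region for region, total in demand.items() if total >= threshold)


if __name__ == "__main__":
    customers = [
        CustomerSignals("Seattle", 3, 1, True, 20.0),
        CustomerSignals("Seattle", 1, 0, False, 5.0),
        CustomerSignals("Boise", 0, 0, False, 2.0),
    ]
    print(hubs_to_stock(customers, threshold=1.0))
```

With those made-up numbers, Seattle's two interested shoppers push the item past the threshold and Boise's idle browser doesn't, so the item would be "speculatively" parked near Seattle before anyone clicks buy.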

Tags: Kwame Opam