Those who still believe privacy can be preserved by legislation either haven’t thought through the realities or are deceiving themselves. Get ready for your close-up, because it’s not the pictures that are getting smaller, but the cameras. Tinier and tinier. Soon you won’t even notice them. And they can fly.

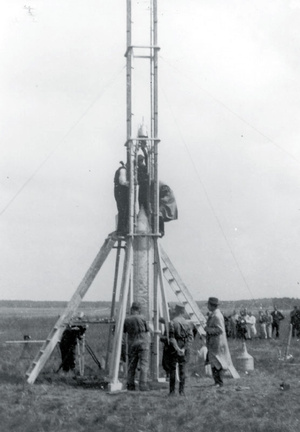

I have no doubt the makers of the Nixie, the wristwatch-drone camera, have nothing but good intentions, but not everyone using it will. From Joseph Flaherty at Wired:

“Being able to wear the drone is a cute gimmick, but its powerful software packed into a tiny shell could set Nixie apart from bargain Brookstone quadcopters. Expertise in motion-prediction algorithms and sensor fusion will give the wrist-worn whirlybirds an impressive range of functionality. A ‘Boomerang mode’ allows Nixie to travel a fixed distance from its owner, take a photo, then return. ‘Panorama mode’ takes aerial photos in a 360° arc. ‘Follow me’ mode makes Nixie trail its owner and would capture amateur athletes in a perspective typically reserved for Madden all-stars. ‘Hover mode’ gives any filmmaker easy access to impromptu jib shots. Other drones promise similar functionality, but none promise the same level of portability or user friendliness.

‘We’re not trying to build a quadcopter, we’re trying to build a personal photographer,’ says Jovanovic.

A Changing Perspective on Photography

[Jelena] Jovanovic and her partner Christoph Kohstall, a Stanford postdoc who holds a Ph.D. in quantum physics and a first-author credit in the journal Nature, believe photography is at a tipping point.

Early cameras were bulky, expensive, and difficult to operate. The last hundred years have produced consistently smaller, cheaper, and easier-to-use cameras, but future developments are forking. Google Glass provides the ultimate in portability, but leaves wearers with a fixed perspective. Surveillance drones offer unique vantage points, but are difficult to operate. Nixie attempts to offer the best of both worlds.”