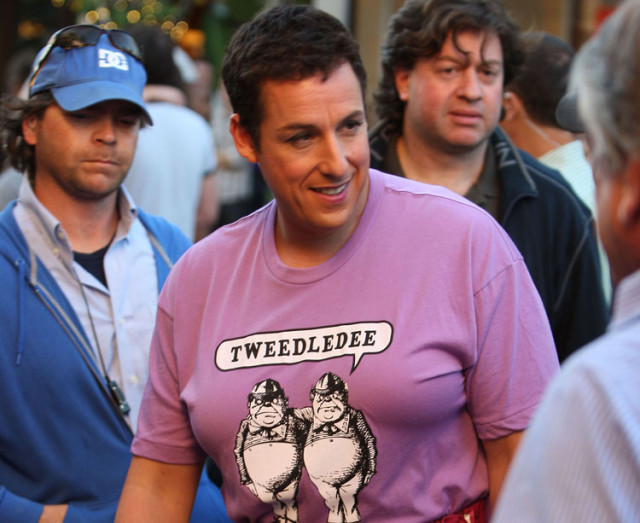

The Economist has a piece about the so-called “Obesity Penalty,” drawing on a new Swedish study that argues overweight people earn less than their weed-like co-workers. It's probably a good idea to be circumspect about the whole thing, or at least about the causes if the effect is real. An excerpt:

“BEING obese is the same as not having an undergraduate degree. That’s the bizarre message from a new paper that looks at the economic fortunes of Swedish men who enlisted in compulsory military service in the 1980s and 1990s. They show that men who are obese aged 18 grow up to earn 16% less than their peers of a normal weight. Even people who were overweight at 18—that is, with a body-mass index from 25 to 30—see significantly lower wages as an adult.

At first glance, a sceptic might be unconvinced by the results. After all, within countries the poorest people tend to be the fattest. One study found that Americans who live in the most poverty-dense counties are those most prone to obesity. If obese people tend to come from impoverished backgrounds, then we might expect them to have lower earnings as an adult.

But the authors get around this problem by mainly focusing on brothers. Every person included in their final sample—which is 150,000 people strong—has at least one male sibling also in that sample. That allows the economists to use ‘fixed-effects,’ a statistical technique that accounts for family characteristics (such as poverty). They also include important family characteristics like the parents’ income. All this statistical trickery allows the economists to isolate the effect of obesity on earnings.

So what does explain the ‘obesity penalty’?”
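
For anyone wondering what the "fixed-effects" step in the excerpt amounts to, here is a minimal Python sketch of the sibling-comparison idea. Everything in it is an assumption for illustration: the earnings figures are invented, the function names are my own, and the study's actual model is surely more elaborate. The point is only that comparing brothers with each other, rather than everyone with everyone, strips out whatever a family shares (such as poverty).

```python
from collections import defaultdict

# Invented toy data, NOT from the study: (family_id, obese_at_18, adult_earnings).
# Families "A" and "C" are better off (base 100), "B" and "D" poorer (base 60).
# By construction, being obese costs exactly 10 within any family.
ROWS = [
    ("A", 0, 100), ("A", 1, 90),
    ("B", 0, 60),  ("B", 1, 50),
    ("C", 0, 100), ("C", 0, 100),
    ("D", 1, 50),  ("D", 1, 50),
]

def pooled_slope(rows):
    """Naive comparison: regress earnings on obesity, ignoring families."""
    xs = [x for _, x, _ in rows]
    ys = [y for _, _, y in rows]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def within_family_slope(rows):
    """Sibling comparison: subtract each family's mean before regressing."""
    families = defaultdict(list)
    for fam, x, y in rows:
        families[fam].append((x, y))
    sxy = sxx = 0.0
    for members in families.values():
        mx = sum(x for x, _ in members) / len(members)
        my = sum(y for _, y in members) / len(members)
        for x, y in members:
            sxy += (x - mx) * (y - my)
            sxx += (x - mx) ** 2
    return sxy / sxx

print(pooled_slope(ROWS))         # -30.0: family poverty inflates the gap
print(within_family_slope(ROWS))  # -10.0: the penalty built into the toy data
```

In this toy set the naive comparison triples the penalty, because the obese brothers are concentrated in the poorer families. Note that only families whose brothers differ in weight status contribute to the within-family estimate.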