I think human beings will eventually go extinct without superintelligence to help us ward off big-impact challenges, yet I understand that Strong AI brings its own perils. I just don’t feel incredibly worried about it at the present time, though I think it’s a good idea to start focusing on the challenge today rather than tomorrow. In his Medium essay “Russell, Bostrom and the Risk of AI,” Lyle Cantor wonders whether humans are to computers as chimps are to humans. An excerpt:

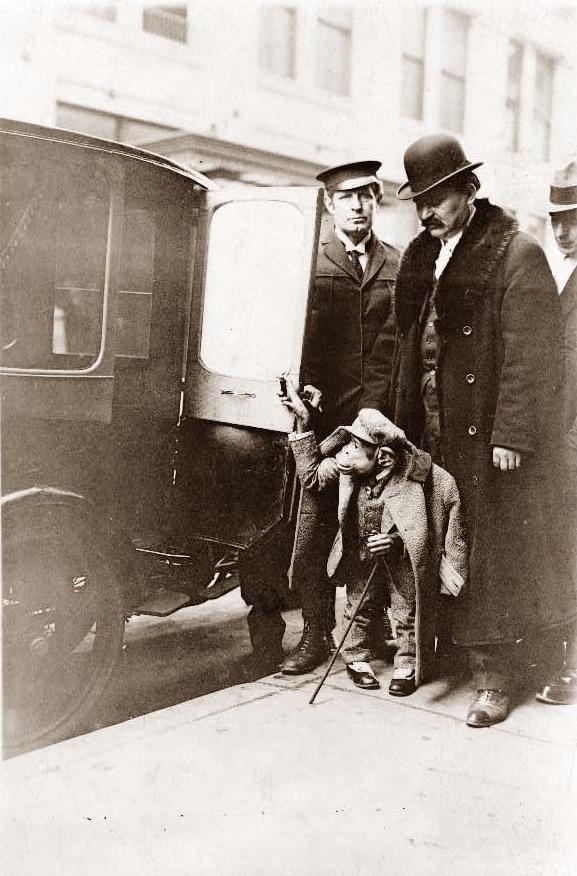

Consider the chimp. If we are grading on a curve, chimps are very, very intelligent. Compare them to any other species besides Homo sapiens and they're the best of the bunch. They have the rudiments of language, use very simple tools and have complex social hierarchies, and yet chimps are not doing very well. Their population is dwindling, and to the extent they are thriving, they are thriving under our sufferance, not their own strength.

Why? Because human civilization is a little like the paperclip maximizer: we don't hate chimps or the other animals whose habitats we are rearranging; we just see higher-value arrangements of the earth and water they need to survive. And we are only ever so slightly smarter than chimps.

In many respects our brains are nearly identical. Yes, the average human brain is about three times the size of an average chimp's, but we still share much of the same gross structure. And our neurons fire about 100 times per second and communicate through saltatory conduction, just like theirs do.

Compare that with the potential limits of computing. …

In terms of intellectual capacity, there's an awful lot of room above us. An AI could potentially think millions of times faster than us. Problems that take the smartest humans years to solve, it could solve in minutes. If a paperclip maximizer (or value-of-Goldman-Sachs-stock maximizer or USA hegemony maximizer or refined-gold maximizer) is created, why should we expect our fate then to be any different from that of chimps now?

Tags: Lyle Cantor, Nick Bostrom, Stuart Russell