Because of philosopher Nick Bostrom’s new book, Superintelligence, the specter of AI enslaving or eliminating humans has been getting a lot of play lately. In a Guardian piece, UWE Bristol Professor Alan Winfield argues that we should be concerned but not that concerned. He doesn’t think we’re close to being capable of building a Frankenstein monster that could write Frankenstein. Of course, machines might not need to think like us to surpass us. The opening:

“The singularity – or, to give it its proper title, the technological singularity. It’s an idea that has taken on a life of its own; more of a life, I suspect, than what it predicts ever will. It’s a Thing for techno-utopians: wealthy middle-aged men who regard the singularity as their best chance of immortality. They are Singularitarians, some seemingly prepared to go to extremes to stay alive for long enough to benefit from a benevolent super-artificial intelligence – a man-made god that grants transcendence.

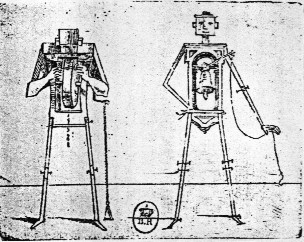

And it’s a thing for the doomsayers, the techno-dystopians. Apocalypsarians who are equally convinced that a super-intelligent AI will have no interest in curing cancer or old age, or ending poverty, but will – malevolently or maybe just accidentally – bring about the end of human civilisation as we know it. History and Hollywood are on their side. From the Golem to Frankenstein’s monster, Skynet and the Matrix, we are fascinated by the old story: man plays god and then things go horribly wrong.

The singularity is basically the idea that as soon as AI exceeds human intelligence, everything changes. There are two central planks to the hypothesis: one is that as soon as we succeed in building AI as smart as humans it rapidly reinvents itself to be even smarter, starting a chain reaction of smarter-AI inventing even-smarter-AI until even the smartest humans cannot possibly comprehend how it works. The other is that the future of humanity becomes in some sense out of control, from the moment of the singularity onwards.

So should we be worried or optimistic about the technological singularity? I think we should be a little worried – cautious and prepared may be a better way of putting it – and at the same time a little optimistic (that’s the part of me that would like to live in Iain M Banks’ The Culture). But I don’t believe we need to be obsessively worried by a hypothesised existential risk to humanity.”

Tags: Alan Winfield