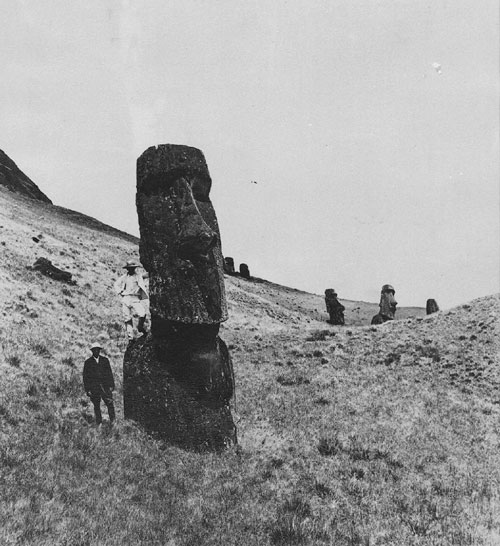

In his new Atlantic piece, “We’re Underestimating the Risk of Human Extinction,” Ross Andersen conducts a smart interview with Oxford’s Professor Nick Bostrom about the possibility of a global Easter Island, in which all of humanity vanishes from Earth. The conversation focuses on the threats we face not from our stars but from ourselves. I think Bostrom’s attitude is too dire, but he only has to be right once, of course. An excerpt:

“What technology, or potential technology, worries you the most?

Bostrom: Well, I can mention a few. In the nearer term I think various developments in biotechnology and synthetic biology are quite disconcerting. We are gaining the ability to create designer pathogens and there are these blueprints of various disease organisms that are in the public domain—you can download the gene sequence for smallpox or the 1918 flu virus from the Internet. So far the ordinary person will only have a digital representation of it on their computer screen, but we’re also developing better and better DNA synthesis machines, which are machines that can take one of these digital blueprints as an input, and then print out the actual RNA string or DNA string. Soon they will become powerful enough that they can actually print out these kinds of viruses. So already there you have a kind of predictable risk, and then once you can start modifying these organisms in certain kinds of ways, there is a whole additional frontier of danger that you can foresee.

In the longer run, I think artificial intelligence—once it gains human and then superhuman capabilities—will present us with a major risk area. There are also different kinds of population control that worry me, things like surveillance and psychological manipulation pharmaceuticals.”
