In a Popular Science piece, Erik Sofge offers a smackdown of the Singularity, arguing that it is less science than fiction. An excerpt:

“The most urgent feature of the Singularity is its near-term certainty. [Vernor] Vinge believed it would appear by 2023, or 2030 at the absolute latest. Ray Kurzweil, an accomplished futurist, author (his 2006 book *The Singularity Is Near* popularized the theory) and recent Google hire, has pegged 2029 as the year when computers will match and exceed human intelligence. Depending on which luminary you agree with, that gives humans somewhere between 9 and 16 good years before a pantheon of machine deities gets to decide what to do with us.

If you’re wondering why the human race is handling the news of its impending irrelevance with such quiet composure, it’s possible that the species is simply in denial. Maybe we’re unwilling to accept the hard truths preached by Vinge, Kurzweil and other bright minds.

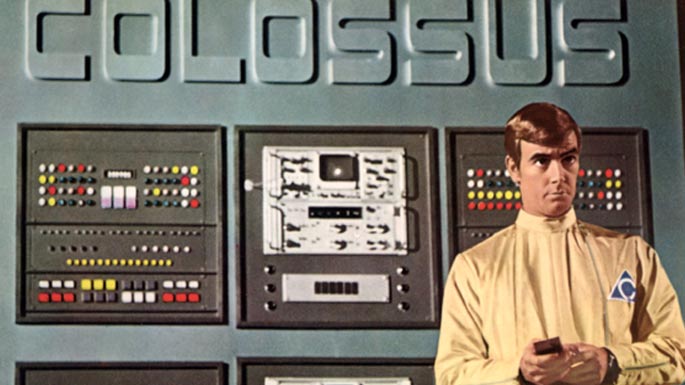

Just as possible, though, is another form of denial. Maybe no one in power cares about the Singularity, because they recognize it as science fiction. It’s a theory that was proposed by an SF writer. Its ramifications are couched in the imagery and language of SF. To believe in the Singularity, you have to believe in one of the greatest myths ever told by SF: that robots are smart, and always on the verge of becoming smarter than us.

More than 60 years of AI research indicates otherwise.”