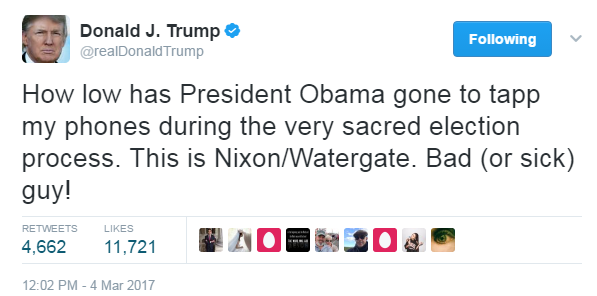

Penny Lane, or some place like it, used to be in our ears and in our eyes. Not so much in the twenty-first century. Now your head is supposed to be inside your phone, while sensors, cameras and computers aim to unobtrusively extract information from you.

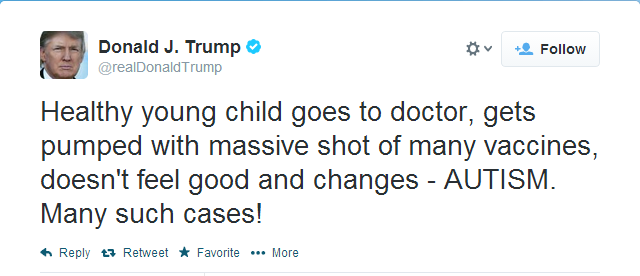

These robots do not resemble us at all, so there’s no uncanny valley—you’re not meant to detect any dips. As cars become driverless and the Internet of Things proliferates, there will be no opting out, no covering up. As Leonard Cohen groaned in 1992, just three years after Tim Berners-Lee unwittingly gifted us with a Trojan Horse, which we gleefully wheeled inside the gates: “There’ll be the breaking of the ancient western code / Your private life will suddenly explode.”

Three excerpts follow.

_______________________

The opening of the Economist article “What Machines Can Tell From Your Face”:

The human face is a remarkable piece of work. The astonishing variety of facial features helps people recognise each other and is crucial to the formation of complex societies. So is the face’s ability to send emotional signals, whether through an involuntary blush or the artifice of a false smile. People spend much of their waking lives, in the office and the courtroom as well as the bar and the bedroom, reading faces, for signs of attraction, hostility, trust and deceit. They also spend plenty of time trying to dissimulate.

Technology is rapidly catching up with the human ability to read faces. In America facial recognition is used by churches to track worshippers’ attendance; in Britain, by retailers to spot past shoplifters. This year Welsh police used it to arrest a suspect outside a football game. In China it verifies the identities of ride-hailing drivers, permits tourists to enter attractions and lets people pay for things with a smile. Apple’s new iPhone is expected to use it to unlock the homescreen (see article).

Set against human skills, such applications might seem incremental. Some breakthroughs, such as flight or the internet, obviously transform human abilities; facial recognition seems merely to encode them. Although faces are peculiar to individuals, they are also public, so technology does not, at first sight, intrude on something that is private. And yet the ability to record, store and analyse images of faces cheaply, quickly and on a vast scale promises one day to bring about fundamental changes to notions of privacy, fairness and trust.

The final frontier

Start with privacy.•

_______________________

From “The Next Challenge for Facial Recognition Is Identifying People Whose Faces Are Covered,” a James Vincent Verge piece:

The challenge of recognizing people when their faces are covered is one that plenty of teams are working on — and making quick progress.

Facebook, for example, has trained neural networks that can recognize people based on characteristics like hair, body shape, and posture. Facial recognition systems that work on portions of the face have also been developed (although, again, not ready for commercial use). And there are other, more exotic methods to identify people. AI-powered gait analysis, for example, can recognize individuals with a high degree of accuracy, and even works with low-resolution footage — the sort you might get from a CCTV camera.

One system for identifying masked individuals developed at the University of Basel in Switzerland recreates a 3D model of the target’s face based on what it can see. Bernhard Egger, one of the scientists behind the work, told The Verge that he expected “lots of development” in this area in the near future, but thought that there would always be ways to fool the machine. “Maybe machines will outperform humans on very specific tasks with partial occlusions,” said Egger. “But, I believe, it will still be possible to not be recognized if you want to avoid this.”

Wearing a rigid mask that covers the whole face, for example, would give current facial recognition systems nothing to go on. And other researchers have developed patterned glasses that are specially designed to trick and confuse AI facial recognition systems. Getting clear pictures is also difficult. Egger points out that we’re used to facial recognition performing quickly and accurately, but that’s in situations where the subject is compliant — scanning their face with a phone, for example, or at a border checkpoint.

Privacy advocates, though, say even if these systems have flaws, they’re still likely to be embraced by law enforcement.•

_______________________

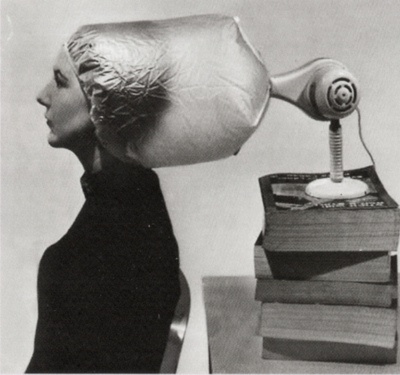

From “How Apple Is Putting Voices in Users’ Heads—Literally,” a Steven Levy Wired story about Apple technology that could be a boon for the hearing impaired—and, potentially, a bane for all of us:

Merging medical technology like Apple’s is a clear benefit to those needing hearing help. But I’m intrigued by some observations that Dr. Biever, the audiologist who’s worked with hearing loss patients for two decades, shared with me. She says that with this system, patients have the ability to control their sound environment in a way that those with good hearing do not—so much so that she is sometimes envious. How cool would it be to listen to a song without anyone in the room hearing it? “When I’m in the noisiest of rooms and take a call on my iPhone, I can’t hold my phone to ear and do a call,” she says. “But my recipient can do this.”

This paradox reminds me of the approach I’m seeing in the early commercial efforts to develop a brain-machine interface: an initial focus on those with cognitive challenges with a long-term goal of supercharging everyone’s brain. We’re already sort of cyborgs, working in a partnership of dependency with those palm-size slabs of glass and silicon that we carry in our pockets and purses. The next few decades may well see them integrated subcutaneously.

I’m not suggesting that we all might undergo surgery to make use of the tools that Apple has developed. But I do see a future where our senses are augmented less invasively. Pulling out a smartphone to fine-tune one’s aural environment (or even sending vibes to a brain-controlled successor to the iPhone) might one day be as common as tweaking bass and treble on a stereo system.•