Having more information readily available to us, more than we ever dreamed we could possess, has not clearly improved our decision-making. Why? Perhaps, like Chauncey Gardiner, we like to watch, but what we really love is to see what we want to see. Or maybe we just can't assimilate the endless reams of virtual data.

In an Ask Me Anything on Reddit, behavioral economist Richard Thaler, who has just published *Misbehaving*, floats an interesting idea: What about online decision engines that help with practical problems the way Expedia helps with travel itineraries? Not something feckless like the former Ask Jeeves, but a machine wiser and deeper.

Such a nudge would raise all sorts of ethical questions. Should we be offloading decisions (or even a significant part of them) to algorithms? Are the people writing the code manipulating us? But it would be a fascinating experiment.

The exchange:

Question:

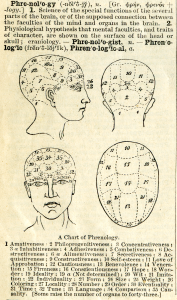

Do you think, with rapid advances in data collection, machine learning, and the ubiquity of technology that lowers the barrier to precise calculation and data interpretation, consumers/humans will start to behave more like Econs? Do you think that would be OPTIMAL, i.e., in our best interests? It seems a big 'flaw' in AI/robotics right now is that they are not 'human-like,' i.e., they are too much like Econs: they make no mistakes and always make optimal choices. Do you think it's more optimal for humans to become more like robots/machines that make no 'irrational' errors? Do you think it will eventually become that way, once technology makes it far less effortful to actually evaluate options rather than rely on intuitive heuristics?

Richard Thaler:

Two parts to this.

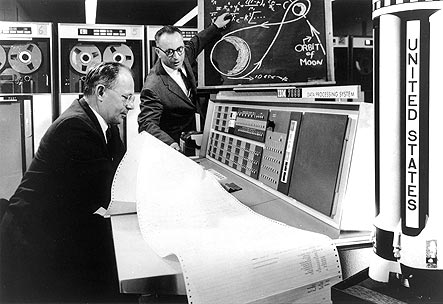

One is: I've long advocated using big data to help people make better decisions, an effort I call "smart disclosure." I've written a couple of New York Times columns devoted to this topic. The idea is that by making better data available, we can create new businesses that I call "choice engines."

Think of them as being like travel websites: they would make, say, choosing a mortgage as easy as finding a plane ticket from New York to Chicago.

More generally, however, the goal is not to turn humans into Econs. Econs (not economists) are jerks.

Econs don’t leave tips at restaurants they never intend to go back to. Don’t contribute to NPR. And don’t bother to vote.•