We can spend our time discerning patterns, but with the surfeit of information at our disposal, no one has that much time.

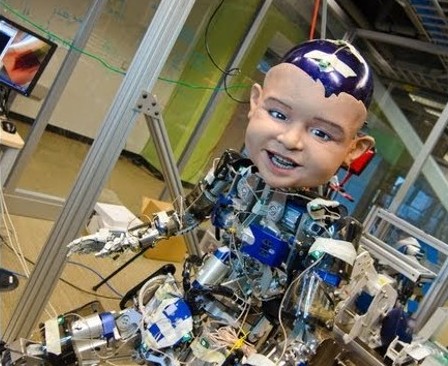

Quentin Hardy of the New York Times, who does reliably excellent reporting about technology, has penned a piece about where the relationship between AI and business may be headed, with an emphasis on recognizing, sorting, mapping and teaching. Whether this means we’ll require a new class of workers or merely new algorithms to make sense of the mountains of data, no one can say for sure, but someone or something will have to explain the new normal. Both carbon and silicon will likely be required, at least initially.

An excerpt:

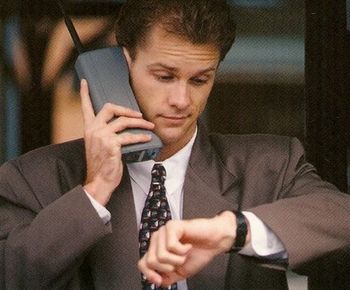

Over the last decade, smartphones, social networks and cloud computing have moved from feeding the growth of companies like Facebook and Twitter, leapfrogging to Uber, Airbnb and others that have used the phones, personal rating systems and powerful remote computers in the cloud to create their own new businesses.

Believe it or not, that stuff may be heading for the rearview mirror already. The tech industry’s new architecture is based not just on the giant public computing clouds of Google, Microsoft and Amazon, but also on their A.I. capabilities. These clouds create more efficient and supple use of computing resources, available for rent. Smaller clouds used in corporate systems are designed to connect to them.

The A.I. resources [Google Cloud head Diane B.] Greene is opening up at Google are remarkable. Google’s autocomplete feature that most of us use when doing a search can instantaneously touch 500 computers in several locations as it guesses what we are looking for. Services like Maps and Photos have over a billion users, sorting places and faces by computer. Gmail sifts through 1.4 petabytes of data, or roughly two billion books’ worth of information, every day.

Handling all that, plus tasks like language translation and speech recognition, Google has amassed a wealth of analysis technology that it can offer to customers. Urs Hölzle, Ms. Greene’s chief of technical infrastructure, predicts that the business of renting out machines and software will eventually surpass Google advertising. In 2015, ad profits were $16.4 billion.

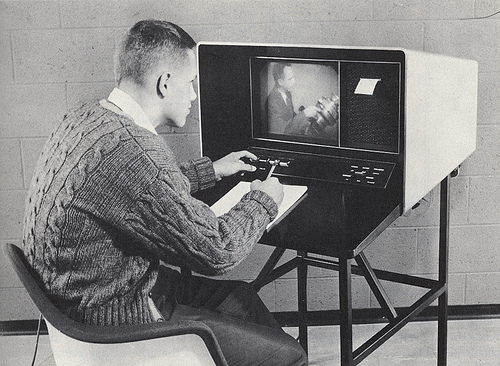

“In the ’80s, it was spreadsheets,” said Andreas Bechtolsheim, a noted computer design expert who was Google’s first investor. “Now it’s what you can do with machine learning.”•