The three Transformations humans must make if we’re to attain a higher plane of living, as set out in philosopher Nick Bostrom’s “Letter from Utopia”:

“To reach Utopia, you must first discover the means to three fundamental transformations.

The First Transformation: Secure life!

Your body is a deathtrap. This vital machine and mortal vehicle, unless it jams first or crashes, is sure to rust anon. You are lucky to get seven decades of mobility; eight if you be fortune’s darling. That is not sufficient to get started in a serious way, much less to complete the journey. Maturity of the soul takes longer. Why, even a tree-life takes longer.

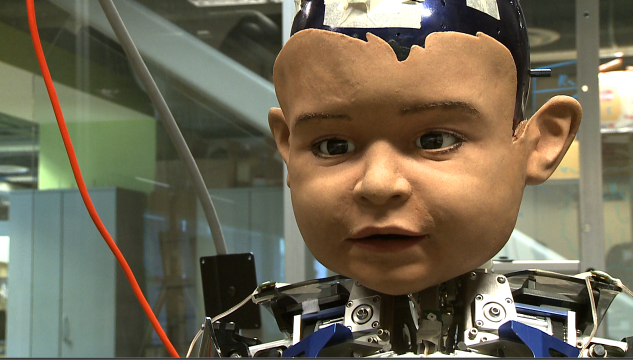

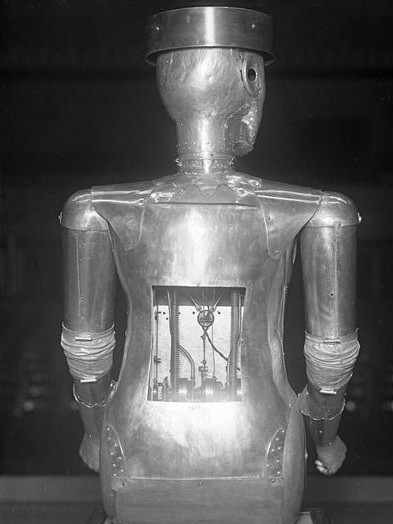

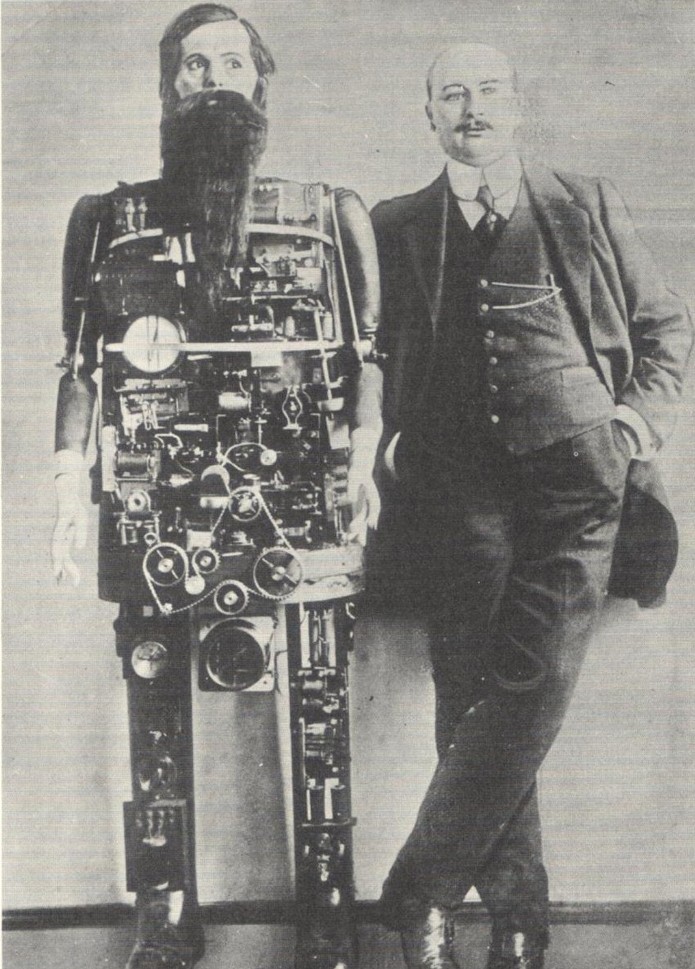

Death is not one but a multitude of assassins. Do you not see them? They are coming at you from every angle. Take aim at the causes of early death – infection, violence, malnutrition, heart attack, cancer. Turn your biggest gun on aging, and fire. You must seize the biochemical processes in your body in order to vanquish, by and by, illness and senescence. In time, you will discover ways to move your mind to more durable media. Then continue to improve the system, so that the risk of death and disease continues to decline. Any death prior to the heat death of the universe is premature if your life is good.

Oh, it is not well to live in a self-combusting paper hut! Keep the flames at bay and be prepared with liquid nitrogen, while you construct yourself a better habitation. One day you or your children should have a secure home. Research, build, redouble your effort!

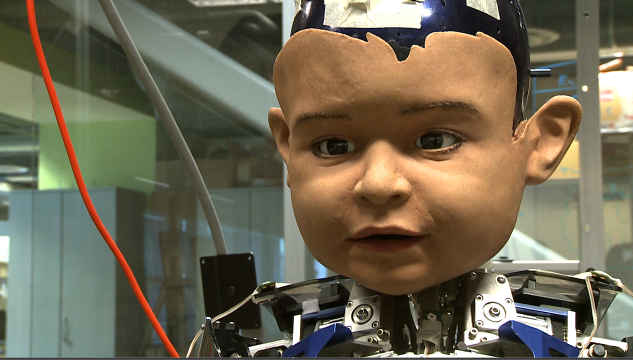

The Second Transformation: Upgrade cognition!

Your brain’s special faculties: music, humor, spirituality, mathematics, eroticism, art, nurturing, narration, gossip! These are fine spirits to pour into the cup of life. Blessed you are if you have a vintage bottle of any of these. Better yet, a cask! Better yet, a vineyard!

Be not afraid to grow. The mind’s cellars have no ceilings!

What other capacities are possible? Imagine a world with all the music dried up: what poverty, what loss. Give your thanks, not to the lyre, but to your ears for the music. And ask yourself, what other harmonies are there in the air, that you lack the ears to hear? What vaults of value are you witlessly debarred from, lacking the key sensibility?

Had you but an inkling, your nails would be clawing at the padlock.

Your brain must grow beyond any genius of humankind, in its special faculties as well as its general intelligence, so that you may better learn, remember, and understand, and so that you may apprehend your own beatitude.

Mind is a means: for without insight you will get bogged down or lose your way, and your journey will fail.

Mind is also an end: for it is in the spacetime of awareness that Utopia will exist. May the measure of your mind be vast and expanding.

Oh, stupidity is a loathsome corral! Gnaw and tug at the posts, and you will slowly loosen them up. One day you’ll break the fence that held your forebears captive. Gnaw and tug, redouble your effort!

The Third Transformation: Elevate well-being!

What is the difference between indifference and interest, boredom and thrill, despair and bliss?

Pleasure! A few grains of this magic ingredient are worth more than a king’s treasure, and we have it aplenty here in Utopia. It pervades into everything we do and everything we experience. We sprinkle it in our tea.

The universe is cold. Fun is the fire that melts the blocks of hardship and creates a bubbling celebration of life.

It is the birth right of every creature, a right no less sacred for having been trampled on since the beginning of time.

There is a beauty and joy here that you cannot fathom. It feels so good that if the sensation were translated into tears of gratitude, rivers would overflow.

I reach in vain for words to convey to you what it all amounts to… It’s like a rain of the most wonderful feeling, where every raindrop has its own unique and indescribable meaning – or rather it has a scent or essence that evokes a whole world… And each such evoked world is subtler, richer, deeper, more multidimensional than the sum total of what you have experienced in your entire life.

I will not speak of the worst pain and misery that is to be got rid of; it is too horrible to dwell upon, and you are already cognizant of the urgency of palliation. My point is that in addition to the removal of the negative, there is also an upside imperative: to enable the full flourishing of enjoyments that are currently out of reach.

The roots of suffering are planted deep in your brain. Weeding them out and replacing them with nutritious crops of well-being will require advanced skills and instruments for the cultivation of your neuronal soil. But take heed, the problem is multiplex! All emotions have a natural function. Prune carefully lest you accidentally reduce the fertility of your plot.

Sustainable yields are possible. Yet fools will build fools’ paradises. I recommend you go easy on your paradise-engineering until you have the wisdom to do it right.

Oh, what a gruesome knot suffering is! Pull and tug on those loops, and you will gradually loosen them up. One day the coils will fall, and you will stretch out in delight. Pull and tug, and be patient in your effort!

May there come a time when rising suns are greeted with joy by all the living creatures they shine upon.”