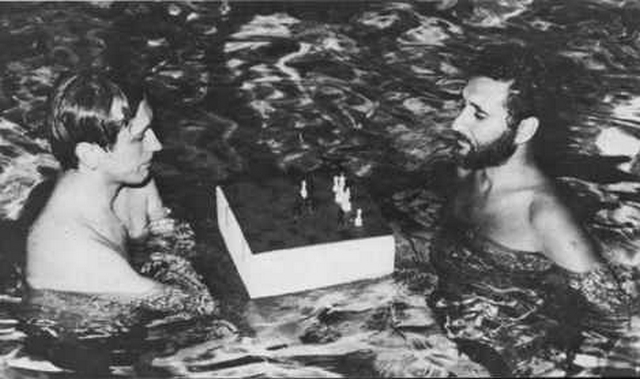

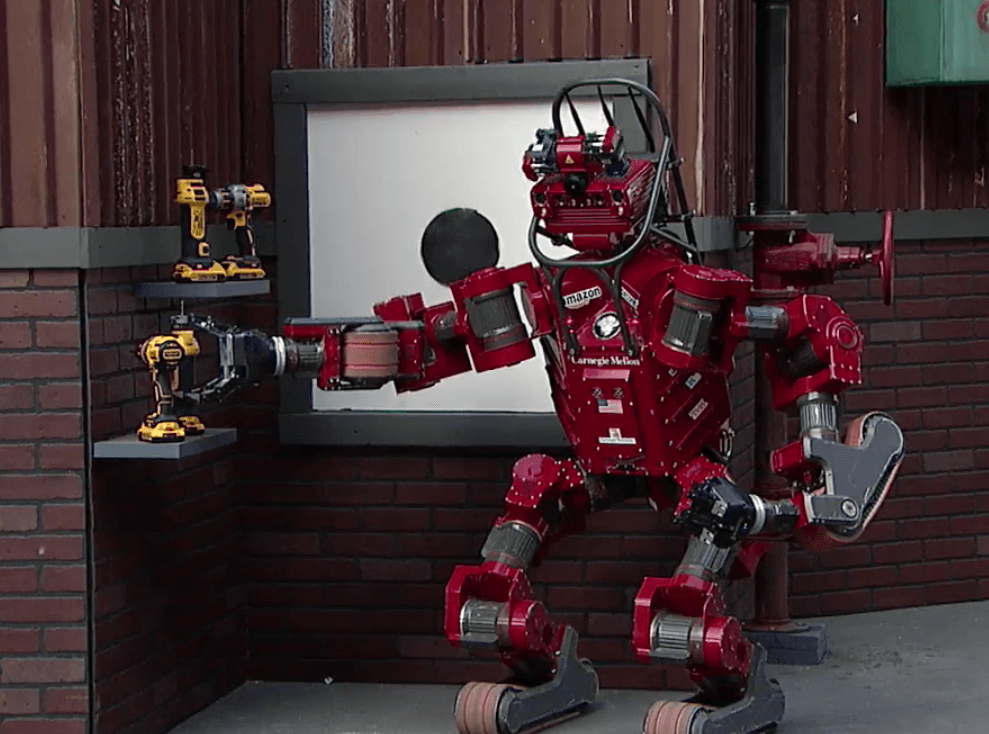

The psychologist Gary Marcus urged caution when Google’s AI recently defeated a good, but not champion, Go player. Most of those qualifications still pertain, but DeepMind just deep-sixed Lee Se-dol, one of the world’s best players. The human competitor remarked on the absence of the game’s usual psychological component, which he found disconcerting. “It’s like playing the game alone,” he said.

Below is an excerpt from Choe Sang-hun and John Markoff’s New York Times report on the match, followed by excerpts from Bruce Weber’s 1997 coverage of Garry Kasparov’s defeat by Deep Blue.

____________________________

From Choe and Markoff:

SEOUL, South Korea — Computer, one. Human, zero.

A Google computer program stunned one of the world’s top players on Wednesday in a round of Go, which is believed to be the most complex board game ever created.

The match — between Google DeepMind’s AlphaGo and the South Korean Go master Lee Se-dol — was viewed as an important test of how far research into artificial intelligence has come in its quest to create machines smarter than humans.

“I am very surprised because I have never thought I would lose,” Mr. Lee said at a news conference in Seoul. “I didn’t know that AlphaGo would play such a perfect Go.”

Mr. Lee acknowledged defeat after three and a half hours of play.

Demis Hassabis, the founder and chief executive of Google’s artificial intelligence team DeepMind, the creator of AlphaGo, called the program’s victory a “historic moment.”•

____________________________

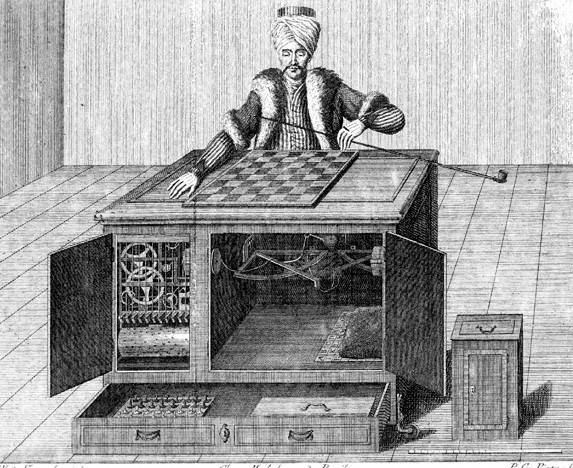

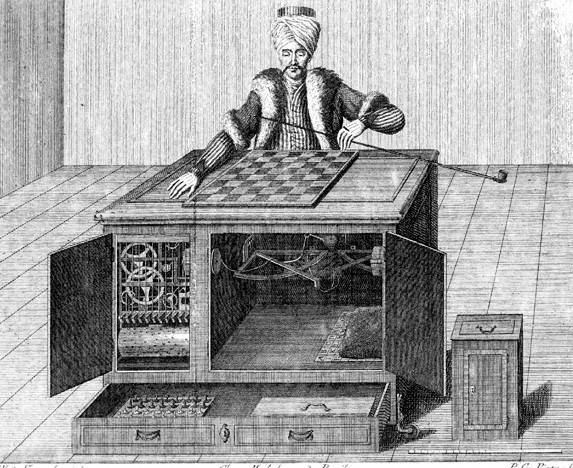

Garry Kasparov held off the machines, but only for so long. He defeated Deep Thought in 1989 and believed a computer could never best him. But in 1997, Deep Blue turned him, and humanity, into an also-ran in some key ways. The chess master couldn’t believe it at first; he assumed his opponent was being manipulated by humans behind the scenes, like the Mechanical Turk, the faux chess-playing machine from the 18th century. But no sleight of hand was needed.

Below are the openings of three New York Times articles by Bruce Weber, written during the Kasparov-Deep Blue match, that chart the rise of the machines.

Responding to defeat with the pride and tenacity of a champion, the I.B.M. computer Deep Blue drew even yesterday in its match against Garry Kasparov, the world’s best human chess player, winning the second of their six games and stunning many chess experts with its strategy.

Joel Benjamin, the grandmaster who works with the Deep Blue team, declared breathlessly: “This was not a computer-style game. This was real chess!”

He was seconded by others.

“Nice style!” said Susan Polgar, the women’s world champion. “Really impressive. The computer played a champion’s style, like Karpov,” she continued, referring to Anatoly Karpov, a former world champion who is widely regarded as second in strength only to Mr. Kasparov. “Deep Blue made many moves that were based on understanding chess, on feeling the position. We all thought computers couldn’t do that.”•

Garry Kasparov, the world chess champion, opened the third game of his six-game match against the I.B.M. computer Deep Blue yesterday in peculiar fashion, by moving his queen’s pawn forward a single square. Huh?

“I think we have a new opening move,” said Yasser Seirawan, a grandmaster providing live commentary on the match. “What should we call it?”

Mike Valvo, an international master who is a commentator, said, “The computer has caused Garry to act in strange ways.”

Indeed it has. Mr. Kasparov, who swiftly became more conventional and subtle in his play, went on to a draw with Deep Blue, leaving the score of Man vs. Machine at 1 1/2 apiece. (A draw is worth half a point to each player.) But it is clear that after his loss in Game 2 on Sunday, in which he resigned after 45 moves, Mr. Kasparov does not yet have a handle on Deep Blue’s predilections, and that he is still struggling to elicit them.•

In brisk and brutal fashion, the I.B.M. computer Deep Blue unseated humanity, at least temporarily, as the finest chess playing entity on the planet yesterday, when Garry Kasparov, the world chess champion, resigned the sixth and final game of the match after just 19 moves, saying, “I lost my fighting spirit.”

The unexpectedly swift denouement to the bitterly fought contest came as a surprise, because until yesterday Mr. Kasparov had been able to summon the wherewithal to match Deep Blue gambit for gambit.

The manner of the conclusion overshadowed the debate over the meaning of the computer’s success. Grandmasters and computer experts alike went from praising the match as a great experiment, invaluable to both science and chess (if a temporary blow to the collective ego of the human race) to smacking their foreheads in amazement at the champion’s abrupt crumpling.

“It had the impact of a Greek tragedy,” said Monty Newborn, chairman of the chess committee for the Association for Computing Machinery, which was responsible for officiating the match.

It was the second victory of the match for the computer — there were three draws — making the final score 3 1/2 to 2 1/2, the first time any chess champion has been beaten by a machine in a traditional match. Mr. Kasparov, 34, retains his title, which he has held since 1985, but the loss was nonetheless unprecedented in his career; he has never before lost a multigame match against an individual opponent.

Afterward, he was both bitter at what he perceived to be unfair advantages enjoyed by the computer and, in his word, ashamed of his poor performance yesterday.

“I was not in the mood of playing at all,” he said, adding that after Game 5 on Saturday, he had become so dispirited that he felt the match was already over. Asked why, he said: “I’m a human being. When I see something that is well beyond my understanding, I’m afraid.”•