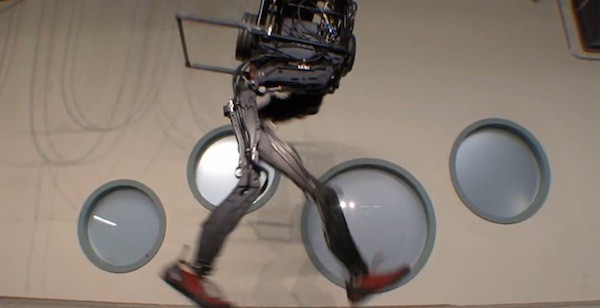

As someone consumed by robotics, automation, the potential for technological unemployment and its societal and political implications, I read as many books on the topic as I can, and I'm convinced that The Second Machine Age, the 2014 book coauthored by Andrew McAfee and Erik Brynjolfsson, is the best of the lot. If you're just beginning to think about these issues, start there.

In a post on his Financial Times blog, McAfee, who believes this time is different and that the Second Machine Age won't resemble the Industrial Age, writes about an NPR debate on the subject with MIT economist David Autor, who disagrees. An excerpt:

> Over the next 20-40 years, which was the timeframe I was looking at, I predicted that vehicles would be driving themselves; mines, factories, and farms would be largely automated; and that we'd have an extraordinarily abundant economy that didn't have anything like the same bottomless thirst for labour that the Industrial Era did.
>
> As expected, I found David's comments in response to this line of argument illuminating. He said: "If we'd had this conversation 100 years ago I would not have predicted the software industry, the internet, or all the travel or all the experience goods … so I feel it would be rather arrogant of me to say I've looked at the future and people won't come up with stuff … that the ideas are all used up."
>
> This is exactly right. We are going to see innovation, entrepreneurship, and creativity that I can't even begin to imagine (if I could, I'd be an entrepreneur or venture capitalist myself). But all the new industries and companies that spring up in the coming years will only use people to do the work if they're better at it than machines are. And the number of areas where that is the case is shrinking, I believe rapidly.