In January, I wrote this:

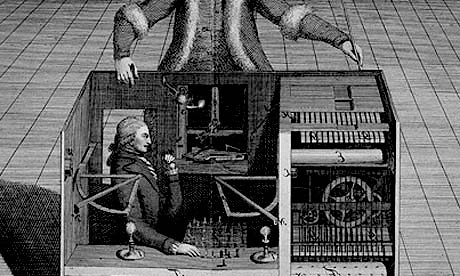

More than two centuries before Deep Blue deep-sixed humanity by administering a whupping to Garry Kasparov, that Baku-born John Henry, the Mechanical Turk purported to be a chess-playing automaton nonpareil. It was, of course, a fake, a contraption that hid within its case a genius-level human champion who controlled its every move. Such chicanery isn’t unusual for technologies nowhere near fruition, but the truth is that even ones oh-so-close to the finish line often need the aid of a hidden hand.

In “The Humans Working Behind The Curtain,” a smart Harvard Business Review piece by Mary L. Gray and Siddharth Suri, the authors explain how the “paradox of automation’s last mile” manifests itself even in today’s highly algorithmic world, an arrangement by which people are hired to quietly complete a task AI can’t, and one which is unlikely to be undone by further progress. Unfortunately, most of the stealth work for humans created in this way is piecemeal, lower-paid and prone to the rapid churn of disruption.

An excerpt:

Cut to Bangalore, India, and meet Kala, a middle-aged mother of two sitting in front of her computer in the makeshift home office that she shares with her husband. Our team at Microsoft Research met Kala three months into studying the lives of people picking up temporary “on-demand” contract jobs via the web, the equivalent of piecework online. Her teenage sons do their homework in the adjoining room. She describes calling them into the room, pointing at her screen and asking: “Is this a bad word in English?” This is what the back end of AI looks like in 2016. Kala spends hours every week reviewing and labeling examples of questionable content. Sometimes she’s helping tech companies like Google, Facebook, Twitter, and Microsoft train the algorithms that will curate online content. Other times, she makes tough, quick decisions about what user-generated materials to take down or leave in place when companies receive customer complaints and flags about something they read or see online.

Whether it is Facebook’s trending topics; Amazon’s delivery of Prime orders via Alexa; or the many instant responses of bots we now receive in response to consumer activity or complaint, tasks advertised as AI-driven involve humans, working at computer screens, paid to respond to queries and requests sent to them through application programming interfaces (APIs) of crowdwork systems. The truth is, AI is as “fully-automated” as the Great and Powerful Oz was in that famous scene from the classic film, where Dorothy and friends realize that the great wizard is simply a man manically pulling levers from behind a curtain. This blend of AI and humans, who follow through when the AI falls short, isn’t going away anytime soon. Indeed, the creation of human tasks in the wake of technological advancement has been a part of automation’s history since the invention of the machine lathe.

We call this ever-moving frontier of AI’s development, the paradox of automation’s last mile: as AI makes progress, it also results in the rapid creation and destruction of temporary labor markets for new types of humans-in-the-loop tasks.•

The Harvard Business Review piece covered terrain that Adrian Chen had explored from a different angle in Wired in 2014 with “The Laborers Who Keep Dick Pics and Beheadings Out of Your Facebook Feed.” The journalist studied those stealthily doing the psychologically dangerous business of keeping the Internet “safe.” The opening:

THE CAMPUSES OF the tech industry are famous for their lavish cafeterias, cushy shuttles, and on-site laundry services. But on a muggy February afternoon, some of these companies’ most important work is being done 7,000 miles away, on the second floor of a former elementary school at the end of a row of auto mechanics’ stalls in Bacoor, a gritty Filipino town 13 miles southwest of Manila. When I climb the building’s narrow stairwell, I need to press against the wall to slide by workers heading down for a smoke break. Up one flight, a drowsy security guard staffs what passes for a front desk: a wooden table in a dark hallway overflowing with file folders.

Past the guard, in a large room packed with workers manning PCs on long tables, I meet Michael Baybayan, an enthusiastic 21-year-old with a jaunty pouf of reddish-brown hair. If the space does not resemble a typical startup’s office, the image on Baybayan’s screen does not resemble typical startup work: It appears to show a super-close-up photo of a two-pronged dildo wedged in a vagina. I say appears because I can barely begin to make sense of the image, a baseball-card-sized abstraction of flesh and translucent pink plastic, before he disappears it with a casual flick of his mouse.

Baybayan is part of a massive labor force that handles “content moderation”—the removal of offensive material—for US social-networking sites. As social media connects more people more intimately than ever before, companies have been confronted with the Grandma Problem: Now that grandparents routinely use services like Facebook to connect with their kids and grandkids, they are potentially exposed to the Internet’s panoply of jerks, racists, creeps, criminals, and bullies. They won’t continue to log on if they find their family photos sandwiched between a gruesome Russian highway accident and a hardcore porn video. Social media’s growth into a multibillion-dollar industry, and its lasting mainstream appeal, has depended in large part on companies’ ability to police the borders of their user-generated content—to ensure that Grandma never has to see images like the one Baybayan just nuked.

So companies like Facebook and Twitter rely on an army of workers employed to soak up the worst of humanity in order to protect the rest of us. And there are legions of them—a vast, invisible pool of human labor.•

Chen has now teamed with Ciarán Cassidy to revisit the harrowing topic for a 20-minute documentary called “The Moderators.” It’s a fascinating peek into a hidden corner of our increasingly computerized world (one that’s even more relevant in the wake of the Kremlin-bot presidential election), as well as very good filmmaking.

We watch as trainees at an Indian company that quietly “cleans” unacceptable content from social-media sites are introduced to the sickening images they must scrub. That tired phrase “you can’t unsee this” gains new currency as the neophytes are bombarded by shock and gore. The movie puts the number of workers in this sector at 150,000, all trying to mitigate the chaos of the “largest experiment in anarchy we’ve ever had.” For these kids it’s a first job, a foot in the door even if they’re stepping inside a haunted house. You have to wonder, though, whether they will ultimately be changed in a Milgramesque sense, desensitized and disheartened, even if they don’t initially realize it.

To a certain degree, we are all like the moderators, despite their best efforts on our behalf. Pretty much everyone who’s gone online during these early decades of the Digital Age has witnessed an endless parade of upsetting images and footage that were never available during a more centralized era. Are we also children who don’t realize what we’re becoming?