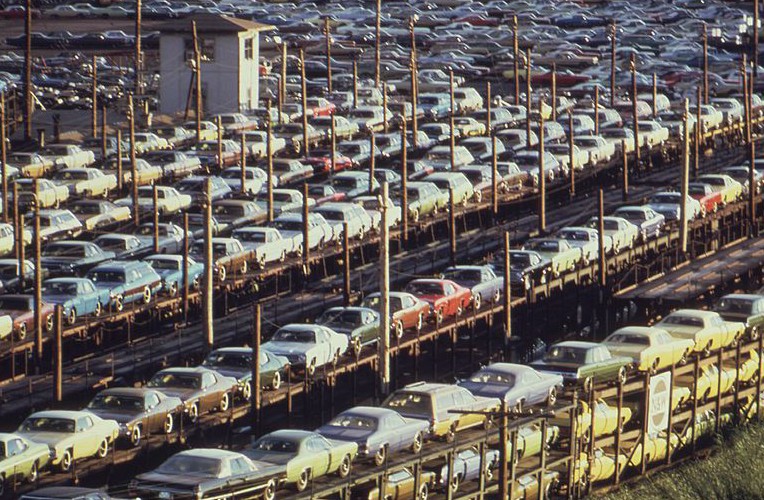

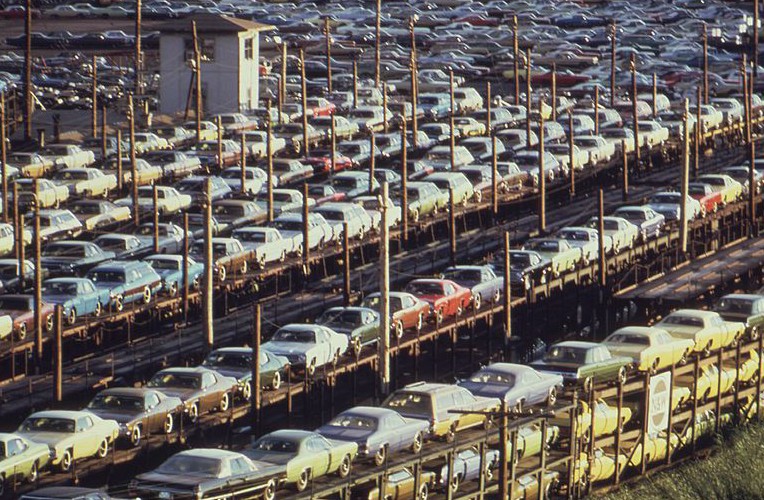

Reid Hoffman of LinkedIn published a post about what road transportation would be like if cars became driverless and communicated with one another autonomously.

There’ll certainly be benefits. If your networked car knows ahead of time that a certain roadway is blocked by weather conditions, your trip will be smoother. More important than mere convenience, of course, is that there would likely be far fewer accidents and less pollution.

The entrepreneur believes human-controlled vehicles will be restricted legally to specific areas where “antique” driving can be experienced. There’s no timeframe given for this legislation, but it seems very unlikely that it would occur anytime soon, nor does it seem particularly necessary since the nudge of high insurance rates will likely do the trick.

Hoffman acknowledges some of the many problems that would attend such a scenario if it’s realized. In addition to non-stop surveillance by corporations and government and the potential for large-scale hacking, there’s skill fade to worry about. (I don’t think that last concern will be precisely remedied by tooling down a virtual road via Oculus Rift, as Hoffman suggests for those pining for yesteryear.) I think the most interesting issues he conjures are advertisers paying car companies to direct traffic down a certain path to expose travelers to businesses, and the best routes being conferred upon higher-paying customers. That would be the Net Neutrality argument relocated to the streets and highways.

Hoffman’s post is definitely worth reading. An excerpt:

Autonomous vehicles will also be able to share information with each other better than human drivers can, in both real-time situations and over time. Every car on the road will benefit from what every other car has learned. Driving will be a networked activity, with tighter feedback loops and a much greater ability to aggregate, analyze, and redistribute knowledge.

Today, as individual drivers compete for space, they often work against each other’s interests, sometimes obliviously, sometimes deliberately. In a world of networked driverless cars, driving retains the individualized flexibility that has always made automobility so attractive. But it also becomes a highly collaborative endeavor, with greater cooperation leading to greater efficiency. It’s not just steering wheels and rear-view mirrors that driverless cars render obsolete. You won’t need horns either. Or middle fingers.

Already, the car as network node is what drives apps like Waze, which uses smartphone GPS capabilities to crowd-source real-time traffic levels, road conditions, and even gas prices. But Waze still depends on humans to apprehend the information it generates. Autonomous vehicles, in contrast, will be able to generate, analyze, and act on information without human bottlenecks. And when thousands and then even millions of cars are connected in this way, new capabilities are going to emerge. The rate of innovation will accelerate – just as it did when we made the shift from standalone PCs to networked PCs.

So we as a society should be doing everything we can to reach this better future sooner rather than later, in ways that make the transition as smooth as possible. And that includes prohibiting human-driven cars in many contexts. On this particular road trip, the journey is not the reward. The destination is.•