Economist Tyler Cowen just did a fun Ask Me Anything on Reddit, discussing driverless cars, the Hyperloop, wealth redistribution, Universal Basic Income, the American Dream, etc.

Cowen also discusses Peter Thiel’s role in the Trump Administration, though his answer seems too coy. We’re not talking about someone who just so happens to work for a “flawed” Administration, but about a serious supporter of a deeply racist campaign to elect a wholly unqualified President and empower a cadre of Breitbart bigots. Trump owns the mess he’s creating, but so does Thiel. The most hopeful thing you can say about the Silicon Valley billionaire, who was also sure there were WMDs in Iraq, is that outside of his realm he has no idea what he’s doing. The least hopeful is that he’s just not a good person.

A few exchanges follow.

Question:

What is an issue or concept in economics that you wish were easier to explain so that it would be given more attention by the public?

Tyler Cowen:

The idea that a sound polity has to be based on ideas other than just redistribution of wealth.

Question:

What do you think about Peter Thiel’s relationship with President Trump?

Tyler Cowen:

I haven’t seen Peter since his time with Trump. I am not myself a Trump supporter, but wish to reserve judgment until I know more about Peter’s role. I am not in general opposed to the idea of people working with administrations that may have serious flaws.

Question:

In a recent article by you, you spoke about who in the US was experiencing the American Dream, finding evidence that the Dream is still alive and thriving for Hispanics in the U.S. What challenges do you perceive now with the new Administration that might reduce the prospects for this group?

Tyler Cowen:

Breaking up families, general feeling of hostility, possibly damaging the economy of Mexico and relations with them. All bad trends. I am hoping the strong and loving ties across the people themselves will outweigh that. We will see, but on this I am cautiously optimistic.

Question:

Do you think convenience apps like Amazon grocery make us more complacent?

Tyler Cowen:

Anything shipped to your home — worry! Getting out and about is these days underrated. Serendipitous discovery and the like. Confronting the physical spaces we have built, and, eventually, demanding improvements in them.

Question:

Given that universal basic income or similar scheme will become necessity after large scale automation kicks in, will these arguments about fiscal and budgetary crisis still hold true?

And with self driving cars and tech like Hyperloop, wouldn’t the rents in the cities go down?

Tyler Cowen:

Driverless cars are still quite a while away in their most potent form, as that requires redoing the whole infrastructure. But so far I see location only becoming more important, even in light of tech developments, such as the internet, that were supposed to make it less important. It is hard for me to see how a country with so many immigrants will tolerate a UBI. I think that idea is for Denmark and New Zealand, I don’t see it happening in the United States. Plus it can cost a lot too. So the arguments about fiscal crisis I think still hold.

Question:

What is the most underrated city in the US? In the world?

Tyler Cowen:

Los Angeles is my favorite city in the whole world, just love driving around it, seeing the scenery, eating there. I still miss living in the area.

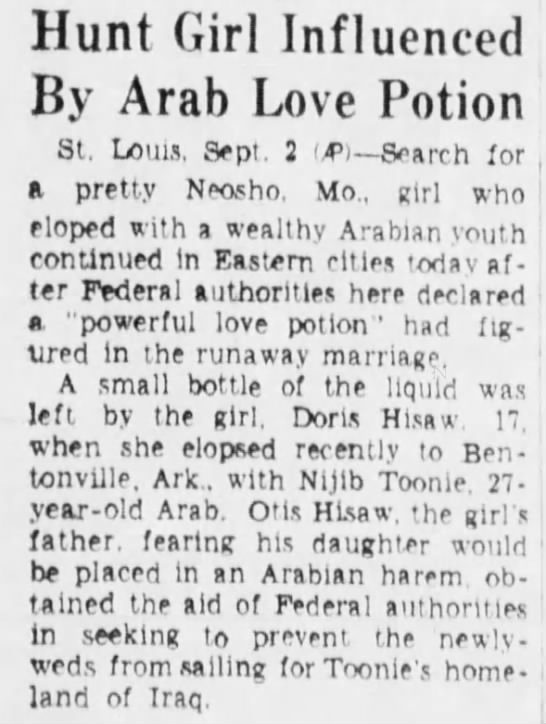

Question:

I am a single guy. Can learning economics help me find a girlfriend?

Tyler Cowen:

No, it will hurt you. Run the other way!