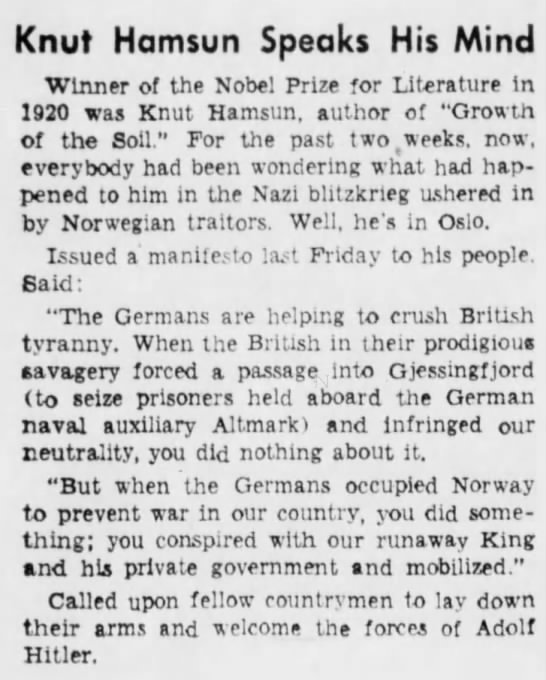

Facts today come in two flavors: original and alternative.

Fox kicked off the Fake News Age in earnest just over two decades ago. The unspoken reason for selling lies and conspiracies and wedge issues rather than reality is that Republican policy had become twisted into something almost unrecognizable and truly deleterious to any non-rich citizen. It’s worked quite well as a strategy, even if it’s often made the popular vote at the national level unattainable.

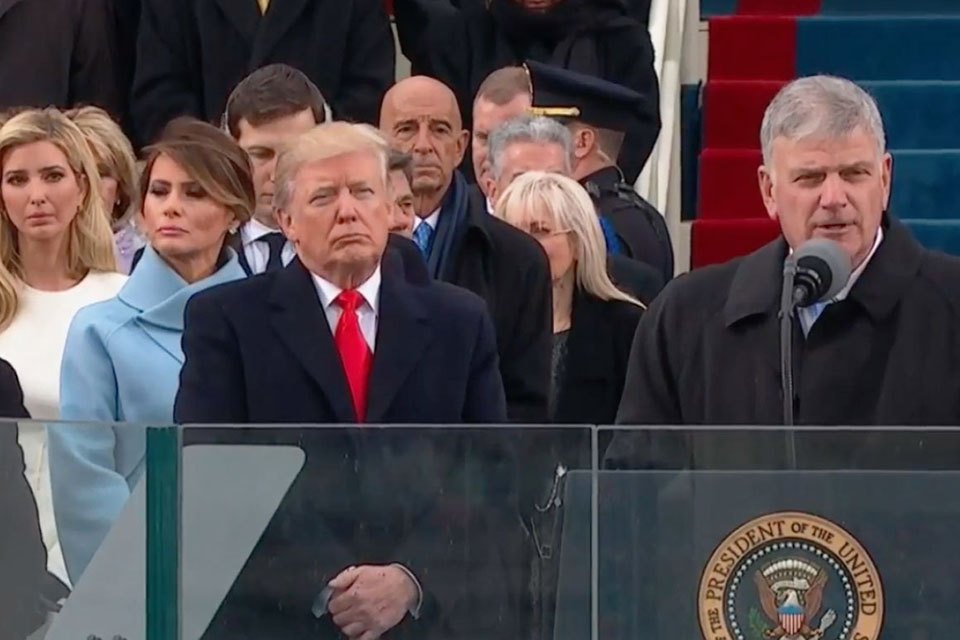

The most recent Presidential election, with its armies of bots, alt-right trolls and Russian interference, used Big Data to deliver lies at the granular level. It seemed shocking, although our society and technology have been heading in this direction for a long time. It was almost inevitable.

Of course, factual distortions are nothing new, nor are they limited to current events. History can also be a funny thing, as the dangerous absurdity of modern North Korea reminds us every day. Suki Kim, author of Without You, There Is No Us, just conducted a Reddit AMA about her experience going undercover as a schoolteacher in the deeply troubled, delusional state to learn more about the culture. In three exchanges, she addresses historical distortions about the country that exist on the inside and also the outside.

Question:

What wildly held belief among your students surprised you the most?

Suki Kim:

There were so many things. They just learn totally upside down information about most things. But one thing I think most people do not realize is that they learn that South Korea & US attacked North Korea in 1950, and that North Korea won the war due to the bravery of their Great Leader Kim Il Sung. So they celebrate Victory Day, which is a huge holiday there. So this complete lie about the past then makes everything quite illogical. Because how do you then explain the fact that Korea is divided still, if actually North Korea “won” the war? One would have to question that strange logic, which they do not. So it’s not so much that they get taught lies as education, but that that second step of questioning what does not make sense, in general, does not happen, not because they are stupid but because they are forbidden and also their intelligence is destroyed at young age. There were many many examples of such.

Question:

In your experience, what are the biggest misconceptions Americans have about either North or South Korea?

Suki Kim:

I think the biggest misconception goes back to the basic premise. Most Americans have no idea why there are two Koreas, or why there are 30,000 US soldiers in South Korea and why North Korea hates America so much. That very basic fact has been sort of written out of the American consciousness. By repackaging the Korean War as a civil war, it has now created decades of a total misconception. The fact that the US had actually drawn the 38th Parallel that cut up the Korean peninsula, not in 1950 (the start of the war) but in 1945 at the liberation of Korea from Japan is something that no Korean has forgotten — that was the beginning of the modern Korean tragedy. That the first Great Leader (the grandfather of the current Great Leader) was the creation of the Soviet Union (along with the US participation) is another horrible puzzle piece that Americans have conveniently forgotten.

Question:

Anyone know where can I find information regarding how the first Great Leader was a creation of the U.S.A. & Soviets? I’d love to read about it

Suki Kim:

That would be taking it out of the context to claim that first Great Leader was “created” by US. He was a soldier (protege of the Soviet), while US participated in that set up handpicking the US educated South Korean first president. US had drawn the 38th Parallel, and that division was trumpeted by the Cold War, two separate govts formed by 1948 & war broke out in 1950. That is a very simplified version of the history of the two Koreas which most Americans don’t remember and now wonder why they are in South Korea today and why is North Korea mad at them. If you are genuinely curious, there are many many books on this topic by serious historians.