Probably because I came before smartphones, I often catch myself thinking of them as not exactly a fad but as future artifacts of a period that will ultimately pass. I’ll be happier once people stop staring at them in a daze, living inside them, once this epidemic has ended. It must be a temporary form of insanity. In those moments it seems similar to the opioid crisis.

Of course, that’s not going to work out as my fantasy would have it. The shape of the tool may change—perhaps disappear entirely—but we’ll come to realize belatedly that we were in their pockets all along, not the other way around.

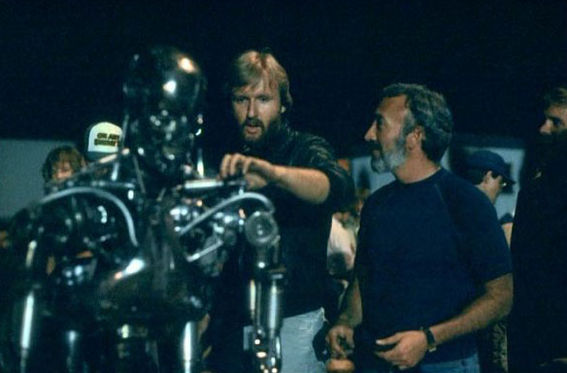

James Cameron, a truly miserable man in so many ways, is right when he tells the Hollywood Reporter that the machines are already our overlords, the emergence of superintelligence not even necessary for the transition of power. Then again, living under even the most soul-crushing machines would probably be preferable to having to answer to Cameron. The interview with the director and Deadpool helmer Tim Miller was conducted by Matthew Belloni and Borys Kit. An excerpt:

Question:

The conflict between technology and humanity is a theme in a lot of Jim’s movies. Does technology scare you?

James Cameron:

Technology has always scared me, and it’s always seduced me. People ask me: “Will the machines ever win against humanity?” I say: “Look around in any airport or restaurant and see how many people are on their phones. The machines have already won.” It’s just [that] they’ve won in a different way. We are co-evolving with our technology. We’re merging. The technology is becoming a mirror to us as we start to build humanoid robots and as we start to seriously build AGI — general intelligence — that’s our equal. Some of the top scientists in artificial intelligence say that’s 10 to 30 years from now. We need to get the damn movies done before that actually happens! And when you talk to these guys, they remind me a lot of that excited optimism that nuclear scientists had in the ’30s and ’40s when they were thinking about how they could power the world. And taking zero responsibility for the idea that it would instantly be weaponized. The first manifestation of nuclear power on our planet was the destruction of two cities and hundreds of thousands of people. So the idea that it can’t happen now is not the case. It can happen, and it may even happen.

Tim Miller:

Jim is a more positive guy [than I am] in the present and more cynical about the future. I know Hawking and Musk think we can put some roadblocks in there. I’m not so sure we can. I can’t imagine what a truly artificial intelligence will make of us. Jim’s brought some experts in to talk to us, and it’s really interesting to hear their perspective. Generally, they’re scared as shit, which makes me scared.

James Cameron:

One of the scientists we just met with recently, she said: “I used to be really, really optimistic, but now I’m just scared.” Her position on it is probably that we can’t control this. It has more to do with human nature. Putin recently said that the nation that perfects AI will dominate or conquer the world. So that pretty much sets the stage for “We wouldn’t have done it, but now those guys are doing it, so now we have to do it and beat them to the punch.” So now everybody’s got the justification to essentially weaponize AI. I think you can draw your own conclusions from that.

Tim Miller:

When it happens, I don’t think AI’s agenda will be to kill us. That seems like a goal that’s beneath whatever enlightened being that they’re going to become because they can evolve in a day what we’ve done in millions of years. And I don’t think that they have the built-in deficits that we have, because we’re still dealing with the same kind of urges that made us climb down from the trees and kill everybody else. I choose to believe that they’ll be better than us.•
