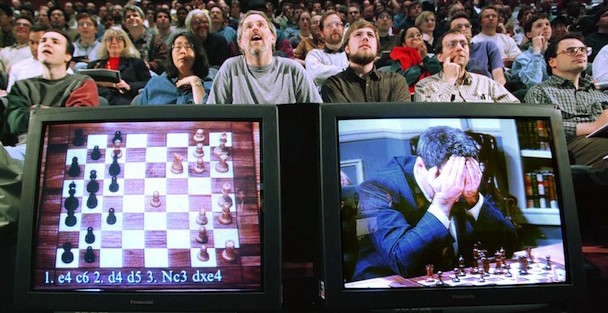

When Garry Kasparov was defeated by Deep Blue, a key breaking point was his mistaking a glitch in his computer opponent for a human level of understanding. Such strange behaviors can throw us off our game, but perhaps they can also be illuminating. In his Nautilus article “Artificial Intelligence Is Already Weirdly Human,” David Berreby argues that neural-network oddities, something akin to AI meeting ET, might be useful. An excerpt:

Neural nets sometimes make mistakes, which people can understand. (Yes, those desks look quite real; it’s hard for me, too, to see they are a reflection.) But some hard problems make neural nets respond in ways that aren’t understandable. Neural nets execute algorithms—a set of instructions for completing a task. Algorithms, of course, are written by human beings. Yet neural nets sometimes come out with answers that are downright weird: not right, but also not wrong in a way that people can grasp. Instead, the answers sound like something an extraterrestrial might come up with.

These oddball results are rare. But they aren’t just random glitches. Researchers have recently devised reliable ways to make neural nets produce such eerily inhuman judgments. That suggests humanity shouldn’t assume our machines think as we do. Neural nets sometimes think differently. And we don’t really know how or why.

That can be a troubling thought, even if you aren’t yet depending on neural nets to run your home and drive you around. After all, the more we rely on artificial intelligence, the more we need it to be predictable, especially in failure. Not knowing how or why a machine did something strange leaves us unable to make sure it doesn’t happen again.

But the occasional unexpected weirdness of machine “thought” might also be a teaching moment for humanity. Until we make contact with extraterrestrial intelligence, neural nets are probably the ablest non-human thinkers we know.
